Soft Skills 2021

Increase Your Data Quality Using AI

More and more businesses are integrating machine learning algorithms to automatize manufacturing and reduce costs. To train neural networks capable of producing sufficient quality so that the result could be considered acceptable and models could be integrated into business processes, high-quality data is required. This can be achieved by selecting a training dataset and checking its quality.

But unfortunately, this is not the only problem of machine learning. Systems trained on high-quality images provide good results only if images that go through them in production are of high quality too, but, just like in the case of a training dataset, production images rarely meet these requirements. Not so long ago a group of data scientists proposed a solution — Super-Resolution Generative Adversarial Networks (SRGAN) to increase quality of pictures before putting them into the network for processing.

The main difference between SRGAN and a regular GAN (generative adversarial network) is the use of more complex generator and discriminator architectures. Super-Resolution Generative Adversarial networks use deep convolutional networks because only they are able to achieve high accuracy when working with images.

![Picture 1 — SRGAN architechture [1]](/images/blogs/big/24600.jpg)

The generator is based on Residual CNN, the effectiveness of which has been proven by the networks of the ResNet family. This kind of architecture solves the problem of loss of information with a large number of network layers. The uniqueness of the residual network lies in the fact that it uses fast connections.

Input information of the block is duplicated by two parts, the first part is sequentially passed through the layers of the block, and the second remains untouched. Before using the activation function, both parts are summed element by element. The given mechanism seems to be very simple from a mathematical point of view but ultimately helps the network to achieve higher quality.

![Picture 2 — Residual block [2]](/images/blogs/big/24601.jpg)

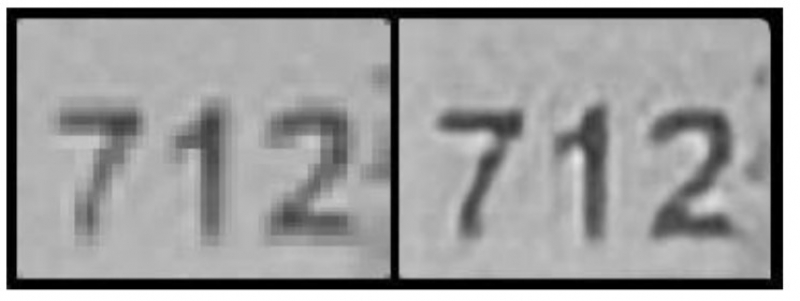

Another unique component of the generator network is the layer for image enlarging (Pixel Shuffle layer). It transforms the data so that the number of channels would decrease, but the height and width of the image would increase. For example, let's take a look at the tensor of size (N, L, H, W), where N is a number of pictures, L — number of channels, H — height, W — width. The layer will return another tensor with shape (N, C, H * U, W * U), where U is the image magnification parameter, and C is the quotient of the square of L and U.

The network trains by passing original and reduced with bicubic interpolation images through the network. As a result, the SRGAN network has to learn to generate high-resolution images from low-resolution images.

In picture 4 you can see the result of my own implementation of SRGAN.

This absolutely says a lot about the possibility of image quality increasing using artificial intelligence. The network is not perfect yet, but the community is working on it. A new modification of the Super-Resolution Generative Network is already proposed, called ESRGAN where E stands for enhanced. I strongly believe that in less than five years we can fully find the best solution for the image quality problem and this network is just the beginning of something incredible we can not even imagine.

Written by Evgenii Gutin

References

1. Christian Ledig, Lucas Theis, Ferenc Huszar. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. arxiv.org/pdf/1609.4 802.pdf

2. Stanislav Litvinov. ResNet (34, 50, 101): "Residual" CNN for image classification. neurohive.io/ru/vidy-nejrosetej/resnet34−50−101