The “Open Data of the Russian Federation” competition was held for the third time. This competition, organized by federal ministries, services and agencies, venture capital investors, large innovative companies, the community of data experts and other non-profit organizations, aims to establish communication between the overseers of state websites and services, and those who use open data. Competition’s participants were tasked with finding out more about what information is required of those who needed, the development of modern digital services, and also shaping their vision of the development of state information policy.

A large amount of open data is published by public authorities, but it is not so easy to access and use effectively. To do so, professionals have to solve certain practical problems, Dmitry Muromtsev explains. Dr. Muromtsev is the head of the Department of Informatics and Applied Mathematics, and head of the International Laboratory of Information Science and Semantic Technologies at ITMO University. He was also a member of the Hackathon on Open Data jury.

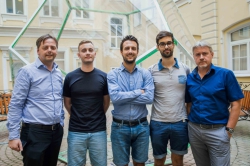

As part of this competition, different events are held all around the country. In St. Petersburg, last weekend, the Open Data Hackathon ran for 2 days and was attended by students and graduates of universities from Moscow and St. Petersburg. The Hackathon was organized by ITMO University, the Open Government Initiative, and the European University’s Institute for the Rule of Law.

Participants of the competition either formed teams of 3 to 5 people, or participated as individuals. They had to have experience in Linked Data, Artificial Intelligence and Machine Learning. Altogether 5 teams participated in the challenge. Over the two days, the teams had to come up with the most creative solutions for the cases provided in order to win the prize money of 100,000 rubles.

The teams looked for practical solutions to existing issues faced by Russian government agencies. For example, in 2016, the Russian government announced an upcoming reform to its supervisory system. One of its priorities is the automatization of supervision of businesses and enterprises. A core idea of this initiative is the transition from a system of all-encompassing inspections towards a more risk-based selection. Optimizing the system to focus on medium or high risk companies requires a technical solution which can be developed with the help of machine learning.

Before the hackathon started, participants received a data array containing the list of more than 60,000 venues and facilities across 25 regions of the country, all of which were slated for inspection in 2018. The teams’ objective was to use this training sample to predict the risk category of each enterprise and to understand and explain to the jury the anomalies and patterns observed in the assignation of categories.

“A special complex analytical task was prepared for the Hackathon, which could be solved using machine learning technology based on open data. One of the main partners, the European University’s Institute for the Rule of Law, based on data from RosTrud, prepared a sample of the data. But, this data was insufficient and had to be combined with open data from other sources and registers”, shared Dmitry Muromtsev.

Do children’s camps and nuclear power plants have the same level of risk? Which regions and agencies are more accurate in their definitions of various risk categories? And why are inspection procedures in some regions different to those in neighboring areas? Participants of the hackathon had to not only use the initial dataset to answer these questions, but also study the subject area to identify what kinds of additional information would help to achieve the best result.

“We had to study a lot of additional information related to the subject area; we often had to untangle numerous existing inconsistencies that occur on state authorities resources. In the course of our work we tried different machine learning methods; we were interested in using the algorithms in order to eventually come to understand what results can be obtained at the output. Interestingly enough, we checked many possible solutions but the one that worked was the simplest of all”, said one of the participants from the St. Petersburg Academic University team.

While the task was quite specific, besides the formal requirements, the participants of the Hackathon had to decide and suggest what technology should be used to analyze periodically-published open data in order to solve complex analytic problems, added Dmitry Muromtsev. He noted that the results, which were obtained through the use of machine learning methods, as well as the curious patterns, can help with optimizing the work of a number of government agencies in the future.

“Looking for interesting connections between different sources of data can result in the development of recommendations that can help to create connections between different sources. For example, such sources could be two federal state authorities. If the technique demonstrates a high performance, these machine learning algorithms may be included in the analytical systems of the relevant departments which are then able, based on this data, to change the approach to their work; in this case, with supervisory inspections, for example, optimizing the amount of inspections will make them more meaningful and send them to the right places where there are actual problems”, explained Dmitry Muromtsev.

Moreover, as explained by the participants of the Hackathon, this two-day event made it possible to identify several problems that still prevent open data from being used in the most effective way possible. In particular, the resources of the public authorities today are not consistent when it comes to data; as such, information often has to be dealt with manually. Often, information is duplicated and the same data is found several times, in slightly different iterations. As such, in the future, it’s important to introduce common and clear standards for data, which will make it easier to analyze data while reducing costs of specific tasks.

Throughout the whole process, the teams were able to assess the effectiveness of their proposed models and track their position on an online leaderboard. As a result of the final decision of the jury, which also took into account objective data in the table, the winners were the team from St. Petersburg Academic University who won the grand prize of 100,000 rubles.