What is emotional AI?

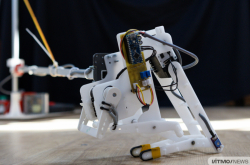

There are three areas of research: first, we detect and recognize human and animal emotions; then we interpret them using machine learning and big data analysis; and finally, we synthesize emotions in robotic systems. The simplest examples are humanoid robots, but we also synthesize emotions in chatbots and virtual assistants. Emotional AI makes users’ interaction with the system more enjoyable.

In one of your lectures, you mentioned collecting biological data for emotional AI and using bioneuro detectors to transmit neuro data. What biological data do you need to collect in order to see people’s emotions? Pulse and blood pressure?

Pulse, blood pressure, heart rhythms, brain activity: all these things are collectively called multimodal data, which means that there are several sources of information. Multimodal data improves the quality of emotion interpretation, as different types of perception are responsible for different emotions. For example, visual perception is responsible for fear responses. It sometimes happens that there is no real danger, but your brain overinterprets something and assumes that there is a risk. In fact, people often suffer negative emotions just because they can’t control themselves and don’t know how to manage their stress. There is also a concept called biological feedback (biofeedback): it helps us visualize biological data and thus gives people a way to control them.
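As a rough illustration of what combining "several sources of information" can look like, here is a minimal sketch of a weighted average over per-modality scores (often called late fusion). The `Reading` type, the `arousal` score, and the weights are hypothetical and are not taken from the interview; real systems are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    modality: str        # e.g. "pulse", "blood_pressure", "brain_activity"
    arousal: float       # hypothetical per-modality score in [0, 1]
    weight: float = 1.0  # hypothetical trust placed in this modality


def fuse(readings):
    """Combine per-modality scores into one estimate via a weighted average."""
    total_weight = sum(r.weight for r in readings)
    if total_weight == 0:
        raise ValueError("need at least one reading with non-zero weight")
    return sum(r.arousal * r.weight for r in readings) / total_weight


# Illustrative values only, not measured data.
readings = [
    Reading("pulse", 0.8),
    Reading("blood_pressure", 0.6),
    Reading("brain_activity", 0.4, weight=2.0),
]
fused = fuse(readings)
```

A weighted average is only one possible design: it keeps each source independent, so a missing or noisy modality can simply be dropped or down-weighted.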

Is it really possible to control your pulse, for example?

Yes, it is possible. When your heart rate goes up, you need to concentrate on lowering it. Visualization helps your brain adapt more quickly. Biofeedback techniques are also used to fight insomnia: calm music helps you fall asleep.

Can you really detect people’s emotions from their biodata?

Yes. There is a classification recognized worldwide that is made up of eight basic emotions, and there is a lot of research on how to detect, control and synthesize these basic emotions.

So, there are new research areas emerging that focus on the relationship between emotions and biological data?

First of all, there is the already established field of cognitive science, which includes the concept of emotional intelligence. But it was initially based on psychology and was therefore very subjective. Now that we have new technologies for collecting biodata, we have tools that allow us to analyze a person's condition more accurately. This is neurophysiology, a new branch of cognitive science.

How much data have you collected on the connection between biological data and emotions so far?

We have averaged data, but we are not happy with that. In Western countries, they want to make these data more personalized, as we are all different. Everybody has their own biorhythms: some people are early birds while others are clearly night owls. The more data about yourself you collect and analyze, the better you can control your behavior and the more productive you can be.
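To make the difference between averaged and personalized data concrete, here is a small sketch that scores a new reading against a person's own history rather than against a population average. Using resting heart rate as the signal, the sample values, and the `personal_z_score` helper are all assumptions made for illustration.

```python
from statistics import mean, stdev


def personal_z_score(history, current):
    """How unusual `current` is relative to this person's own past readings."""
    if len(history) < 2:
        raise ValueError("need at least two past readings")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return 0.0  # no variation recorded, so nothing stands out
    return (current - mu) / sigma


# Hypothetical resting heart rates (beats per minute) for two people.
early_bird_history = [58, 60, 62, 60, 60]
night_owl_history = [78, 80, 82, 80, 80]

# The same reading of 70 bpm matches an assumed population average exactly,
# yet it is unusually high for one person and unusually low for the other.
z_early_bird = personal_z_score(early_bird_history, 70)
z_night_owl = personal_z_score(night_owl_history, 70)
```

Against a single population average, both readings would look unremarkable; the per-person baseline is what exposes the difference.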

So, let’s suppose that I’m sitting at the office and my biodata device says that my efficiency level is very low at the moment. Does it mean that I’d better drink a cup of tea?

Everyone has periods of concentration and relaxation. Google has long offered its employees the chance to take part in corporate meditation programs. The company understands that employees are much more productive when their work environment is relaxed and comfortable.

You have already given an example about the moderator of a meeting seeing that a person’s concentration level is very low and removing them from the discussion. Is it fair? Doesn’t that mean depriving people of their privacy?

I was talking about work, that's all. If the moderator of a discussion sees that some members have a concentration level below the threshold, this means they are not productive. You won’t get anything from them. You can concentrate on those ready to work instead. This saves time. Also, some people are night owls by nature and simply don’t feel productive at 9 am. That’s why many IT companies offer their employees flextime.

You’ve also touched on the topic of robots simulating emotions. It is widely believed that emotions and meaningful interactions are something that humans will always be better at than robots. Experts say that workers with good communication skills will be the most sought-after as the labor market changes. But if we teach robots to perform these tasks, will people lose this advantage?

According to Alexey Potapov, a professor at ITMO University’s Department of Computational Photonics and Videomatics, AI in its current state is limited and weak, and we’ll see the real thing only in 15-20 years. Japan is the absolute leader in the field of emotional AI. For instance, it is actively used in elderly care: caretakers are human, so they get tired and distracted and need rest. A robot doesn’t.

In Japan, humanoid robots are evolving very rapidly. Robots are in demand there as Japan is a closed country and many people find it easier to communicate with a system than with each other. However, creating humanoid robots inevitably leads to some ethical dilemmas, for example, the question of human-robot relationships. There’s a recent YouTube video where a human is beating up a robot. After much uproar, it turned out that this was an experiment conducted by Japanese researchers who wanted to see people’s reactions and analyze their thoughts on how robots should be treated. Should we see robots as equal beings?

This question of the ethical dilemmas and risks associated with emotional AI is very pertinent. For some people, over-communicating with artificial intelligence units may cause some sort of shock or trauma, as demonstrated in the movie ‘Her’, for example. What are your views on the matter?

It’s important to test different kinds of emotional AI in different sociocultural groups and countries. You can’t just take a robot made for a Japanese audience and expect it to click with other Asian societies, let alone with those in the US and Europe. Moreover, people from different age groups experience AI differently, so more research is needed. There is ongoing research on using humanoid robots in education. Scientists from the Kurchatov National Research Center, led by Neurocognitive Technologies Department head Artemiy Kotov, have already created a robot for teaching programming in schools. School students took part in the project: they wrote a program which ‘cloned’ their teacher by mimicking the way she spoke and interacted. So what the kids saw was a robot, but what they were hearing was their teacher. How could we interpret their complex perceptions? As of now, there’s little research in this area. Small-group trials of robotic systems just don’t give enough evidence to advance it.

ITMO University recently held a conference on emotional AI, which was attended by many leading specialists in the field. Which market trends in the field of AI should we be on the lookout for? What problems are AI developers currently working on?

The field of emotion detection and recognition technologies is pretty well mapped out; we just need to keep expanding and improving our toolbox. What’s more topical is the question of analyzing and interpreting the collected multimodal data. And I don’t just mean human emotions; animals are sentient beings too. For example, if a cow experiences stress, it will give less milk. We’ve already spoken about workers’ efficiency; livestock is a whole other economic concern. We also need more tools for collecting big data, as it forms the foundation for developing high-quality multimodal technologies. Special platform solutions are being created to advance this cause; one of the leading Russian companies in this field is Neurodata Lab LLC. Although it is based in Moscow, it has already branched out to the US and European markets. This shows that emotional AI is in high demand all over the world. Another new research area is niche machine learning technologies that are used in specific fields such as speech recognition or human behavior analysis.

Is Russia up there with the world leaders in the development of emotional AI technologies?

If we take a look at the number of relevant technologies patented per country, the US is the leader in the field. Moreover, the number of patented emotional AI technologies is growing exponentially, and markets with such growth rates look really attractive to investors. So other countries should take this into account when deciding which technologies to encourage. For example, in Russia, the ‘Robopravo’ research center publishes monthly digests on trends in the development of robotic systems, AI, and related technologies.

Is emotional AI being researched at ITMO University?

ITMO University already offers a Bachelor’s program in Neurotechnologies and Programming, and this year we’re launching a new Master’s program in Neurotechnologies and Software Engineering (available in Russian). In addition, we’ve developed a learning trajectory for school students which introduces them to neurotechnologies even before they come to ITMO. We also plan to create a similar retraining program for adults. Nearly 5,000 job options disappear from the labor market each year as they become obsolete, so opening people up to new in-demand careers is very important.

Moreover, ITMO University has recently won the Ministry of Education and Science’s National Technology Initiative grant in the field of cognitive technologies and machine learning, which will support our research projects. We hope that both our academic staff and our students will join these endeavors.