Soft Skills 2021

New Apple M1 Chip: Is RISC Worth It?

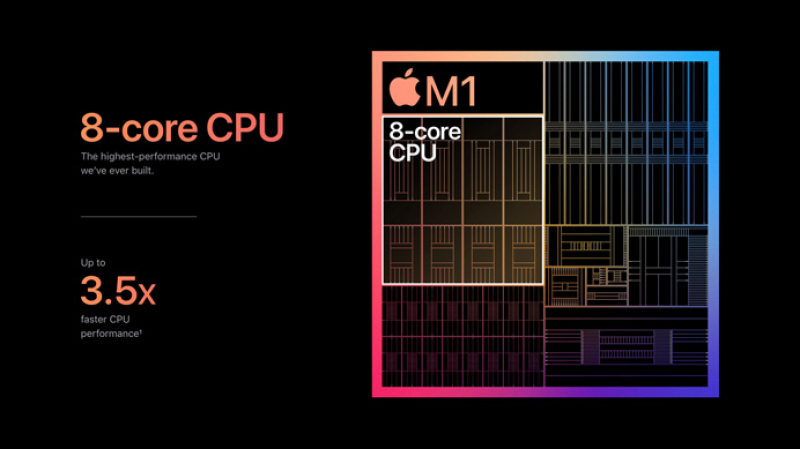

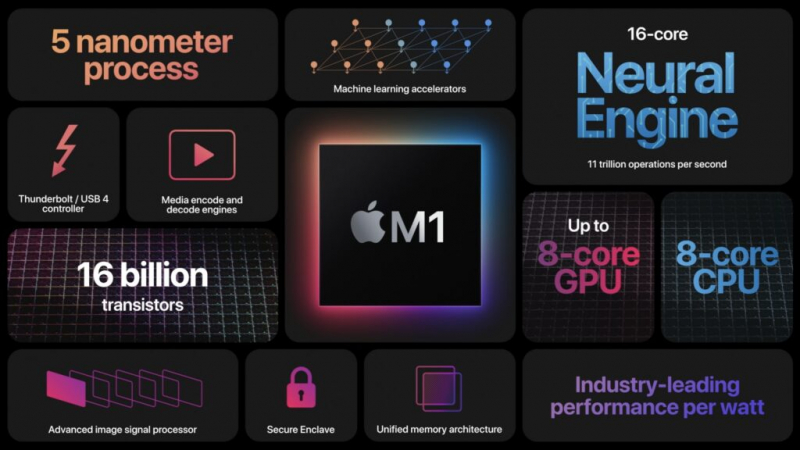

On November 10, 2020, Apple introduced the M1, its new ARM-based chip. We all know that the ARM CPU architecture dominates the mobile market, and Apple already has a powerful line of SoC processors called Apple Silicon (e.g. A14, A13, and so on). So, what is so new about this one? Well, this chip is for the new MacBook, whereas previous MacBooks used the x86-64 CPU architecture. This is where things become much more interesting. Servers typically use a variety of architectures, including POWER, ARM, amd64 (x86-64), IA-64, and so on. On the other hand, the x86-64 architecture completely dominates the desktop segment, while low-power devices such as smartphones use ARM (although you can find amd64-based tablets in stores). Can Apple change the balance by bringing an ARM-powered laptop to the market?

History of the RISC concept

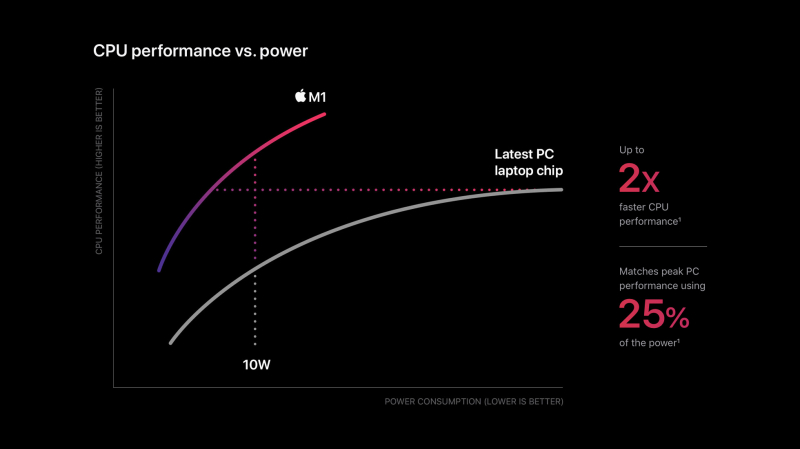

RISC stands for reduced instruction set computer. The idea was developed in the 80s at research institutions (Berkeley and Stanford). Keep in mind that there are a lot of different architectures under the RISC label, e.g. ARM, SPARC, and RISC-V. Don’t be misled by the name, however: at least today, "reduced" does not mean a small instruction set. Instead, it implies that the CPU can handle a single instruction in a few memory cycles or even one (unlike a complex instruction set computer, CISC). Erik Engheim wrote quite a good article explaining the difference between CISC and RISC. Another article which is more user-friendly may be found here. Instruction sets may still contain very sophisticated operations.

In the RISC architecture, memory access and arithmetic operations are divided into two distinct categories (the so-called load-store architecture), unlike CISC with its register-memory architecture. In the first approach, the CPU can do arithmetic operations only between registers, while in the second approach the CPU can also do operations between a register and memory. Thus, RISC CPUs have a simpler internal design: fixed-length instructions, a relatively simple pipeline and decoder, etc. The fewer transistors you have, the lower the energy consumption, heat, and so on. On the other hand, you can spend the "extra" transistors on registers, arithmetic units, a GPU, or CPU cores. According to Wikipedia, "the focus on 'reduced instructions' led to the resulting machine being called a 'reduced instruction set computer' (RISC). The goal was to make instructions so simple that they could easily be pipelined, in order to achieve a single clock throughput at high frequencies."
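To make the load-store versus register-memory distinction more concrete, here is a minimal sketch of two toy machines computing `a = b + c`, with all three values living in memory. The mnemonics (`LOAD`, `STORE`, `ADD`, `MOV_RM`, `MOV_MR`, `ADD_RM`) and the function names are invented for illustration; they are not real ARM or x86-64 syntax.

```python
# Toy model only: mnemonics and machine behaviour are illustrative assumptions,
# not real ARM or x86-64 instructions.

def run_load_store(program, memory):
    """Load-store machine: arithmetic works on registers only."""
    regs = {}
    for op, *args in program:
        if op == "LOAD":            # LOAD reg, addr: memory -> register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "STORE":         # STORE addr, reg: register -> memory
            addr, reg = args
            memory[addr] = regs[reg]
        elif op == "ADD":           # ADD dst, src1, src2: registers only
            dst, src1, src2 = args
            regs[dst] = regs[src1] + regs[src2]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


def run_register_memory(program, memory):
    """Register-memory machine: ADD may take one operand straight from memory."""
    regs = {}
    for op, *args in program:
        if op == "MOV_RM":          # MOV reg, [addr]: memory -> register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "MOV_MR":        # MOV [addr], reg: register -> memory
            addr, reg = args
            memory[addr] = regs[reg]
        elif op == "ADD_RM":        # ADD reg, [addr]: register += memory
            reg, addr = args
            regs[reg] += memory[addr]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


# a = b + c on the load-store machine: two loads, one add, one store.
LOAD_STORE_PROGRAM = [
    ("LOAD", "r1", "b"),
    ("LOAD", "r2", "c"),
    ("ADD", "r3", "r1", "r2"),
    ("STORE", "a", "r3"),
]

# a = b + c on the register-memory machine: the add reads c directly from memory.
REGISTER_MEMORY_PROGRAM = [
    ("MOV_RM", "r1", "b"),
    ("ADD_RM", "r1", "c"),
    ("MOV_MR", "a", "r1"),
]
```

Both programs leave the same value in `a`, but the load-store version needs four instructions where the register-memory version needs three. In exchange, each load-store instruction does only one kind of work, which is what keeps the decoder and pipeline simple.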

RISC vs. CISC

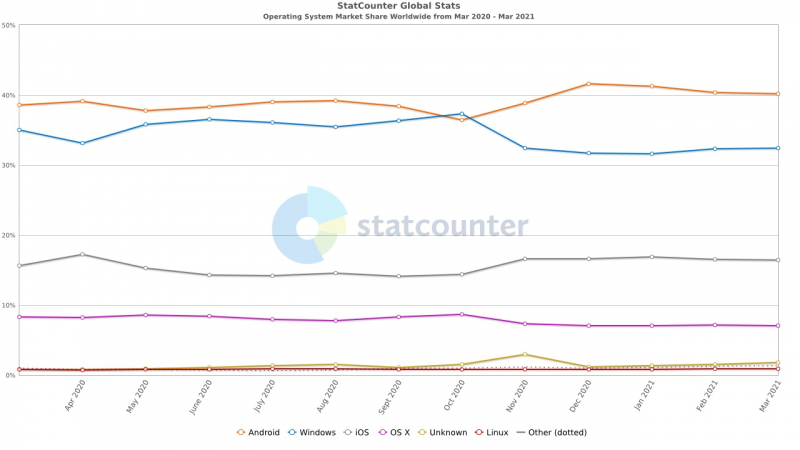

An obvious question comes to mind: if RISC is dominating mobile and embedded devices and if even supercomputers use ARM architecture, why do we still use amd64 on desktops? Considering that the new MacBook is not the first ARM-based laptop, is it all about legacy?

There are two different questions we have to ask. First, can RISC, and ARM in particular, beat amd64 on the desktop as an architecture? Second, can Apple not only compete in the laptop market with its brand-new chip but also bring the new architecture to the desktop outside its own ecosystem?

Answering the first question, we have to keep in mind that today CISC is represented almost exclusively by x86-64, which is Intel IP, while RISC is represented by a variety of different ISAs (instruction set architectures). If we look at the history of these architectures, we will see that each camp borrowed ideas from the other (easily or not), and today the difference between the two philosophies is a little blurry. Here is an extensive (though old) article by Paul DeMone about why this difference still matters. In a nutshell, RISC came with its own killer feature: the pipeline. CISC folks also wanted this feature because of its astonishing performance improvements, but variable-length instructions combined poorly with the idea. So engineers came up with a solution: split one huge instruction into several little ones. After this, they designed so-called hyper-threading, or SMT, to get more out of pipelines. Of course, RISC-based designs liked this idea and incorporated it, too.
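To see why pipelining was such a killer feature, here is a rough back-of-the-envelope sketch. It assumes an idealized pipeline in which every stage takes exactly one clock cycle and there are no stalls, hazards, or branch mispredictions; real CPUs fall short of this. The function names are made up for the example.

```python
def cycles_unpipelined(n_instructions, n_stages):
    """Each instruction passes through all stages before the next one starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions, n_stages):
    """Ideal pipeline: fill it once, then one instruction completes per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + n_instructions - 1


def ideal_speedup(n_instructions, n_stages):
    """Upper bound on the gain; approaches n_stages for long instruction streams."""
    return (cycles_unpipelined(n_instructions, n_stages)
            / cycles_pipelined(n_instructions, n_stages))
```

For 1000 instructions on a 5-stage pipeline, this model gives 5000 cycles without pipelining versus 1004 with it, so the speedup is just under 5x. Simple fixed-length instructions matter because they keep every stage short and uniform.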

Some people say that this is almost game over for AMD and Intel. Or, at least, that these two big corporations will face hard times, especially Intel. Architectural downsides come from the upsides. CISC is relatively old and has problems with variable-length instructions, which make things much more complicated. Also, x86-64 is Intel’s property, and it is not easy to start manufacturing compatible chips. On the other hand, RISC suffers from lower code density, which means that for the same operation a RISC-based CPU needs more instructions. More instructions mean more memory consumption. Yes, engineers handle this problem with more registers and instruction packing, but nothing comes for free. More registers mean a much more complicated task for the compiler, because to achieve high performance it has to use as many registers as possible in an optimal way. This task (optimal register allocation) is NP-complete. Luckily, there are suboptimal ways to do it. Still, RISC relies much more on compilers. Moreover, there is still no single RISC-based CPU that can compete with current desktop CPUs.
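As an illustration of such a suboptimal approach, register allocation is commonly modelled as graph coloring: variables that are live at the same time "interfere" and must not share a register. The sketch below is a simple greedy heuristic written for this article, not the algorithm of any particular compiler; the function name, the input format, and the spill handling are all assumptions.

```python
def allocate_registers(interference, num_registers):
    """Greedy graph coloring over an interference graph.

    interference: dict mapping each variable to the set of variables
                  that are live at the same time (symmetric).
    Returns (assignment, spilled): a variable -> register index map, plus
    the variables that did not fit and would have to live in memory.
    """
    assignment = {}
    spilled = []
    # Heuristic: handle the most constrained variables first.
    # Ties are broken by name so the result is deterministic.
    order = sorted(interference, key=lambda v: (-len(interference[v]), v))
    for var in order:
        # Registers already used by neighbours are off limits.
        taken = {assignment[n] for n in interference[var] if n in assignment}
        free = [r for r in range(num_registers) if r not in taken]
        if free:
            assignment[var] = free[0]
        else:
            spilled.append(var)
    return assignment, spilled


# Hypothetical example: a, b, c are all live together; d overlaps only with c.
EXAMPLE_GRAPH = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
}
```

With three registers, every variable in `EXAMPLE_GRAPH` gets a register. With only two, one variable has to be spilled to memory, which costs extra loads and stores. The greedy order does not guarantee the minimum number of spills, but it runs quickly, and that is the trade-off a compiler accepts here.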

To summarize: things are definitely changing. The first manufacturer (Nvidia?) to bring a decent CPU to market will be the new king. My favourite story is that Intel was the leader in the desktop amd64 race, but since the release of AMD’s Ryzen series Intel has had to chase its old competitor. With just one CPU series, AMD took the lead in both the desktop and laptop markets. But the transition for Intel will be relatively slow and painful, if there is one at all.

Answering the second question, I have no expertise to doubt Apple's decision. It was a precisely calculated step. Now that Apple's processor lines are unified, it no longer has to depend on Intel. Looks like a win. Apple also has experience with non-x86 ISAs. However, the new MacBook’s performance is not so great, and there are plenty of problems with hardware and software compatibility, multi-monitor setups, controllers, and RAM capacity. Considering how much money has been invested in amd64, and in Intel in particular, I suppose that if Apple and other major companies keep up their research and development, M1 and ARM have a bright future.

Good ol' desktop

In the 2000s, personal computers were the jack of all trades. People used them for everything. In the 2010s, smartphones and tablets arrived and completely changed the environment. Now you can do all your usual tasks with a phone or a tablet. Like video killed the radio star, smartphones "killed" PCs. Now personal computers are used primarily for gaming or compute-intensive tasks like video/audio processing. And even there, PCs compete with consoles for the first task and with cloud solutions for the second.

In 2020, Nvidia announced a deal to acquire Arm. And here comes the question: what for? For desktop solutions? Or for servers, clouds, GPUs, and AI? I believe it is the second. Today, desktops are not so attractive for major players like Microsoft, Apple, and Nvidia. Why buy a computer if you can go for the cloud? Or you can go for an ARM-based tablet or even a laptop. Unlike 20 years ago, Intel competes not only with AMD, but also with Apple, Google, ASUS, Samsung, and even Amazon with its cloud. They all provide solutions for your problems. The market has changed dramatically.

However, I suppose there will always be room for a high-performance home workstation, but what will it be? Will we end up with AMD and Intel remaining the two main competitors, or will new players enter the market? Or will they fall into oblivion, just like IBM, which focused on mainframes? Time will tell, but for now investing in the ARM architecture definitely seems worth it.

Written by Egor Masin