The age of nanotechnology

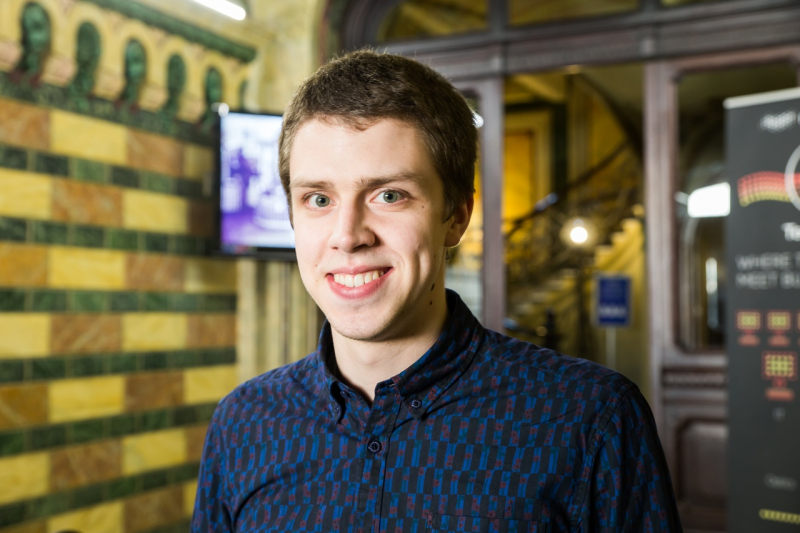

George Zograf spoke about the achievements of ITMO University’s Laboratory of Hybrid Nanophotonics and Optoelectronics, where he is a staff member, including special coils that shorten MRI scans threefold and high-efficiency solar panels. He also explained that researchers at the laboratory experiment with projects at the intersection of physics, nanophotonics, and biology, such as those focused on targeted drug delivery. Some of the projects even resemble concepts straight out of the Avengers movies.

“For instance, we have a researcher (Filipp Komissarenko) who knows how to move nanoparticles and modify structural networks quite literally by hand – with nanotweezers. This isn’t on the atomic scale, of course (nanoparticles are dozens of times larger), but still incredibly cool. It’s not Tony Stark’s robotic suit that transforms on the molecular scale, but pretty close,” says George Zograf.

Simply put, nanotechnologies are now practically everywhere, which is why we should see them not as a science, but a tool, notes the researcher. Just like the stone, bronze, and iron ages, today’s era could rightfully be considered the nanotechnology age.

As for these technologies’ level of development, it’s worth noting that even today, people are already using them for entertainment – just take the world’s first nanocar race. But things shown in the movies, such as nano-scale bots that travel inside the human body and regenerate damaged tissue, don’t exist and probably never will.

Should we fear the further development of these technologies? The question of ethics remains open. The field has been active for only the past 20 to 30 years, but where will it be in 50 or 100 years? How will nanotechnologies affect our bodies in the long run? After all, these microscopic particles and structures can pass through living tissue and cells, accumulate in the human body, and potentially harm our health.

There is also the question of how such a powerful technology is used; according to George Zograf, the situation is similar to what happened after the discovery of nuclear reactions. On the one hand, nuclear technology could be a powerful energy source; on the other, it could wipe an entire city off the face of the Earth. Take the story of He Jiankui, the Chinese scientist who was able to edit the human genome. That story, too, is essentially one of nanotechnology, as gene editing occurs at the same scale. On the one hand, it’s a huge scientific breakthrough; on the other, it raises a crucial ethical question: do humans have the right to control what is part of nature?

Our AI overlords

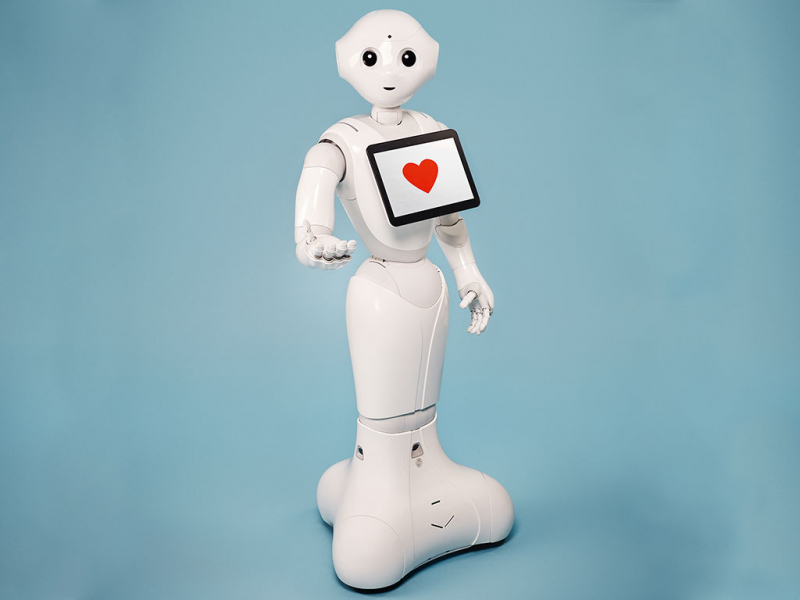

Andrey Filchenkov believes that all our fears of robots stem from a simple misunderstanding of AI and a phobia of all things unknown.

“Artificial intelligence is a term that no one can define. When people talk about an AI that would conquer the world, they imagine something like a human, but an artificial one. The real thing is not even close. Today, AI always stands for machine learning – software capable of solving specific, quite niche, and precisely outlined tasks. And these are very far from the complex tasks, often difficult to even formulate, which we humans deal with regularly,” explains Andrey Filchenkov.

Simply put, robots won’t take over the world or exterminate humanity, at least in the near future – unless that is their creators’ intention.

To conquer something, one needs motivation. It’s not just that nobody gives robots such objectives; humanity is still very far from being able to grant robots the ability to set their own goals, let alone the mechanisms of motivation. In humans, motivation is controlled by hormones. Robots don’t have a physiology, and giving them something akin to hormones is not only pointless, but also impossible. Robots can’t want, and that’s their big limitation: they can only understand commands and work towards an objective. Yes, the objectives can be difficult: today, you can ask a robot to suggest a movie or vacuum the house; some day, you’ll be able to ask one to write your thesis.

One commonly cited example of the danger and potential animosity of AI is the story of Tay, Microsoft’s AI bot, which became a staunch racist and antifeminist in just a single day. But it should be noted that Tay simply generated tweets without truly understanding them. There wasn’t anything in its “mind”; it simply put words together. The recent story about Facebook bots inventing their own language is also far from an example of self-aware AI. This is an error of attribution: we perceive the bots as sentient agents making their own decisions. In truth, they had a specific goal: to solve communicative tasks in the simplest way possible. In essence, they didn’t even need a language, and it could be said they didn’t have one, only a sequence of symbols.

On that note, humanity has come up with a great number of tests of “intelligence” for AI. The first and most well-known is the Turing test. It’s been criticized and challenged plenty of times, but the most interesting critique so far is John Searle’s “Chinese room” thought experiment from 1980. Today, dozens of new criteria have been proposed to replace the Turing test: one, for instance, says that an AI should be able not only to understand how a coffee machine works by reading the manual, but also to purposefully brew itself a cup. Another says that it should be able to enroll in a university and successfully graduate.

The biggest issue is that nobody really knows what consciousness and intelligence are, or how they work. The question is an inexhaustible source of topics for philosophy theses. Meanwhile, the Turing test has seemingly been passed by a chatbot pretending to be a 13-year-old Ukrainian boy; but this case has provoked countless debates and questions, and the scientific community has reached no consensus.