TAU Tracker: a user-friendly VR without gamepads and controllers

The problem with modern gamepads is that they aren’t really suitable for working with VR objects. It takes a lot of time to learn to use them, and the result isn’t particularly rewarding, as you can’t achieve full immersion in the game. To solve this problem, the TAU Tracker startup developed a new motion tracking technology. The team is currently working on an interface that lets users interact with 3D objects using special gloves. With these gloves, you can control 3D objects with just your hands, which you see on the helmet’s screen.

What makes TAU Tracker’s product truly unique is that it is based on the company’s own method of determining the location of objects. According to the company’s representatives, sensors are placed both on the user’s body and on the surrounding objects, and the system uses the information gathered by these sensors to locate the objects. This data is then transmitted to the visualization system.

The developers note that the technology doesn’t require cameras, has no blind spots, doesn’t accumulate errors, and is very user-friendly, which makes it easier for players to immerse themselves in the game. The company has already presented a prototype of the device for two hands, and in 2019 it plans to develop a full-body version.

Collaboration with ITMO University

Last year, TAU Tracker presented its development at several major tech festivals and exhibitions, including Science Fest in St. Petersburg. That was where the startup team and the company’s director Iliya Kotov met ITMO University researchers, says Andrey Karsakov, a senior lecturer at ITMO’s Institute of Design & Urban Studies, and that’s how this fruitful collaboration started. Back then, TAU Tracker already had a prototype of its device. What the team lacked was a way to properly present its achievement to potential customers. ITMO University representatives, in turn, had never worked with motion capture gloves before and were excited about the chance.

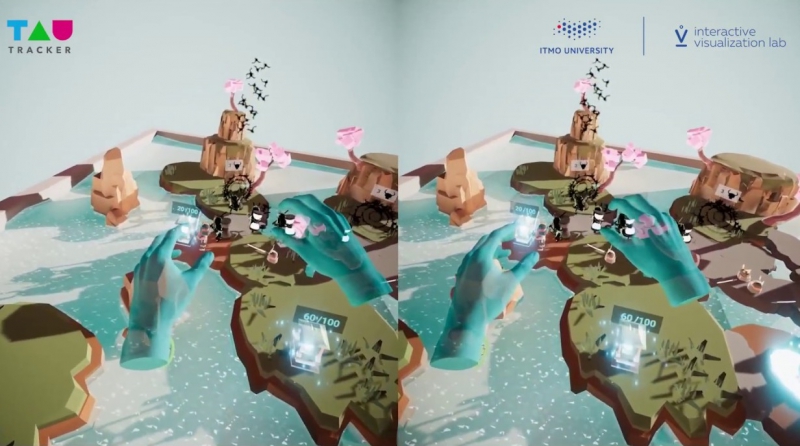

“When thinking about how to present the technology to the audience, we decided that the main task was to demonstrate that the gloves can track all the movements you make with your hands, that is, you can use certain gestures to interact with virtual objects. Eventually, we came up with a very logical idea: there was a game called Black and White created by Peter Molyneux. In this game, you actually played god: using your computer mouse, you could move characters who lived on an island, throw stones, and so on. We replaced the mouse with our gloves and that’s what we got: now you can throw stones with your bare hands. This is very fast and visual. That’s how we came up with our ‘god simulator’ game,” shares Andrey Karsakov.

Shadow Samurai

Then, the island and its inhabitants transformed into fortresses with knights, which eventually turned into samurai.

The plot revolves around an island packed with Yin energy altars, which are sought after by dark samurai who arrive through portals. The task is to protect the energy and destroy the portals.

“To start playing, a user puts on the gloves and a VR helmet. What is special about this game is that it can recognize players’ gestures. For example, players have to use their hands to pick up and arrange their fighters in the most advantageous positions to ensure that the altars are protected in the best way possible. But if you’re not gentle enough when placing your virtual soldiers, you risk crushing them, and this is detected via the technology’s gesture identifier. Another example of using gestures in the game is that when a player gives their team a thumbs up, it makes light samurai warriors happy and gives their health a boost. To close a portal, you have to catch the souls of the vanquished dark samurai with your hands and throw them into the portal,” explains Alexander Khoroshavin, one of the main developers of the game and the person responsible for the project’s game design and art direction.

Most of the difficulties the game’s developers faced were caused by the lack of ready-made, universal solutions for working with gestures. So they had to build their own system that could reconstruct the hand, with all of its complex elements and their natural movement, from the points the technology was capable of recognizing, and then work with this hand’s gestures.

“We worked with a technology that was quite ‘raw’ in the sense that it’s still in active development, and that affected our approach to development. But in the end, Alexander Gutritz, a second-year student of our Master’s program, designed a system that, using six points, whatever gesture-tracking device they come from, can reconstruct the biomechanics of the whole hand. We built on that development to create a system that lets you record and customize gestures for interacting with the game environment. This means that now you can write a set of gestures into the game engine and assign them to specific actions,” says Andrey Karsakov. “What we achieved is a pretty much universal system that works equally well with TAU, Leap Motion, and, in the future, with the Magic Leap SDK, which is also capable of gesture recognition. Perhaps we’ll even release this set of tools for the whole developer community to use.”
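The idea of recording gestures and assigning them to game actions can be illustrated with a short sketch. This is not the team’s system: the article does not describe its internals, so everything here is an assumption made for illustration. A hand pose is represented by a hypothetical list of five finger-curl values (0.0 for a straight finger, 1.0 for a fully bent one), a gesture template is the average of a few recorded samples, and a live pose matches a gesture when it lies within a chosen distance of the template. The names `GestureRegistry`, `record`, `match`, and `dispatch` are invented for this example.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence

# Hypothetical pose format: one curl value per finger (thumb first),
# 0.0 = straight, 1.0 = fully bent. A real tracker would supply richer data.
Pose = Sequence[float]


@dataclass
class Gesture:
    name: str
    template: List[float]        # averaged pose recorded for this gesture
    action: Callable[[], str]    # game action triggered by the gesture


class GestureRegistry:
    """Stores recorded gestures and maps incoming poses to actions."""

    def __init__(self, tolerance: float = 0.35) -> None:
        # Maximum Euclidean distance at which a pose still counts as a match
        # (an arbitrary value chosen for this sketch).
        self.tolerance = tolerance
        self._gestures: Dict[str, Gesture] = {}

    def record(self, name: str, samples: Sequence[Pose],
               action: Callable[[], str]) -> None:
        """Average several sample poses into a template and bind an action."""
        count = len(samples)
        template = [sum(sample[i] for sample in samples) / count
                    for i in range(len(samples[0]))]
        self._gestures[name] = Gesture(name, template, action)

    def match(self, pose: Pose) -> Optional[Gesture]:
        """Return the closest recorded gesture within tolerance, if any."""
        best: Optional[Gesture] = None
        best_distance = self.tolerance
        for gesture in self._gestures.values():
            distance = math.dist(gesture.template, pose)
            if distance <= best_distance:
                best, best_distance = gesture, distance
        return best

    def dispatch(self, pose: Pose) -> Optional[str]:
        """Run the action bound to the matching gesture, or return None."""
        gesture = self.match(pose)
        return gesture.action() if gesture else None


registry = GestureRegistry()
registry.record(
    "thumbs_up",
    [[0.0, 1.0, 1.0, 1.0, 1.0], [0.1, 0.9, 1.0, 1.0, 0.9]],
    lambda: "boost_health",
)
registry.record("open_hand", [[0.0, 0.0, 0.0, 0.0, 0.0]], lambda: "release")

result = registry.dispatch([0.1, 1.0, 0.9, 1.0, 1.0])  # close to thumbs_up
```

Because matching works on a device-independent pose rather than on raw sensor output, the same registry could in principle sit behind any tracker that can be converted to that pose format, which is the kind of universality the quote describes.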

To demonstrate the technology’s strengths, a demo level of the game has already been presented to the community and the wider public at CES, the world’s largest consumer electronics exhibition, which was recently held in Las Vegas. But users can’t yet experience the game as its developers envisaged it, as TAU Tracker is still in development, notes Andrey Karsakov. The team is currently discussing how to expand the game, possibly by including other technologies and platforms. The game could be developed in two formats. In the first, users interact with the game environment with their hands, as per the initial plan; in the second, users control the characters via controllers and play, for example, in mobile VR. But the latter might be launched as an exclusive addition to the TAU Tracker gloves set.

The future of the technology

In the near future, the creators of TAU Tracker intend to use the technology in collaborations with developers of b2b solutions rather than pushing it to the consumer market. That way, TAU Tracker could be integrated into training simulators, allowing for the safe and effective training of staff who have to work in complex situations with many health risks, for example in large-scale manufacturing or surgical operations.

The company also notes that the technology can be applied in developing solutions for post-stroke and trauma recovery, as it’s capable of monitoring a patient’s fine motor skills during various recovery exercises, as well as tracking the development of a prosthetic device’s neural network.

Andrey Karsakov agrees that, as of now, VR is more relevant to large industry players than to regular consumers.

“Even at CES, it was clear that the near future of VR lies not in the consumer market but in the b2b sector, in the format of specialized apps for enterprises,” he points out. “The closest TAU Tracker could get to the consumer is VR park booths, which have seen active development in the past couple of years. The CES conference allowed us to establish useful contacts with specialists working in this field, so we’ll work on that. In general, though, there are lots and lots of ways this solution could be developed. For one, it has great potential in medicine, where it’s crucial for the technology to convey the subtle mechanics of the hands and to ensure high-quality tracking of movements, for example in surgeons’ training.”

You have the opportunity to experience this technology and play Shadow Samurai at ITMO’s Open Science event. Held on February 8, it will present state-of-the-art developments by ITMO students and researchers.