Evolutionary algorithms

Evolutionary algorithms are metaheuristic search methods often used for solving complex optimization tasks that cannot be solved with other methods. They are based on metaphors deriving from biological evolution: as in nature, in evolutionary algorithms populations made up of a multitude of species develop over the course of several generations, subject to the laws of natural selection based on the principle of the survival of the fittest as formulated by Charles Darwin.

Their applications

Evolutionary algorithms are used to solve a wide spectrum of problems, including the creation of schedules, layout tasks, synthesis and optimization of programs, construction of complex-shaped objects and many more.

Key terminology

Suppose you have a task for which you can find a multitude of solutions. Each solution corresponds to a certain value of an objective function that needs to be optimized (for example, maximized or minimized). The algorithm can calculate this function, choose the best solutions, create new ones on the basis of the latter and repeat this process until a satisfactory solution has been found.

If we were to describe this method with definitions from Charles Darwin’s On the Origin of Species, then a solution to a problem is a “species”, a set of solutions is a “population”, an objective function is a “fitness function” (which determines how well a species adapts to its environment). In addition to these, an evolutionary algorithm also necessitates a “mutation operator” that matches with an introduction of random mutations into the genome of a species. Often an algorithm also includes a “crossing operator” that combines the individual qualities of several parental animals as found in their descendants, whilst “natural selection” is represented by various “selection operators”. You can read more about evolutionary algorithms in this and this ITMO.NEWS articles.

We have talked with Maksim Buzdalov, head of ITMO’s Evolutionary Computation laboratory, and Arina Buzdalova, research associate at the Information Technologies and Programming Faculty, to learn about the key research in the field of evolutionary computation, the GECCO conference and ITMO scientists’ work presented at the latter.

ITMO University scientists are regular participants of the GECCO conference. What makes it different from others?

Maksim: On the whole, there are three significant scientific events organized in the field of evolutionary computation. Apart from GECCO, these are IEEE Congress on Evolutionary Computation (CEC) and Parallel Problem Solving from Nature.

What makes the GECCO conference special is its very high quality of reviewing and its scale. One of its features is the strict adherence to the report schedule at the sections. Why is this important? Quite a lot of specialists attend the conference not by sections, but by the reports most interesting to them. At GECCO, this is normal practice because such a clear timing gives you enough time to listen to an interesting report, ask questions and catch the next presentation even if it’s given in a different room. Here, it’s considered normal when you don’t sit through one section from the beginning to end but rather pick and choose what you like.

Arina: This also increases motivation: people attend exactly the sections and reports they’re interested in. But apart from this, GECCO gives you an opportunity to build interesting collaborations. Someone could be engaged in one field, for example, the application of evolutionary computation in games, someone could be engaged in theory, and suddenly they decide to collaborate, do something together. It's really great. Such intercrossing of different research directions allows for the creation of an environment in which interesting projects are born.

Among the speakers of this year’s conference was Ingo Rechenberg, a pioneer in the field of evolutionary computation and artificial evolution. He invented a set of optimization methods known as evolutionary strategies (the term was originally proposed in German, Evolutionsstrategie). Another interesting fact about Ingo Rechenberg is the acrobat spider Cebrennus rechenbergi, which lives in the Namib Desert, is named after him. Inspired by the spider’s movement pattern which is comprised by a series of continuous flips, Prof. Rechenberg created a robot in its likeness. The scientist plans to use this development for the exploration of Mars. Interestingly, Rechenberg is still an active scientist and travels a lot.

Which topical trends did the conference participants focus on this year? And, speaking more broadly, what are the main current trends in your field?

Arina: I would single out two different directions towards which the field is developing. Firstly, speaking about the conference, I’ve noticed that there have been more reports related to the use of evolutionary computation in deep learning and reinforcement learning, in deep reinforcement learning in particular. I think that this is a general trend at the current stage of technology development, so this couldn’t have passed us by. Evolutionary computation is also used to generate the structure of neural networks.

Maksim: For example, the first plenary report concluded that both deep learning and different branches of genetic programming can be considered as points on a single continuum of similar methodologies. And one of the progressive ways of thinking is to not distinguish between them.

Of course, on the one hand we understand that there are large amounts of data, that there are different neural network architectures, everyone looks at Google with admiration… On the other hand, there is genetic programming in which there is usually less data but new structures capable of glueing software together and consequently growing them into something bigger are invented almost on a yearly basis. Thus, we can see a certain shift towards not trying to distance ourselves from machine learning, deep reinforcement learning in particular, and certain areas related to evolutionary topics.

Deep neural networks specialists have unexpectedly emerged as pioneers in this field: they follow what is being created in genetic programming, try to take something from these developments for their own research, but at the same time also share something of their own.

Arina: The second direction for the field’s development is constituted by a somewhat internal trend. The fact is that, taking machine learning for an example, there is a well-developed tradition of testing the algorithms and comparing them with each other, there are standard datasets, and so on. In evolutionary computing, especially in regard to discrete optimization, because of certain historical, objective reasons, the situation is much worse. Discrete optimization tasks are all very different from one another, which makes it difficult to create some kind of a standardized dataset. Nevertheless, it is very important for specialists to have standardized means of testing and comparing algorithms. For this reason, the scientific community is moving towards the development of a common strategy. This has also been the subject of many sections at this year’s conference.

Going back to machine learning, which interesting results can this field’s rapprochement with evolutionary computation lead to?

Maksim: We could dwell on this for a long time, and I think that I’m only able to give one part of the answer. I have an idea that everyone is so into researching big data nowadays because it is more accessible in some places and easier to train on, and that’s why the amount of networks created also turns out to be quite large.

On the other hand, there are breakthroughs happening in the field of deep learning from time to time that allow us to do the same but with significantly less effort (in less time or with less data). Some of these developments are not even related to cases where someone, say, comes up with a radically different neural network architecture, but occur because the very formulation of the research question changes. And it seems to me that in some cases, what will be observed at the junction of genetic programming and deep networks will look something like this. That is, we’ll see the proposition of either novel, never-seen-before architectures or previously unprecedented ways of answering existing questions.

Arina: In my opinion, when we combine evolutionary algorithms and neural networks, we are moving closer towards the idea of evolving robots that was first expressed by Alan Turing in the 1950s. It centers around a supposition that can create a kind of “robot-child” which will go on to learn something much as its human counterpart, but most likely won’t be ideal right off the bat so it will take several generations to perfect it, just as it happens in nature. Perhaps we are nearing the moment when neural networks can, speaking figuratively and without going into details, evolve by themselves.

This year ITMO University scientists presented a record amount of reports, 11 in total. What is the reason for this?

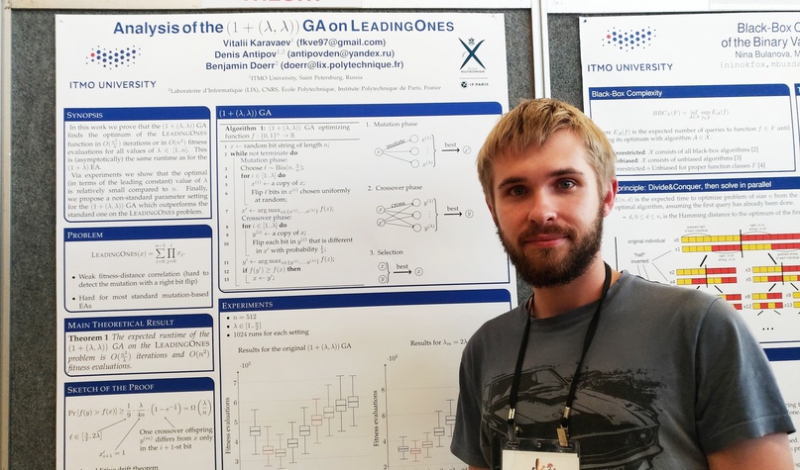

Arina: Some time ago, we began cooperating with professors Carola and Benjamin Doerr of Sorbonne University and École Polytechnique. And I see such a surge in publication activity as a consequence of this joint research. For a long time already, we and Prof. Benjamin Doerr have been engaged in the implementation of a joint PhD program. Participating in this program, among others, is our colleague Denis Antipov, who has already started to take on his own students. One of them, Vitaly Karavaev, has been awarded a prize for the best report in the student section at this year’s GECCO conference. That’s why Vitaly can be seen as our scientific grandson in a way.

Apart from this, as part of our collaboration with Carola Doerr we have formulated a large number of thesis topics. This year, as many as five students have successfully defended their works under our and Dr. Carola Doerr’s joint guidance. What is more, we have managed to prepare a paper for an international conference, which is rare with Bachelor’s students. Thus, this collaboration we have been developing in recent years have borne fruit and paved the way for such a successful performance by the young ITMO scientists at the latest GECCO conference.

For example, working in collaboration with Carola Doerr, another of our students, Ivan Ignashov, has contributed to the development of a library, or a special platform, for the testing of evolutionary algorithms, first and foremost those solving combinatorial optimization tasks. Ivan has created a way for displaying information that allows to track the interconnection between the quality of the solutions obtained, the probability of what we would obtain as a result, and the time needed for obtaining these results.

To what fields the research your group has presented at this year’s GECCA conference is connected?

Maksim: Despite the fact that we are a small group, we pursue a variety of different topics.

One of the reports we presented at the conference is a research project conducted in collaboration with Vladimir Ulyantsev and Alexander Semyonov of the Matrosov Institute for System Dynamics and Control Theory of the Siberian Branch of the Russian Academy of Sciences. Building on Alexander Semyonov’s ideas, we proposed a far-reaching application of evolutionary algorithms in cryptanalysis. What makes it so interesting? In our case, the evolutionary algorithm is used as one of the elements of a complex, composite set of methods. My contribution to this project consisted in finding ways for the application in this set of methods of mathematical statistics in order to decrease the time needed for the algorithm to work. Of course, it turned out that the main goal of this work was the opportunity to tell people that evolutionary algorithms can and should be applied in cryptanalysis. And we presented one of the most viable and serious ways to achieve that. You can learn more about this research project here; it was supported by the Russian Science Foundation grant №18-71-00150.

Another scientific field that is very important for our laboratory comes in the form of data structures. For the past two years, I have been involved in the development of solvers for different tasks that pertain to the computational geometry problems arising in multicriteria evolutionary algorithms. Last year, I participated in the conference with the definition of a problem to which a lot of problems occurring in the multi-criteria evolutionary population algorithms can be reduced. Together with the definition of this problem, I also proposed not only an effective algorithm for its solution but also a very high-quality implementation of the latter, which can be used by all interested researchers on the out of the box basis.

This year, I moved from the category of the tasks where all the input data is known to solving problems where the input data is expanded upon with time. It turned out that the situation is much less rosy here. I didn’t manage to create a working solver, but what I did create was a number of checks for the concept, which represent a range of scenarios which demonstrate that this task is much less homogenous than the previous ones, which makes it much more interesting. (This research is available here; this work was supported by the Russian Science Foundation grant №17-71-20178.)

Where exactly can this be used?

Maksim: As a general rule, multi-criteria evolutionary algorithms tend to be harder than those where only one value needs to be optimized. Today, most of these algorithms operate in multidimensional spaces where each criterion has its own coordinate axis. Thus, the fitness value of each solution corresponds to a number of points in a multidimensional space with which different operations are carried out. But due to the fact that the authors of such algorithms and specialists in the field of computational geometry rarely cross paths with each other, the situation here is close to that of the reinventing of the wheel, and a very badly operating one at that. In other words, what we see are very heavy, unwieldy algorithms emerging from scratch.

In light of my competition-participating past (Maksim Buzdalov is a world champion in programming – Ed. note), I try to combat that. My current approaches lead to a situation where it’s possible to generalize the algorithmic material available, write one procedure based on the results of such a generalization, and make it as efficient as possible so that everyone else who is too lazy to think about how it all works could just use it. Simply speaking, you could say that what we get is a contribution to all the multi-criteria evolutionary algorithms at the same time. Such research roughly constitutes one half of all the work done under my guidance as part of the Russian Science Foundation grant №17-71-20178.

As you have noted, the majority of your work belongs to the realm of fundamental research, the evolutionary algorithms theory. If we were to speak about applied research, what kind of work do you pursue in this direction?

Arina: We deal with the evolutionary algorithms theory, but just theory pure and simple: we work towards the narrowing of the gap between the theory and its practical application. And it’s not only our laboratory that pursues this objective: this is a very topical and important task for the whole evolutionary computation community at large. There are special grants that are dedicated to this topic of which we are also participants. And our other works can also be characterized as research aimed at bridging the gap between theory and practice.

For example, our students Anna Rodionova and Kirill Antonov have prepared a report together with Carola Doerr and I demonstrating that it’s not always such a good idea to blindly opt for asymptotic, or, in other words, theoretical estimates.

Suppose that there is an algorithm which is proven to be optimal for a certain kind of problem, meaning that asymptotically it works with no less effectiveness than all other possible algorithms. But the key word here is asymptotically. This means that it works better in cases where certain parameters are large enough, for example when we have a fairly large population. But in practice, a large population, especially if we want to parallelize the process of calculating the fitness of a species, signifies that we must have a lot of processors, several thousand of them.

It’s more feasible to use dozens, or even hundreds of processors, but this amounts to a different situation in which this method is no longer optimal. What is more, the optimal methods for cases where we have a generation of about a dozen species are different from the optimal methods for cases with a generation with hundreds of them. Unfortunately, theoretical results at the current level of research do not always allow us to see this. The young scientists working as part of our group have shown all this in their work, which raises a very important question about the limits of applicability of the theory in practical conditions.

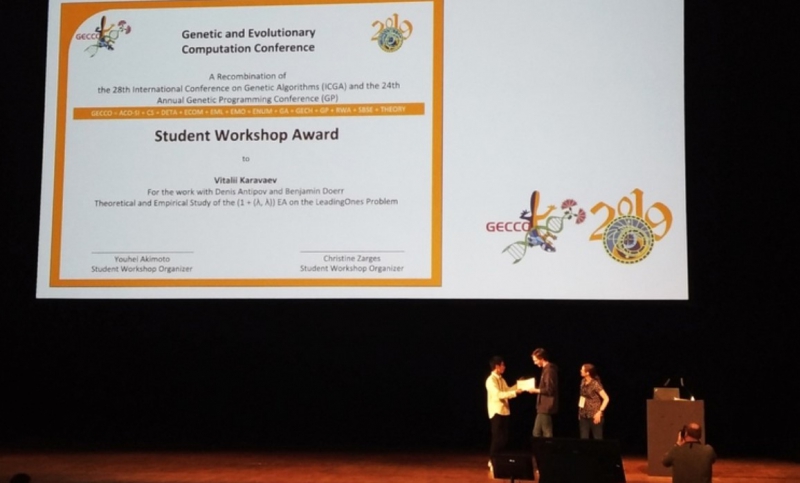

You have also mentioned that the work by the Bachelor’s graduate Vitaly Karavaev has been recognized as the best in the student section. What, in your opinion, has brought about this victory?

Maksim: An important trend of the last ten years is that some results are remarkable not by themselves, but by the means with which they were obtained. That is, if in the process of working on a specific algorithm or a specific task a researcher came up with some universal technique that can be used by others to achieve their own goals, this is appreciated much more than just a result obtained using standard methods. For example, in not one but numerous evaluation cases, the question of “How innovative were the techniques used?” comes up as a hint to reviewers. This is an important evaluation criterion and it’s a big plus for a work to exhibit innovative research methods. Vitaly’s work offers this big advantage.

To prove the operating time of the algorithm under study, the authors of the work needed to significantly improve on one of the theorems by way of making it more refined. In its original form, it centered on mathematical expectations of the running time of a certain process; it also considered different options in order to assess how much the value of a random variable can deviate from its mathematical expectation. In the process of obtaining the main declared result of his work, Vitaly, together with his academic advisors, was able to significantly clarify this deviation, which forms a crucial part of the study.

In general, the presentation at the GECCO 2019 student section constitutes only a preliminary version of this research. An extended article on this topic has also been accepted at the Foundations of Genetic Algorithms (FOGA) conference, which will be held in late August. This is a very prestigious and influential conference that takes place every two years, at which, as a rule, very few reports are presented (totalling to as little as 13 this year). And each of these reports, including Vitaly’s, proves to be, without any exaggeration, a big hit among the scientific community.