Six hundred gigabytes of webpages

The impressive capabilities of the GPT-3 language model are no secret: an article it wrote was even published in The Guardian. The neural network can contemplate philosophy, come up with poems and song lyrics, and write detective stories, medieval chivalric romances, scholarly articles, newspaper columns, and press releases. Its training dataset comprises almost six hundred gigabytes of text and web pages, including the entirety of English-language Wikipedia, works of prose and poetry (both classical and contemporary), online archives of scientific articles, media publications, code from GitHub, travel guides, and even culinary recipes.

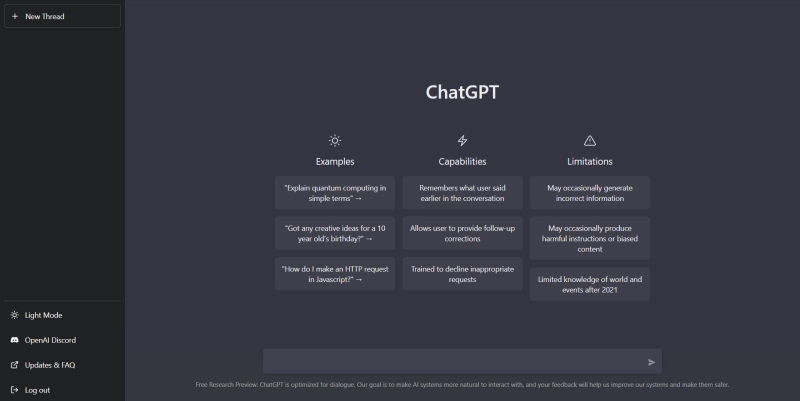

In late November, OpenAI, the developer behind GPT-3, released ChatGPT, an open-access chatbot built on the neural network. Its primary feature is that it is capable of “understanding” the context of a conversation, so you can talk with it much as you would with a real person: seek advice, ask questions, request that it search for information, or even have it review your code. Developers are testing it by having it write technical documentation and check pieces of code for bugs.

ChatGPT homepage. Credit: Nið ricsað // Wikimedia Commons // CC BY-SA 4.0

An essay that’s on point

But no one has yet checked whether an essay written entirely by GPT-3 can earn a passing grade on a real exam. Two students from top Russian universities, Maxim Dremov of HSE and Andrey Getmanov of ITMO, decided to run such a test. Maxim studies at the School of Philological Studies, while Andrey is a student in ITMO University’s Big Data and Machine Learning Master’s program. The two have a long history of experimenting with neural networks and pushing the boundaries of different language models on various literary tasks. For example, they once had GPT-3 tackle a real contest-level task: writing an essay about a historical event from the perspective of a person who was there at the time. The neural network did not just generate a text, but did so in a style appropriate for the time period.

From this emerged the idea to find out whether an AI can pass the Unified State Exam in the Russian language all on its own. The essay topic, “Why can scientific advances that increase people’s quality of life be harmful for humanity?”, was picked from the official list used in the 2022 exam. The task was to write at least 350 words presenting a detailed thesis supported by three arguments, one of which must be drawn from a work of fiction and one of which must be a real-life example.

The end product was a selection of three essays: the first referenced Brave New World by Aldous Huxley; the second, The Road by Cormac McCarthy and Mary Shelley’s Frankenstein; the third, 1984 by George Orwell. The results were shown to Mikhail Pavlovets, an associate professor at HSE’s School of Philological Studies and head of the Project Laboratory for Intellectual Competitions in Humanities. Mikhail himself develops tasks for the Unified State Exam and for contests for school students. He confirmed that the AI’s text meets all the criteria in full and would earn a passing grade in the exam. He also showed the essay to some of his colleagues, who pointed out several linguistic errors but likewise conceded that it meets the Unified State Exam’s criteria.

Credit: photogenica.ru

Fakes and pseudoscientific nonsense

Naturally, whenever AI development reaches a new stage, a legitimate question arises: will text generators one day replace journalists, writers, or even scientists? According to Andrey Getmanov, that is simply not possible, at least not until the main problem with generated texts is solved: their tendency to present falsehoods as fact.

“The issue of generating fake statements, which seem like the truth but are not, is as yet unresolved. This was most apparent with the release of the demo of the Galactica neural network, created specifically for writing scientific articles. It attracted less hype than ChatGPT, but it did not go unnoticed either. As it turns out, it could make claims that were pure pseudoscientific nonsense with complete confidence, thus deceiving its user,” comments Mr. Getmanov.

Galactica came out two weeks earlier than ChatGPT. It was trained on a set of more than 48 million articles, textbooks, lecture notes, scientific papers, and encyclopedias. Furthermore, its users could add their own articles and materials to the dataset. In the end, the developers had to restrict access to it, as trolls very quickly taught the network to spout nonsense.

The neural network art critic

Nevertheless, such text generators still have their uses, especially given how much useful and relevant information their datasets contain. For instance, a neural network can help with selecting literature or with surveying entire scientific fields and philosophical concepts. Andrey Getmanov confesses that GPT-3 helps him to better understand modern art:

“Neural networks, provided you are competent and capable of googling, can be very useful tools. For instance, you can use one to immerse yourself in an area of research that is still unknown to you. Ask it for literature recommendations, and then read them. Once, I asked ChatGPT to explain certain pieces of contemporary technological art to me. I typed in a short description of each piece and then asked it to elaborate on what each one contains based on various philosophical concepts. Having said that, I was already familiar with these artworks, so I had some understanding of their essence. But GPT actually had a fresh outlook, showcasing aspects that I did not even think were there. And the descriptions I gave were quite succinct, just a single paragraph each, more technical than conceptual. It is surprising that a non-human can use these descriptions to make statements, since their meaning is buried quite deep.”

Andrey Getmanov. Photo courtesy of the subject

Gaps in education

So it seems that writing jobs are not going to go extinct. But the fact that an AI is capable of following a template to pass an exam raises some questions about educational standards, as Mikhail Pavlovets, one of the experiment’s participants, wrote in his column in the Vesti Obrazovaniya online blog (the aforementioned essay by the AI can also be read there, in Russian). Andrey Getmanov is of the same opinion:

“When I was in school and had to pass the Unified State Exam six years ago, these essays seemed like formulaic nonsense to me. You write them and then forget what you wrote as soon as they are submitted. I think that such pointless things have no place in either school or higher education,” he said.