Computational imaging and lensless microscopy

The term “computational imaging” refers to forming images by computation, for example by calculating them from recorded unfocused diffraction patterns rather than projecting them with lenses. This approach is used in a new generation of optical devices, including telescopes and microscopes.

A lensless computational microscope has no lenses of any kind to form an image on the image sensor. Instead, the specimen is illuminated (by a laser or a diode) and its diffraction pattern is recorded. Special algorithms then compute the final image from these patterns. Like holographic microscopes, lensless microscopes have several advantages. In a conventional optical microscope, the observed image is a highly magnified photographic image. But if the specimen is transparent (as many living cells are), it shows little or no contrast in such an image unless it is stained with special dyes. Lensless computational microscopy works much like holography: rather than simply recording a photographic image, it recovers full information about the wave field, including one of its most important properties, the phase delay of the light wave.

A light wavefront passing through a transparent object, or reflecting off its surface, is delayed by different amounts at different points, depending on the object's optical properties (such as local thickness and refractive index) or its surface relief. The phase delay recovered by such microscopes makes it possible to visualize transparent objects and to measure three-dimensional surface profiles.
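To illustrate where this phase delay comes from, the sketch below uses the standard thin-object approximation. Light crossing a transparent layer of thickness t and refractive index n, surrounded by a medium of index n0, picks up an extra phase of 2π(n − n0)t/λ. The dome-shaped "cell", the two refractive indices and the 532 nm wavelength are hypothetical values chosen for illustration. They are not parameters from the study.

```python
import numpy as np

def phase_delay(thickness, n_object, n_medium, wavelength):
    """Thin-object phase delay in radians: 2*pi*(n_object - n_medium)*thickness / wavelength."""
    return 2 * np.pi * (n_object - n_medium) * thickness / wavelength

# Hypothetical dome-shaped "cell", 20 um across and up to 4 um thick, on a 64 x 64 grid.
coords = np.linspace(-15e-6, 15e-6, 64)
xx, yy = np.meshgrid(coords, coords)
r = np.hypot(xx, yy)
thickness = 4e-6 * np.sqrt(np.clip(1 - (r / 10e-6) ** 2, 0.0, None))

# Hypothetical refractive indices (cell vs. surrounding medium) and a 532 nm wavelength.
phi = phase_delay(thickness, n_object=1.38, n_medium=1.33, wavelength=532e-9)

# The object changes only the phase of the transmitted field, not its amplitude,
# so a camera recording intensity directly behind it would see no contrast at all.
field = np.exp(1j * phi)
print(f"maximum phase delay: {phi.max():.2f} rad")
print("intensity uniform:", np.allclose(np.abs(field) ** 2, 1.0))
```

The recorded intensity carries no trace of φ in this idealized case. The phase therefore has to be recovered computationally, which is what holographic and lensless methods are designed to do.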

In lensless microscopes, computation is the key element. It not only forms the image but can also improve the optical signal captured by the light-sensor matrix (the camera). In other words, mathematical methods and algorithms can deliver better image quality from the same hardware.

Credit: osapublishing.org

Field of view

The field of view is an important property of any microscopic image. Ordinarily, higher magnification reveals finer detail but shrinks the area of the object that can be observed. In “traditional” microscopy, the objective and the tube lens project light from a small area of the object onto a larger area of the sensor matrix, magnifying the image. But the sensor size is fixed when it is manufactured and cannot be changed afterwards, so it limits the field of view. Computational methods make it possible to move beyond this limitation and increase the field of view.
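The trade-off can be made concrete with one relation: the object-side field of view equals the sensor's physical width divided by the optical magnification. The pixel count, pixel pitch and magnifications below are hypothetical.

```python
def field_of_view_mm(pixels, pixel_pitch_um, magnification):
    """Object-side field of view along one axis, in millimetres."""
    sensor_width_mm = pixels * pixel_pitch_um / 1000.0  # physical sensor width
    return sensor_width_mm / magnification

# Hypothetical sensor: 2048 pixels across with a 3.45 um pitch (about 7.07 mm wide).
for mag in (10, 20, 40, 100):
    fov = field_of_view_mm(2048, 3.45, mag)
    print(f"{mag:>3}x magnification -> field of view {fov * 1000:.0f} um")
```

Because the sensor width is fixed, every doubling of magnification halves the observable area of the object.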

These methods rely on recording a sequence of different diffraction patterns with the camera. The differences can be introduced in various ways. This study used phase masks displayed by a spatial light modulator placed in the optical setup. By processing the diffraction patterns recorded with these masks, the researchers computationally extended the field of view and, as a result, improved the resolution.
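Below is a minimal simulation of how phase masks make the recorded diffraction patterns differ from one another. It makes two simplifying assumptions: propagation to the sensor is modeled as far-field (Fraunhofer) diffraction with a 2D FFT, and the masks have uniformly random phases. The study itself used a spatial light modulator in its own optical geometry. The function name `coded_patterns`, the test object and all parameters are hypothetical.

```python
import numpy as np

def coded_patterns(obj, masks):
    """Intensity the camera would record for each phase mask (far-field model)."""
    patterns = []
    for mask in masks:
        field_at_sensor = np.fft.fftshift(np.fft.fft2(obj * mask, norm="ortho"))
        patterns.append(np.abs(field_at_sensor) ** 2)  # the sensor records intensity only
    return np.stack(patterns)

rng = np.random.default_rng(1)
n = 64
# Hypothetical transparent object: unit amplitude, phase given by a smooth bump.
y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
obj = np.exp(1j * 1.5 * np.exp(-(x**2 + y**2) / 0.1))

# Four random phase masks, standing in for the patterns shown on the modulator.
masks = np.exp(1j * rng.uniform(0, 2 * np.pi, size=(4, n, n)))
patterns = coded_patterns(obj, masks)

print(patterns.shape)
print("patterns differ:", not np.allclose(patterns[0], patterns[1]))
```

With `norm="ortho"` the FFT conserves energy, so every pattern carries the same total intensity but distributes it differently. Those differences are the extra data a reconstruction algorithm can exploit.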


“By using various masks, we increase the amount of useful data that can be extracted with special algorithms. In this study, we used it to recover information between neighboring pixels that the sensor could not detect. This is done with a mathematical apparatus that represents signals in sparse form. In layman’s terms, it works like this: say you have a sheet of graph paper with 8 by 8 squares. Optical fields are by nature not discrete, but you can only record them on such a discrete grid, that is, pixels. Most existing computational imaging methods preserve the sampling interval when reconstructing the object, so if you recorded your signal on an 8x8 grid, the final image is digitized on that same grid. But if the signal meets certain sparsity criteria, the recorded 8x8-pixel signal can be used to restore the object on a finer grid: 16 by 16 or even 32 by 32. The resolution, in turn, increases by a factor of 2 or 4. Our computational algorithm also extrapolates the signal beyond the recorded area. Going back to our example, this means that our 8x8 grid is now surrounded by additional pixels, further increasing the field of view and, therefore, the resolution of the image. Through computational methods, we increase an image’s resolution without changing the sensor matrix or other hardware of the device. This saves the money we would otherwise have to spend upgrading the equipment to achieve the same results we obtained with computational algorithms,” says Nikolay Petrov, head of ITMO University’s Laboratory of Digital and Display Holography.
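The principle that sparsity plus mask diversity allows reconstruction on a finer grid can be illustrated with a deliberately simplified one-dimensional toy. This is not the authors' algorithm, which combines phase retrieval with a sparsity-based reconstruction. The toy makes several assumptions:

- The sensor measures the complex field directly, so phase retrieval is skipped.
- Each of 8 sensor pixels sums 4 cells of a 32-cell fine grid.
- Two random phase masks diversify the measurements.
- The object consists of a few point-like features, at most one per sensor pixel.

Under these assumptions, orthogonal matching pursuit (OMP), a standard greedy sparse-recovery method, recovers all 32 fine-grid values from only 16 measurements. The helper names are hypothetical.

```python
import numpy as np

def coded_sensor_matrix(masks, factor):
    """Linear toy sensor: multiply the fine-grid field by each mask, then let every
    sensor pixel sum `factor` neighbouring fine cells. One block of rows per mask."""
    n_fine = masks.shape[1]
    integrate = np.kron(np.eye(n_fine // factor), np.ones((1, factor)))
    return np.vstack([integrate * mask for mask in masks])  # integrate @ diag(mask)

def omp(A, y, k):
    """Orthogonal matching pursuit: greedily select k columns of A that explain y."""
    norms = np.linalg.norm(A, axis=0)
    support, residual = [], y.copy()
    for _ in range(k):
        scores = np.abs(A.conj().T @ residual) / norms  # normalised correlations
        scores[support] = 0.0                            # never reselect a column
        support.append(int(np.argmax(scores)))
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1], dtype=complex)
    x[support] = coef
    return x

rng = np.random.default_rng(0)
factor, n_fine = 4, 32                       # 8 sensor pixels, 4x finer reconstruction grid
masks = np.exp(1j * rng.uniform(0, 2 * np.pi, size=(2, n_fine)))  # two random phase masks
A = coded_sensor_matrix(masks, factor)       # 16 measurements, 32 unknowns

x_true = np.zeros(n_fine, dtype=complex)
x_true[[1, 14, 27]] = [1.0, 0.5 - 0.5j, 2.0j]  # sparse object: three point-like features
y = A @ x_true
x_hat = omp(A, y, k=3)
print("recovered on the fine grid:", np.allclose(x_hat, x_true))
```

Without the masks, the four fine cells inside one sensor pixel would contribute identically and could not be told apart. The masks make their contributions distinguishable, and sparsity makes the underdetermined system solvable.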

Computational super-resolution

In microscopy, spatial resolution is bounded by the diffraction limit. This means that the detail in an image cannot be increased indefinitely simply by using ever more powerful objectives. Setting discretization issues aside, spatial resolution is limited by two parameters: the extent of the recorded wave field (the field of view) and the wavelength of the light used. The shorter the wavelength and the larger the field of view, the finer the spatial resolution. Because the algorithms in this study computationally enlarge the field of view, they improve the spatial resolution as well.
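Here is a sketch of how these two parameters interact. It assumes the common Abbe estimate δ = λ/(2 NA). For a lensless setup, the numerical aperture NA is taken as the sine of the half-angle that the recorded area subtends at the object. The wavelength, object-to-sensor distance and sensor width are hypothetical. Doubling the width stands in for extrapolating the field beyond the physical sensor, and pixel size is ignored, matching the "discretization aside" caveat.

```python
import math

def numerical_aperture(aperture_width, distance):
    """NA of a lensless setup: sine of the half-angle subtended by the recorded area."""
    half = aperture_width / 2
    return half / math.hypot(half, distance)

def abbe_resolution(wavelength, aperture_width, distance):
    """Smallest resolvable period, wavelength / (2 NA), per the Abbe criterion."""
    return wavelength / (2 * numerical_aperture(aperture_width, distance))

wavelength = 532e-9   # hypothetical green laser
distance = 5e-3       # hypothetical object-to-sensor distance
sensor = 7e-3         # hypothetical sensor width
for width in (sensor, 2 * sensor):  # recorded area vs. computationally extended area
    d = abbe_resolution(wavelength, width, distance)
    print(f"aperture {width * 1e3:.0f} mm -> resolution {d * 1e9:.0f} nm")
```

A shorter wavelength lowers δ directly. A wider recorded field raises the NA and lowers δ too, although with diminishing returns as the NA approaches 1.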

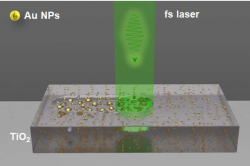

Schematic of the optical system. Credit: osapublishing.org

“To develop this approach further and simplify the optical system, we need to remove the spatial light modulator and reduce the number of masks. One of the more obvious directions is to use a single mask that is indexed to consecutive positions. This would make the computational lensless microscope we have developed considerably cheaper, since the spatial light modulator is its most expensive part,” adds Igor Shevkunov, a researcher at the Laboratory of Digital and Display Holography and a participant in Tampere University of Technology’s Fellowship Program.
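One plausible reading of "a single mask with consecutive indexing" is a fixed physical mask moved to a series of positions between exposures, so that each position plays the role of a different mask. The sketch below follows that assumption. It models the translation with `np.roll`, which wraps around the edges and is a simplification of a real sliding mask, and it reuses the far-field FFT model. The shift positions, test object and helper name are hypothetical.

```python
import numpy as np

def shifted_masks(base_mask, shifts):
    """Derive several coded masks from one physical mask by translating it.
    np.roll wraps around the edges, a simplification of a real sliding mask."""
    return np.stack([np.roll(base_mask, shift=s, axis=(0, 1)) for s in shifts])

rng = np.random.default_rng(2)
n = 64
base = np.exp(1j * rng.uniform(0, 2 * np.pi, size=(n, n)))  # one fixed random phase mask
shifts = [(0, 0), (0, 8), (8, 0), (8, 8)]                    # hypothetical mask positions
masks = shifted_masks(base, shifts)

# Hypothetical non-uniform test object (smooth phase bump).
yy, xx = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
obj = np.exp(1j * 1.5 * np.exp(-(xx**2 + yy**2) / 0.1))

# Far-field intensity for each mask position; fft2 acts on the last two axes.
patterns = np.abs(np.fft.fft2(obj * masks, norm="ortho")) ** 2
print(masks.shape, patterns.shape)
```

The object must have some structure for this to work. With a perfectly uniform object, a circularly shifted mask would produce exactly the same intensity pattern, because a shift only changes the phase of the Fourier transform. The diversity comes from the mask moving relative to the object's features.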

Improving lensless computational microscopes will be another step toward better research in biology, chemistry, medicine and other sciences.

Reference: Vladimir Katkovnik, Igor Shevkunov, Nikolay V. Petrov, and Karen Egiazarian, Computational super-resolution phase retrieval from multiple phase-coded diffraction patterns: simulation study and experiments, 2017, Optica.