First of all, could you elaborate on bibliometrics’ significance for modern science? What are its main objectives and what is it able to tell us?

Bibliometrics provides science with a lot of information. Its first and most important function, which it acquired half a century ago, is helping you find the most relevant articles in your field of research. You can not only find articles written by other authors, but also see which are the most cited and therefore the most influential. That’s especially important nowadays, in the age of exponential growth in academia. Another objective of bibliometrics is to help you understand what is being published and cited a lot. For us researchers, it can also be a useful tool for finding new research contacts.

Moreover, our data can help you choose a journal to publish your research in. Successful researchers care about the number of people who have heard about their work. The bigger the journal’s audience, the higher the chances that someone will notice your paper and cite it.

In recent decades, bibliometrics has become especially relevant as a way to manage research and measure its impact. It’s possible to evaluate the impact of a certain author, organization or even a whole country. We can also compare universities: university rankings use bibliometrics a lot, by the way. Finally, bibliometrics helps us highlight the most important trends in a certain field and see which topics are the most relevant and groundbreaking.

Could you give us an example of bibliometric research in a certain field of science?

We conducted a study of publishing activity among AI researchers over the last ten years. It lets you see how bibliometrics works.

In the course of the study, we noticed that the first peak of publishing activity on the topic of AI occurred in 2006, and the second one in 2017. After 2017 there has been a decline, or even stagnation. However, since this is a new and developing field, we expect a new, higher peak at some point.

We selected the most cited publications and visualized the connections between the publications and their keywords using applications like VOSviewer. That way, we managed to find the most groundbreaking topics that have kept scientists busy in the last couple of years: convolutional neural networks, multicriteria decision making and the Dempster–Shafer theory.

Now it would be appropriate to get back to the very definition of a highly cited article. A highly cited article is a paper that was published in the last ten years and is among the top 1% of the most cited articles in its field. The year of publication is very important here, because papers published in 2017 couldn’t possibly have gathered as many citations as papers from 2012, so you can’t compare them by raw citation counts alone.
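To make the role of the publication year concrete, here is a minimal sketch, assuming each paper is ranked only against papers from the same field and year. The function name `highly_cited`, the record layout and the citation counts are hypothetical and made up for illustration; a real database may handle ties and small cohorts differently.

```python
from collections import defaultdict

def highly_cited(papers, top_percent=1):
    """Return ids of papers in the top `top_percent` % of their (field, year) cohort."""
    cohorts = defaultdict(list)
    for paper in papers:
        cohorts[(paper["field"], paper["year"])].append(paper)

    selected = set()
    for group in cohorts.values():
        ranked = sorted(group, key=lambda p: p["citations"], reverse=True)
        # Simplification: keep at least one paper per cohort and ignore ties.
        cutoff = max(1, len(ranked) * top_percent // 100)
        selected.update(p["id"] for p in ranked[:cutoff])
    return selected

# Made-up data: 200 papers per year; older papers have had time to gather more citations.
papers = [{"id": f"2012-{i}", "field": "AI", "year": 2012, "citations": 5 * i} for i in range(200)]
papers += [{"id": f"2017-{i}", "field": "AI", "year": 2017, "citations": i} for i in range(200)]

top = highly_cited(papers)
print(sorted(top))
```

In this toy data set, a 2017 paper with 199 citations is flagged as highly cited, while a 2012 paper with 500 citations is not, because each is judged only against its own year.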

You’ve said that, thanks to bibliometric research of that kind, the impact of a certain author, organization or even a whole country can be evaluated. What about Russian AI researchers?

Our scientists don’t publish many papers in the AI field in comparison with other fields. We are in 24th place worldwide, which is much lower than in physics. They also don’t get cited a lot: an average Russian paper in the field of AI has half the worldwide average normalized citation impact. It should be noted, though, that ITMO University’s figure is quite close to the world average.

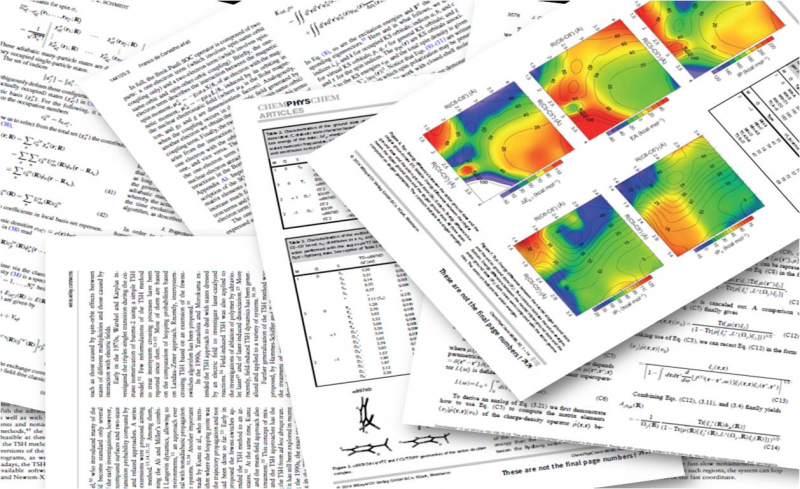

This raises some questions: why is it that way? Is our research worse? The answer is no. It has a lot to do with the fact that our researchers mostly get published in low-impact journals. All journals are divided into four equal groups, or quartiles, according to the most common citation indicator, the impact factor. The first quartile contains the 25% of journals that are cited the most, and the fourth contains the 25% that are cited the least. Still, we have to understand that even fourth-quartile journals are good: they have been checked by our editorial commission, which is considered to be the strictest among the international bibliometric databases. In these journals all papers must be peer-reviewed. It’s just that the audience is not that big: leading universities don’t subscribe to them, and therefore there are fewer citations.
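The quartile split described here can be sketched in a few lines. This is an illustration only: the journal names and impact factors are invented, `assign_quartiles` is a hypothetical helper, and real databases may rank journals within subject categories rather than across all journals at once.

```python
def assign_quartiles(impact_factors):
    """Map journal name -> quartile (1 = most cited quarter, 4 = least cited quarter)."""
    ranked = sorted(impact_factors, key=impact_factors.get, reverse=True)
    n = len(ranked)
    # Position 0 is the journal with the highest impact factor.
    return {journal: (position * 4) // n + 1 for position, journal in enumerate(ranked)}

# Hypothetical journals with made-up impact factors.
journals = {"A": 9.1, "B": 6.4, "C": 4.0, "D": 3.2, "E": 2.5, "F": 1.8, "G": 0.9, "H": 0.4}
quartiles = assign_quartiles(journals)
print(quartiles)
```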

Then why do our researchers publish there so often?

There are many reasons. On the one hand, I think it’s a relic of Soviet science. There are lots of Russian journals in which authors choose to get published because they’re used to it and they think it’s easier and faster to do here, in Russia. Maybe the lack of experience in communicating with big journals is also one of the reasons. Since we’re talking about AI, I’m not considering the lack of English language skills, because I’m pretty sure specialists in this field are good at it. However, it can be a problem for researchers in other fields.

Another factor is the indicators that are used when research output is evaluated. They mostly reward publishing a lot, so it’s easier to get several publications in a familiar journal that is not very strict review-wise, even though it has a lower impact factor, than to get one publication in Nature, for example.

However, getting published in lesser-known journals is not only our problem. In the 19th century there was a great American physicist, mathematician and mechanic, Josiah Willard Gibbs. His research in thermodynamics shaped the physics of the 20th century. For example, Max Planck’s work in quantum mechanics, which was awarded the Nobel Prize in 1918, was based on his research. The problem is that Planck only got the opportunity to read Gibbs’ main paper, On the Equilibrium of Heterogeneous Substances, published in 1875-1878, in 1892, so he wasted a lot of time rediscovering thermodynamic principles that had already been formulated 14 to 17 years earlier.

That happened because Gibbs was quite humble and published his paper in the Transactions of the Connecticut Academy of Arts and Sciences, a journal managed by his brother-in-law, a librarian. It wasn’t too popular even by Connecticut standards.

What should we do about it?

We should try to get published in first- or second-quartile journals more often. Not in the ones that we are used to, but in the ones that have a higher impact factor. Any WoS subscriber can search by topic in a particular field and check any journal’s quartile right in the search results.

We don’t have any illusions: it’s true that it’s much harder to get published in high-impact journals, especially if you’re a young researcher. But if your current paper is at least as good as the last one, we advise you to try to get it published in a journal with a higher impact factor than last time.

This method, if applied countrywide, might lead to a decrease in publication activity, but it will improve the image, citation counts and participation of our scientists in the global research process. If you’re published in a first-quartile journal, then everyone wants to be friends with you, and you get invited to the best conferences or to give lectures at universities connected with your field of research.

It’s worth checking out open access journals. They don’t charge libraries for subscriptions, so they can be read by more people. In that case, though, the author has to pay an article processing charge.

In a “he who pays the piper calls the tune” situation, a conflict of interest often occurs. All journals of this kind can be divided into legitimate ones and “predatory” ones. The latter are like parasites: they don’t review papers, but basically sell the possibility of publication to researchers who need one just for the record. Here we can offer universities something to think about. A publication in a first- or second-quartile open access journal not only gives you the best chance of being cited, but also guarantees that the journal has never been identified as “predatory” and will never even consider becoming one, because its reputation would never recover.

As I see it, it should work the following way: a researcher confirms that their paper was accepted by the journal and that their research is not supported by a grant that obliges them to publish the results in open access, and then the university supports the researcher by paying their article processing charge.

You’ve mentioned a normalized citation impact factor before. What is it? Is it somehow connected to the Hirsch index?

No, they aren’t related to each other. The thing is, many researchers believe that the h-index has a lot of disadvantages, so it’s far from the best option. For example, a scientist who gets a lot of citations can have a lower Hirsch index than a much less renowned colleague. Let’s compare two cases. One author has ten publications that were each cited exactly ten times, so their h-index is ten. Another scientist has eight papers with eight or more citations each, but three of those papers were cited more than 1,000 times, which is basically Nobel Prize level. Still, their Hirsch index is going to be eight.
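The comparison above can be checked with a short sketch of the standard h-index definition: the largest h such that h papers have at least h citations each. The exact citation counts for the second author are made up for illustration; the interview only says that three papers were cited more than 1,000 times and the rest at least eight times.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h

author_a = [10] * 10                               # ten papers, ten citations each
author_b = [1500, 1200, 1100, 8, 8, 8, 8, 8]       # hypothetical counts, eight papers

print(h_index(author_a), sum(author_a))
print(h_index(author_b), sum(author_b))
```

With these numbers the second author has far more citations in total, yet the lower h-index, which is exactly the weakness being described.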

There’s another problem: we can’t compare researchers who work in different fields. Mathematicians don’t really like the h-index, because the number of citations is usually lower in their subject. Historians cite each other even less. However, this doesn’t tell us anything about mathematicians and historians as researchers. It’s just that their citation traditions differ from those in genetics, oncology or molecular biology, where people cite a lot. If we compare the h-indexes of a mathematician, a geneticist and a historian, the geneticist will probably win by far, even if they aren’t as prominent in the research community as the other two.

This problem can be partially solved by the normalized citation impact: it’s a fraction with the number of citations an article received in the numerator and the average number of citations received by other articles of the same type, year and field in the denominator. It’s very important to compare articles of the same kind, because in some fields a review article gets cited four times as often as an original research paper.
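As a rough illustration, here is a minimal sketch that assumes the indicator is a paper’s citation count divided by the average citation count of papers of the same type, year and field, so that 1.0 means “cited as much as the average”. The function name, record layout and numbers are hypothetical, and for simplicity the baseline is computed from the sample itself rather than from a worldwide data set.

```python
from collections import defaultdict
from statistics import mean

def normalized_impact(papers):
    """Map paper id -> citations divided by the mean of its (type, year, field) group."""
    groups = defaultdict(list)
    for p in papers:
        groups[(p["type"], p["year"], p["field"])].append(p["citations"])
    expected = {key: mean(counts) for key, counts in groups.items()}

    scores = {}
    for p in papers:
        baseline = expected[(p["type"], p["year"], p["field"])]
        # A group with no citations at all gives every paper a score of 0.
        scores[p["id"]] = p["citations"] / baseline if baseline else 0.0
    return scores

# Made-up sample: two genetics reviews and three mathematics articles from 2015.
papers = [
    {"id": "g1", "type": "review", "year": 2015, "field": "genetics", "citations": 80},
    {"id": "g2", "type": "review", "year": 2015, "field": "genetics", "citations": 40},
    {"id": "m1", "type": "article", "year": 2015, "field": "mathematics", "citations": 6},
    {"id": "m2", "type": "article", "year": 2015, "field": "mathematics", "citations": 2},
    {"id": "m3", "type": "article", "year": 2015, "field": "mathematics", "citations": 4},
]
scores = normalized_impact(papers)
print(scores)
```

In this sample the mathematics article with 6 citations ends up with a higher normalized score than the genetics review with 80, because each is measured against its own baseline.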

These rules don’t apply very well to the humanities, e.g. to art studies. There are a lot of negative citations, when someone criticizes their opponent but the latter still gets another citation. Plus, research papers in such fields get published less in general, so WoS doesn’t even calculate an impact factor for them. It’s the right thing to do, because when national evaluation systems try to evaluate the humanities the same way as physics, it only lowers the quality of the evaluation.

Overall, bibliometrics almost never works just by itself: without context and expert interpretation, bare numbers can do more harm than good. So, for us, discussions about whether expertise or numbers should be the main criterion don’t make sense. Both are important and should complement each other.

Another important indicator alongside publication activity is patenting activity. What can you say about the way it’s measured?

There’s a certain difference between publication and patenting activity data. For the latter, there are several factors that help us evaluate the innovative side of universities.

The first factor is the number of patents. However, the strictness of the examination should be taken into account. For example, at Rospatent there’s a patent champion who shall remain nameless, and they have more than 30,000 patent records, which means that if they’re younger than 90, they’ve been applying for a patent and getting one granted every single day since they were 18. We’ve seen the titles of their patents and trust me, these aren’t the kind of innovations that, say, ITMO University would be proud of. Worldwide patent leaders, however, are quite predictably companies like Samsung, Microsoft and Apple.
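The back-of-the-envelope arithmetic behind the “every single day” remark can be written out as follows. It uses only the figures quoted above and treats 30,000 as a lower bound; leap days are ignored.

```python
patents = 30_000                    # "more than 30,000 patent records"
years_active = 90 - 18              # from age 18 up to age 90
days_active = years_active * 365    # leap days ignored for simplicity

patents_per_day = patents / days_active
print(days_active, round(patents_per_day, 2))
```

So even over 72 years of uninterrupted work, the rate comes out at slightly more than one granted patent per day.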

The second factor is the ratio of granted patents to applications filed. Again, the strictness of the examination should be considered. This factor shows the quality of innovation, but most importantly, big companies try to increase the share of applications that are granted, because every application costs money, time and effort.

Finally, there’s another factor that can be called quadrilateral patenting. I think it’s similar to the number of publications in the best scientific journals. There are four most prominent patent offices: one in the USA, one in Europe, one in China and one in Japan. If your invention is granted patents by all of these organizations, it shows that the technology is competitive and potentially in demand. Sadly, it’s not a common thing among Russian universities: only four of them have at least one invention with such patents. This probably explains why our universities aren’t included in rankings of innovative universities.

Translated by Kseniia Tereshchenko