Artificial intelligence (AI) is superior to the human mind in many ways. Since the middle of the 20th century, machines have been able to calculate faster than mathematicians. In 1997, a computer defeated grandmaster Garry Kasparov at chess. In 2016, two inventors – Jay Flatland and Paul Rose – published a video in which their robot solves a Rubik’s cube in under one second. At the time, the best result achieved by a person was almost five seconds.

Today, computers are even able to write lyrics and compose music. In early 2020, a court ruled that a text written by AI has to be protected by copyright. Machines are almost perfect when it comes to logical tasks, mathematical analysis, data mining, and compiling new content from the mined data.

“Big neural networks are trained on enormous amounts of texts, images, or melodies, depending on the task, so that the connections inside the network learn to detect certain patterns,” explains Ilya Surov, senior research associate at ITMO University’s National Center for Cognitive Research. “By using them, the machine can compile new texts, images, or, for example, predict which word a person is going to type next in the search bar. However, it doesn’t work in all fields. Despite great computing abilities, computers can’t cope with complex tasks, the solution of which requires drawing non-trivial analogies and making a decision that isn’t similar to previously studied samples.”

From simple to complex

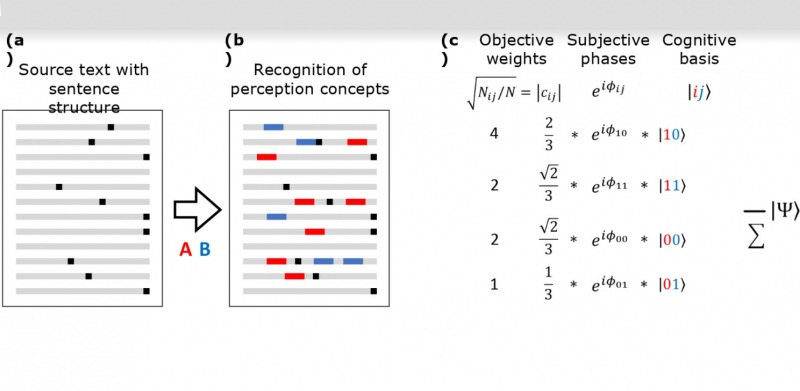

The research team from ITMO University together with their colleagues from Sweden, Italy, and France has created a model that analyzes text in a new way, not by comparing it with previously studied samples like a typical neural network does. “Our idea was to construct the meaning of a written text the way a human would, to imitate human comprehension of text,” says Ilya Surov, lead author of the study.

At first, researchers tried to teach the machine to look for connections between two terms mentioned in a text in the way people do.

“As a rule, in the world of science, to solve a complex task, you first need to solve a simpler one related to the same phenomenon,” says Ilya Surov. “That’s why we focused on the most basic act of text perception. In our case, it implies the detection of connections between two different words. For example, “sun” and “tree”, or “promotion” and “website”.”

Quantum neurophysiology of perception

To solve this task, the scientists abandoned regular neural networks and turned to quantum theory. Their model imitates the basic act of text perception, which is based on the excitation of neurons in the human brain. Just like an electromagnetic wave, this excitation has an amplitude and a phase. These quantities are hard to express in traditional binary code using bits, but they can be expressed with the quantum analogs of bits: qubits.

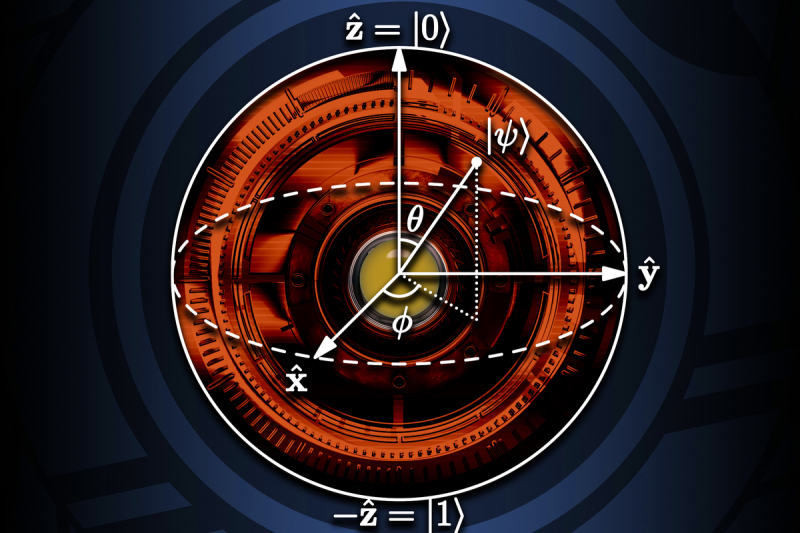

“Imagine a line with 0 and 1 written on its ends. That’s a bit,” explains Ilya Surov. “It only has two states: either 0 or 1. Now, imagine a sphere in which this line is a diameter that connects two poles: 1 stands for the north pole and 0 stands for the south pole. That’s a qubit. It can have many more states than just two. Its state can correspond to any point on the surface of this sphere. Latitude corresponds to the amplitude and longitude to the phase of neural excitation.”
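The sphere picture can be sketched in a few lines of Python. This is a minimal illustration using the standard textbook parametrization of a qubit by two angles, not the authors' code: the polar angle `theta` (the latitude-like coordinate) sets the amplitudes, and the azimuthal angle `phi` (the longitude-like coordinate) sets the phase. The function names `qubit_state` and `bloch_point` are hypothetical, and which pole is labeled 0 or 1 is only a convention; the sketch places state 0 at `theta = 0`.

```python
import cmath
import math


def qubit_state(theta, phi):
    """Return the two complex amplitudes (a0, a1) of a qubit.

    theta: polar angle in radians, 0 at the pole assigned to state 0.
    phi:   azimuthal angle in radians, the relative phase.
    """
    a0 = complex(math.cos(theta / 2))
    a1 = cmath.exp(1j * phi) * math.sin(theta / 2)
    return a0, a1


def bloch_point(state):
    """Map amplitudes (a0, a1) back to a point (x, y, z) on the unit sphere."""
    a0, a1 = state
    cross = a0.conjugate() * a1
    x = 2 * cross.real
    y = 2 * cross.imag
    z = abs(a0) ** 2 - abs(a1) ** 2
    return x, y, z


# A state on the equator: equal amplitudes, zero relative phase.
state = qubit_state(math.pi / 2, 0.0)
point = bloch_point(state)
```

Two real numbers are enough to fix the state, which is why such a model runs on an ordinary computer without any quantum hardware.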

Quantum algorithms in regular computing

Even though qubits are used in prototypes of quantum computing systems, a model of a qubit can be built on a regular computer, just as any laptop can be used to create a 3D model of the Earth or a football. You only need to enter a few numbers to set the state of this qubit model.

“Thanks to this, we can now use tools developed in quantum theory,” continues Ilya Surov. “For example, there’s a theory of quantum entanglement that doesn’t have parallels in regular computer science. It describes properties of the paired system that aren’t limited by the properties of its parts. For example, the meaning of the phrase “website promotion” doesn’t equal the sum of meanings of the words “website” and “promotion.” In our model, such a correlation connects two neural excitations in the process of text perception, and the entanglement stands for the semantic connection between two words. We take a text, create a two-qubit state of perception for a pair of words, and calculate the quantum entanglement for this state. The result describes the semantic connection between words in the text.”
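As a rough illustration of "calculating the quantum entanglement" of a two-qubit state, the sketch below computes the concurrence of a pure state, one standard entanglement measure. The article does not say which measure the authors use or how the amplitudes are derived from a text, so the measure, the function names, and the example amplitudes are all assumptions made for illustration.

```python
import math


def normalize(amps):
    """Scale four amplitudes so that their squared magnitudes sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amps))
    if norm == 0:
        raise ValueError("state vector must be non-zero")
    return [a / norm for a in amps]


def concurrence(amps):
    """Concurrence of a pure two-qubit state with amplitudes ordered 00, 01, 10, 11.

    The value is 0 for a product (unentangled) state and 1 for a
    maximally entangled state.
    """
    a00, a01, a10, a11 = normalize(amps)
    return 2 * abs(a00 * a11 - a01 * a10)


# Hypothetical amplitudes, chosen by hand rather than taken from any text.
product_state = [0.5, 0.5, 0.5, 0.5]   # factorizes into two single-qubit states
entangled_state = [1, 0, 0, 1]         # Bell-like state, not factorizable

c_product = concurrence(product_state)      # no correlation beyond the parts
c_entangled = concurrence(entangled_state)  # maximal correlation
```

In the article's terms, a value near zero would correspond to two words perceived independently, while a value near one would indicate a strong semantic connection that the individual words do not account for on their own.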

A step towards human-like intelligence

Such a system is able to understand the meaning of natural language and detect connections that are based not only on word usage frequency.

“The novelty of our model lies in the fact that when using it, you can receive a variety of possible solutions instead of just one particular result,” notes Ilya Surov. “This variety is determined by two key features of meaning. The first one is about objective statistics of word usage frequency that can be taken into account by any neural network. The second one is the human aspect in the creation of meaning – this is about things you can’t determine objectively. Any kind of text has a variety of possible interpretations and each specific meaning depends on the reader. One person can understand the same text in this way, and another one – in a different way. Our algorithm allows us to take that into consideration.”

According to Ilya Surov, the introduction of such categories is one of the first steps towards human-like artificial intelligence, which will appear only when a machine is able to detect the subjective components of meaning. Modeling human intelligence on the principles of quantum theory opens new horizons for building new kinds of data processing algorithms.

The study was published in the journal Scientific Reports.

Reference: Ilya A. Surov, E. Semenenko, A. V. Platonov, I. A. Bessmertny, F. Galofaro, Z. Toffano, A. Yu. Khrennikov & A. P. Alodjants. Quantum semantics of text perception. Scientific Reports, 2021. DOI: 10.1038/s41598-021-83490-9