You are currently working on several projects to assist people with disabilities. Tell us a bit more about them – what tasks do they solve?

My main project is OpovestIItel (portmanteau of Russian words for "announcer" and "AI" – Ed.). It’s an AI-based mobile app that analyzes sounds in the user’s environment and notifies them about potentially dangerous situations: alarms, sirens, sounds of disasters, etc. The app’s target audience is deaf and hard-of-hearing individuals, but it will also be useful for people without hearing disabilities: for instance, those who work in headphones and can't hear the surrounding sounds.

In order to detect danger, the app first removes unnecessary noise from the incoming audio using filters and then processes the result with an AI model that detects and classifies sounds of danger. Finally, a notification is sent to the user’s phone as a text, a sound, and a vibration pattern that is unique to each danger signal.
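(The three stages described above, filtering, classification, and notification, can be sketched roughly as follows. This is an editorial illustration under stated assumptions, not the app's code: the noise gate stands in for the real filters, `toy_classifier` stands in for the AI model, and the labels, thresholds, and vibration patterns are all hypothetical – Ed.)

```python
from dataclasses import dataclass

# Hypothetical vibration patterns (milliseconds, alternating on/off),
# one distinct pattern per danger class.
VIBRATION_PATTERNS = {
    "fire_alarm": (200, 100, 200, 100, 200),
    "siren": (600, 200, 600),
}


@dataclass
class Alert:
    label: str
    text: str
    vibration: tuple


def noise_gate(samples, threshold=0.05):
    """Stand-in for the filtering stage: silence samples below a threshold."""
    return [s if abs(s) >= threshold else 0.0 for s in samples]


def toy_classifier(samples):
    """Placeholder for the AI model: returns (label, confidence) from energy."""
    energy = sum(s * s for s in samples) / max(len(samples), 1)
    return ("siren", 0.9) if energy > 0.1 else ("background", 0.99)


def process_chunk(samples, classify, min_confidence=0.8):
    """Filter, classify, and build an alert; returns None if nothing dangerous."""
    cleaned = noise_gate(samples)
    label, confidence = classify(cleaned)
    if label not in VIBRATION_PATTERNS or confidence < min_confidence:
        return None
    text = f"Danger detected: {label.replace('_', ' ')}"
    return Alert(label, text, VIBRATION_PATTERNS[label])
```

Calling `process_chunk(chunk, toy_classifier)` on a loud chunk yields an `Alert` carrying the text and the vibration pattern for that class, while a quiet chunk yields `None`.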

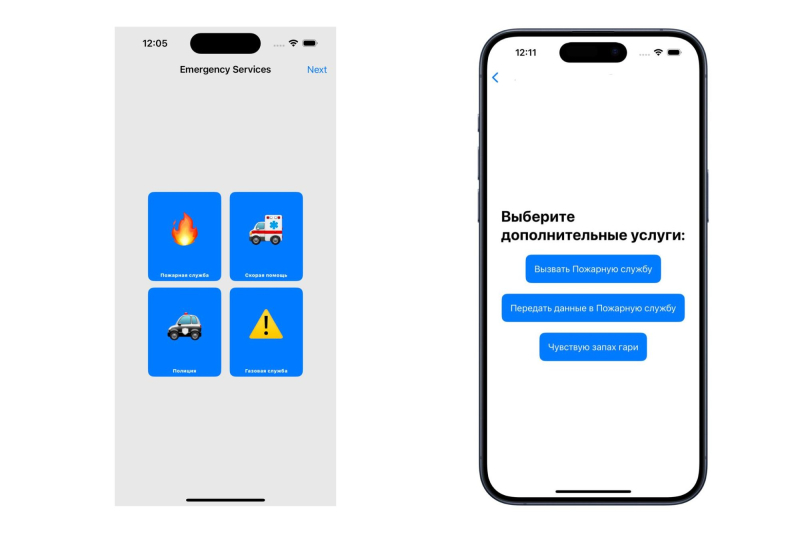

The next project is Quick-Alert. It is meant to help people who cannot reach out to emergency services on their own because of a disability (e.g., a hearing impairment). At first, I wanted to create a product that would be able to compile a written request and read it out to an emergency service using a speech synthesizer. Later, I realized that when a person isn’t feeling well, they don't have the time to type something up. That’s why I decided to develop a simpler solution that would be able to make the call in less than a minute.

The mobile app is based on context commands, with every element voiced over by a screen reader. Visually, it’s very simple: just a menu of buttons, each of them responsible for a particular service – the police, the fire service, the emergency gas service, and the ambulance. Once the user has chosen the service, they proceed to the second menu, where they need to choose a request; these steps repeat until the request is formulated with maximal precision. If the user wants to call an ambulance, they describe the circumstances: whether the pain is in the chest, head, stomach, etc. Next, they choose the details on that specific topic in another context menu: high blood pressure, internal bleeding, etc. Thanks to all these parameters, users can indicate exactly what happened in mere seconds. Finally, the request is supplemented with the person’s location (they can also indicate a precise address) and their Gosuslugi (a state-run digital platform that provides various services – Ed.) identifier, to prevent false alarms. The complete request is sent to the respective emergency service, where it is decoded by a specialist who then sends a response team.
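(The chain of context menus described above can be modeled as a tree that the user walks one choice at a time. The sketch below is an editorial illustration, not the app's code: the `MENU` layout, the pairing of details with circumstances, the function names, and the request fields are all assumptions – Ed.)

```python
# Hypothetical menu tree: each key is a button, each value is the next menu.
# An empty dict means there are no further choices at that point.
MENU = {
    "ambulance": {
        "pain in the chest": {"high blood pressure": {}},
        "pain in the stomach": {"internal bleeding": {}},
        "pain in the head": {},
    },
    "police": {},
    "fire service": {},
    "emergency gas service": {},
}


def options(path):
    """Return the buttons shown after the user has made the choices in `path`."""
    node = MENU
    for choice in path:
        if choice not in node:
            raise ValueError(f"unknown option: {choice!r}")
        node = node[choice]
    return sorted(node)


def build_request(path, location, user_id):
    """Assemble the final request once the user has finished choosing."""
    if not path:
        raise ValueError("choose a service first")
    options(path)  # raises ValueError if any step is not in the menu tree
    return {
        "service": path[0],
        "details": list(path[1:]),
        "location": location,
        "user_id": user_id,  # stands in for the Gosuslugi identifier
    }
```

Each tap appends one item to `path`, so a complete request takes only as many taps as the tree is deep.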

And the third project is Keypoint, a typing practice tool for the visually impaired. My team and I started developing it fairly recently, in October. The app teaches users to type quickly and with fewer errors. Even if the person cannot see, with the help of our app they will be able to memorize the positioning of keys and learn to type confidently without any restrictions. In the app, there are different modes: you can type words and sentences or random letters, symbols, and key combinations. Built into the service is a speech synthesizer that reads out the task (what to type) and the result (what was typed and whether it is correct).

OpovestIItel app. Image courtesy of the subject

What results have you managed to produce?

OpovestIItel is already in use at the Resource Educational and Methodological Center of the Northwestern Federal District of Cherepovets State University – there, anyone can download it, and 600 users have already done so. Moreover, the app has a team of 20 testers. Currently, the service is used not only by people with hearing loss but also by students without disabilities who work in headphones or are stationed at labs with sound insulation and restricted access – without the app, they wouldn’t be notified if something dangerous were to happen.

The second app is an MVP that has been tested by several people. Technically, it’s nearly ready for use, but we have yet to establish agreements with emergency services.

Keypoint is also an MVP: we are halfway to completing it, but there are still several tasks to solve. At this stage, we are working on the graphic interface. My fellow students with visual impairments participated in the first round of testing and offered their recommendations.

How did you start developing projects in this field?

Just like the target audiences of my apps, I, too, have a disability – I have ocular albinism, which means that my eyesight is bad. When I was a child, I experienced some difficulties and noticed how unfair it was. For instance, as early as school I noticed that some people don’t treat people with disabilities that well. Because of my eyesight, I couldn’t see what was written on the blackboard in class, so I took pictures of it and zoomed in to make it out. I got used to it and now I don’t need anything apart from my regular devices.

However, there are many socially unprotected people with disabilities in Russia, who are unprotected not only in terms of state support programs. To maintain a good quality of life, they require everyday technologies and digital solutions.

Did anyone help you with your projects?

I’m a solo developer, but the support center for people with disabilities of the Resource Educational and Methodological Center of the Northwestern Federal District helped me a great deal with my first concept. Specifically, they gave me the data I needed to develop my app, as well as a platform to test and present it, and offered some methodological recommendations for my second project.

As for the third one, it’s a joint project that I’m working on with my fellow students Aleksey Lyubimov and Daniil Zakharov, who are very familiar with such apps and hence know how to make them better. Another member of our team is Anastasiya Gorbacheva, a curator of the project Я делаю (I Do – Ed.) and a student of Peter the Great St. Petersburg Polytechnic University.

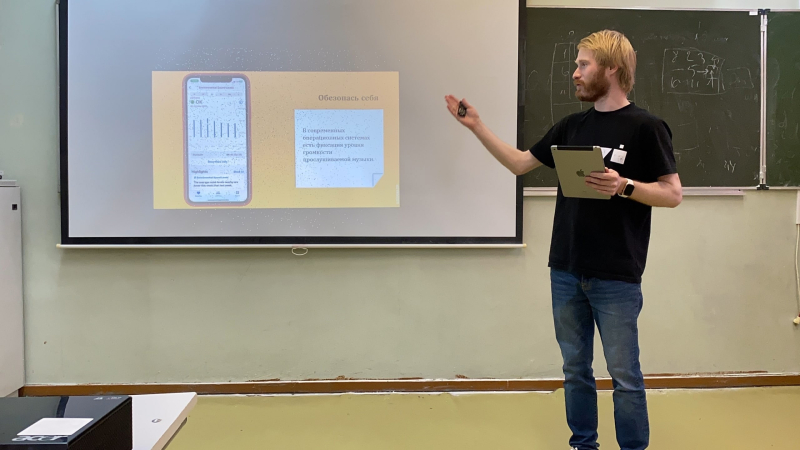

Dmitry presenting OpovestIItel. Photo courtesy of the subject

You’ve studied at Cherepovets State University but opted for ITMO University for your Master’s. Why?

My friends from ITMO once sent me a live presentation of the program Programming for the Visually Impaired, which piqued my curiosity. Such specializations, in my opinion, move inclusion forward. I applied to this program and a couple of others at ITMO and the Higher School of Economics in Moscow as well. In the end, I realized that it would be simpler to implement social initiatives within this specialization due to its socially oriented nature.

What does studying at the university offer you? Do you find any support for your ideas here?

The university is interested in and, more significantly, willing to help me with my third project. That being said, I wish more people were aware of my ideas and products; if so, finding developers would be a lot easier. ITMO is home to a range of different specialists, including cloud mobile technology majors and those who study Swift and Kotlin programming languages. It’d be nice if we could pool our resources to complete the iOS app and develop an Android version.

What are you going to do next?

I'm now working on OpovestIItel's second edition. For starters, I updated the app's filters, since I feel that an app responsible for one's life cannot make mistakes.

Another project in progress is a service that will assist a person in escaping from, say, a house fire. If approved, we will create a full-fledged program that will not only warn of but also help users get out of a life-threatening situation.

We're also trying to reach out to specialized bodies and services within the Ministry of Emergency Situations in order to standardize emergency warnings. Since institutions in different cities of our large country tend to have different alerts, it only makes sense to bring them to a uniform format, so that AI could identify the signals more efficiently.

Finally, we're working on adding time-frequency tags to all signals. This way, we’ll be able to rely on traditional classification algorithms, which will reduce battery usage and make the app suitable for simpler wearable devices: not the powerful Apple Watch, but rather fitness trackers like Mi Band.
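(To illustrate why a known time-frequency signature makes detection cheap: if a signal is tagged with the frequency it should contain, a device can test for just that frequency instead of running a full model. The interview does not say which algorithms the team uses; the sketch below uses the Goertzel algorithm as one well-known low-cost option, and the function names and the idea of a single-frequency tag are assumptions – Ed.)

```python
import math


def goertzel_power(samples, sample_rate, target_hz):
    """Squared magnitude of the DFT bin closest to target_hz (Goertzel)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)  # nearest DFT bin index
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def tone_fraction(samples, sample_rate, target_hz):
    """Share of the chunk's energy at target_hz, between 0 and 1."""
    energy = sum(x * x for x in samples)
    if energy == 0:
        return 0.0
    return goertzel_power(samples, sample_rate, target_hz) / (len(samples) / 2 * energy)


def matches_tag(samples, sample_rate, tag_hz, min_fraction=0.5):
    """True if the tagged frequency dominates the chunk (threshold is hypothetical)."""
    return tone_fraction(samples, sample_rate, tag_hz) >= min_fraction
```

The loop costs one multiplication and two additions per sample for each tagged frequency, which is the kind of workload a fitness tracker can handle.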

In the future, we hope to make our software available to all users. Meanwhile, I’m waiting for Apple to allow users to download apps from outside the App Store. Currently, I don’t have the opportunity to make it available there, so the app can only be installed via a signed public certificate.

First and foremost, Quick-Alert requires a web service and server to accept messages and deliver them to various centers based on the user's location. We also intend to get in touch with emergency services to finalize the context commands in our app and make them as clear as possible for their staff. Furthermore, we’re considering adding an offline mode, too, so that users could send their requests via SMS when they don’t have an internet connection.

As for Keypoint, we’d like to roll it out soon to educational institutions that have visually impaired students. We also want to make the app more inclusive, as well as analyze input statistics and generate teaching recommendations.

The main screen (left) and one of the context menus (right) of the app QuickAlert. Credit: QuickAlert app

What else, apart from additional team members, do you require to make it all happen?

At the moment, I fund all of my projects by myself, so apart from human resources I also need financial aid. In the near future, I hope to secure state funding to carry on with my ideas.

By the way, I also began another project this summer; it is an app that can identify medications using NFC tags. Here is how it works: we program the tag to have the name of a drug and create a tracker, so that when a person scans the tag, the service reads out the name of the medication and the user can use the “Take a Medication” feature. The app monitors medicine usage and alerts you when it's time to take a pill. I am planning to connect it with medical services, allowing for more precise drug and dose selection. The service will be of use for people with visual impairments or memory problems.
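(The scan, "Take a Medication", and reminder steps described above might fit together as in the sketch below. It is an editorial illustration only: the class and method names, the tag identifiers, and the fixed dosing interval are assumptions, and reading a real NFC tag is left out entirely – Ed.)

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Medication:
    name: str
    interval: timedelta  # hypothetical time between doses


@dataclass
class MedicationTracker:
    tags: dict = field(default_factory=dict)        # tag id -> Medication
    last_taken: dict = field(default_factory=dict)  # tag id -> datetime

    def program_tag(self, tag_id, name, interval_hours):
        """Associate a tag with a medication name and dosing interval."""
        self.tags[tag_id] = Medication(name, timedelta(hours=interval_hours))

    def scan(self, tag_id):
        """Return the name to be read out; raises KeyError for unknown tags."""
        return self.tags[tag_id].name

    def take(self, tag_id, when):
        """Record a dose (the "Take a Medication" action)."""
        self.scan(tag_id)  # reject tags that were never programmed
        self.last_taken[tag_id] = when

    def is_due(self, tag_id, now):
        """True if no dose is recorded yet or the interval has elapsed."""
        taken = self.last_taken.get(tag_id)
        return taken is None or now - taken >= self.tags[tag_id].interval
```

A reminder service would call `is_due` periodically and notify the user when it returns `True`.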

Most of all, I’d love to build a single ecosystem that combines the best of OpovestIItel, Quick-Alert, and the NFC tracker. Users will be able to choose the functions that they need, be it calling an ambulance, scanning a medication, or turning on monitoring of surrounding sounds. There could be other features, too, but I can’t do it alone.

Do you have a dream?

I had no idea where it would lead when I initially started. I didn't even realize that these projects would be in demand; I just assumed they would be interesting projects that would make it to GitHub and assist students with their lab assignments.

My current aspiration is to complete my projects, combine them into a single service, and see the first downloads in app stores. Then, I will know that I did the right thing and helped someone. It's fantastic to see your product benefiting others in addition to working efficiently. It’s probably one of the finest feelings for a person who has been working on it day and night.