Why mix AI and robotics

Robotics is an interdisciplinary field that brings together mechanics, electronics, and control systems. In order to plan a robot’s route and manage its movements, experts need to have a good command of mathematics and algorithms. Without correctly functioning optimization algorithms, a robot won’t be able to plan its movement trajectory and steps, especially if there are obstacles in its way.

A control algorithm is based on an accurate mathematical model of a robot, which describes its state, geometry, and dynamics. Using this model, the algorithm calculates the robot’s movements and interactions with the environment. In other words, the more precise the mathematical model is, the more efficient the actual robot will be.
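To make the link between model accuracy and performance concrete, here is a minimal sketch in Python. It is an illustration only, not a method described by the researchers: the single-link arm, the controller gains, and every numeric value are assumptions chosen for the example. The controller uses its own estimate of the link's mass (`model_mass`) to cancel gravity, while the simulated "real" arm uses `true_mass`.

```python
import math


def simulate(model_mass, true_mass=1.0, length=0.5, damping=0.1,
             target=math.pi / 4, dt=0.001, steps=5000):
    """Drive a single-link arm to `target` (rad) and return the final error.

    All parameters are hypothetical values picked for this illustration.
    """
    g = 9.81
    kp, kd = 50.0, 10.0                      # assumed PD feedback gains
    theta, omega = 0.0, 0.0                  # joint angle and angular velocity
    true_inertia = true_mass * length ** 2   # point mass at the end of the link

    for _ in range(steps):
        # Controller: PD feedback plus gravity compensation from the MODEL.
        gravity_estimate = model_mass * g * length * math.sin(theta)
        torque = kp * (target - theta) - kd * omega + gravity_estimate

        # Plant: the real link, governed by the TRUE mass.
        gravity_torque = true_mass * g * length * math.sin(theta)
        alpha = (torque - gravity_torque - damping * omega) / true_inertia

        # Semi-implicit Euler integration step.
        omega += alpha * dt
        theta += omega * dt

    return abs(target - theta)


if __name__ == "__main__":
    for estimate in (1.0, 0.9, 0.5):
        print(f"model mass {estimate:.1f} kg -> final error {simulate(estimate):.4f} rad")
```

In this toy setup, the arm settles on the target when the model matches the real mass and stops short of it when the mass is underestimated, with the gap growing as the estimate gets worse.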

To build an accurate mathematical model, it is essential to consider key physical parameters, such as mass, inertia, friction, drive power, and torque, as well as the performance metrics of drives and sensors. For a robot isolated from its environment, creating an accurate model is straightforward. However, the task becomes fundamentally more complex when the robot is required to physically interact with objects in its environment, especially when this environment is unknown.

“We can, for example, build an accurate model of a manipulator robot welding a car. It has a fixed base and a predetermined environment – we know what will happen to it. That’s why we can make accurate models of the robot itself and its surroundings, calculate the welding machine’s trajectory, and make the robot simply follow a precalculated trajectory. However, if we need a mobile robot, one that will walk or move on wheels in a city, or a kitchen manipulator robot, the conventional paradigm would require a model of its surroundings. The problem, though, is that we cannot create an accurate model for an undetermined, a priori unknown, and constantly changing environment,” says Prof. Ivan Borisov, a researcher at ITMO’s International Laboratory of Biomechatronics and Energy-Efficient Robotics (BE2R Lab).

AI technologies can be used to address the challenges of designing robots as systems. For this purpose, researchers turn to gradient and global optimization algorithms, as well as neural network approaches, including training on large datasets and reinforcement learning. Thanks to these methods, it’s possible to automate the design process (by delegating the filtering of solutions to algorithms), solve the task of motion policy synthesis, and enable robots to perceive their environment.

How AI is facilitating robot design

One robotics task that AI is already helping to solve is the design of entire robots or their individual parts, such as mechanisms or drives.

Previously, the quality of a design depended solely on the skills of its creator: if the same task was given to several engineers, they would all solve it differently. As a result, there would be various designs, none of them guaranteed to be optimal.

These days, this problem is being addressed through numerical and generative design. The main goal is to automate the design process and help specialists find optimal robot designs more quickly by exploring a far wider range of possible solutions than an engineer could check by hand. Numerical design can be based on optimization algorithms or on neural network approaches, such as reinforcement learning or supervised learning. It helps identify the optimal parameters to enhance a robot’s performance, while generative design helps create mechanical structures and optimize them.

Reinforcement learning has proven effective in industry. For example, Disney Research, in collaboration with ETH Zurich, used it to merge the animation of a droid from Star Wars with a physical model of a bipedal robot.

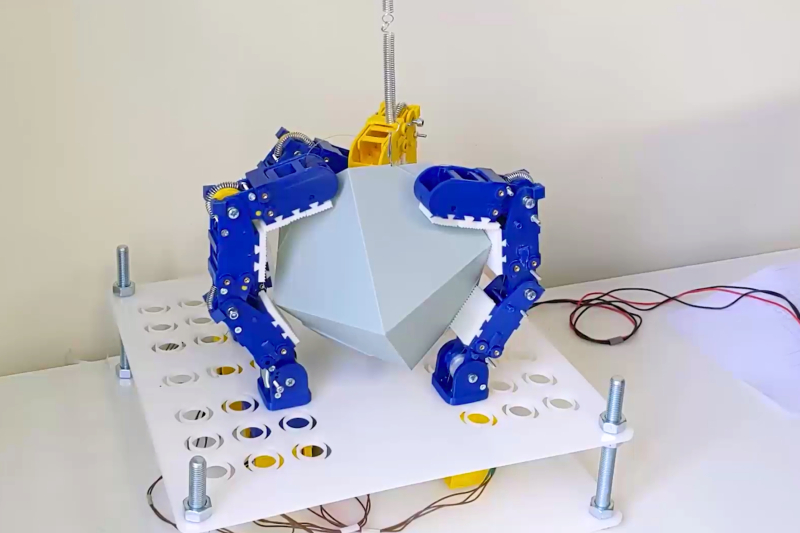

Researchers from the BE2R Lab have created the open-source repository Rostok, which makes it possible to use generative design to create single-drive adaptive grabbing tools. The technology balances the parameters set by an engineer against the robot’s design limitations and produces a wider variety of candidate solutions. This makes the design process quicker (compared to human performance) and mathematically justified. Each solution is tested in a simulator and graded on performance. The higher the grade, the more likely the algorithm is to recommend a particular solution to engineers.

Grabbing robot design. Photo courtesy of ITMO scientists
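The generate, simulate, and grade cycle described above can be sketched in a few lines of Python. This is not Rostok's API or its actual algorithm: the design parameters, their limits, and the `toy_grade` function (a stand-in for a real simulator run) are all hypothetical and serve only to show the shape of the loop.

```python
import random


def random_design(rng):
    """Sample one hypothetical gripper design within assumed engineer-set limits."""
    return {
        "fingers": rng.randint(2, 4),
        "links_per_finger": rng.randint(1, 3),
        "link_length_mm": rng.uniform(20.0, 60.0),
    }


def toy_grade(design, object_size_mm=80.0):
    """Stand-in for a simulator run (an assumption, not a physics model).

    Rewards finger reach that matches the object size and
    penalizes mechanical complexity.
    """
    reach = design["links_per_finger"] * design["link_length_mm"]
    fit = 1.0 / (1.0 + abs(reach - object_size_mm) / object_size_mm)
    complexity = design["fingers"] * design["links_per_finger"]
    return fit - 0.02 * complexity


def recommend(n_candidates=200, top_k=3, seed=0):
    """Generate candidates, grade each one, and return the best `top_k`."""
    rng = random.Random(seed)
    candidates = [random_design(rng) for _ in range(n_candidates)]
    graded = [(toy_grade(design), design) for design in candidates]
    graded.sort(key=lambda pair: pair[0], reverse=True)
    return graded[:top_k]


if __name__ == "__main__":
    for grade, design in recommend():
        print(f"grade {grade:.3f}: {design}")
```

A real pipeline would replace `toy_grade` with a physics simulation of the grasp and would typically search the design space more cleverly than by uniform random sampling.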

The researchers used a similar approach to improve their previous design – a mobile jumping robot. Earlier, the robot relied on bearings, making its structure more rigid and less mobile; during a fall, part of the impact energy damaged its solid links. Using genetic algorithms for global optimization, the scientists found that it would be better to use soft links instead of bearings. By absorbing the energy of a fall, such links protect the robot’s structure and allow part of the impact energy to be recovered. Thanks to this change, quadrupeds, anthropomorphic robots, and collaborative manipulators can be more energy-efficient, withstand shock loads, and adapt to uneven surfaces.

Both solutions were presented at the International Conference on Intelligent Robots and Systems (IROS 2024), a major conference that holds the A rank (one of the highest) in the CORE conference ranking. In 2024, the event was held in Abu Dhabi on October 14-18.

Jumping robot prototype. Photo courtesy of ITMO scientists
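For readers unfamiliar with the genetic algorithms mentioned in connection with the jumping robot, the following Python sketch shows the basic cycle of selection, crossover, and mutation. It is a generic textbook-style illustration, not the researchers' implementation: the two "genes" (a normalized stiffness and damping) and the `toy_cost` function with its optimum are assumptions made up for the example.

```python
import random


def toy_cost(genes):
    """Hypothetical cost with its minimum at stiffness=0.3, damping=0.7."""
    stiffness, damping = genes
    return (stiffness - 0.3) ** 2 + (damping - 0.7) ** 2


def genetic_search(cost, n_genes=2, pop_size=40, generations=60,
                   mutation_scale=0.05, seed=0):
    """Minimize `cost` over genes in [0, 1] with a simple genetic algorithm."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_genes)]
                  for _ in range(pop_size)]

    for _ in range(generations):
        # Selection: keep the best quarter of the population unchanged.
        population.sort(key=cost)
        parents = population[: pop_size // 4]

        children = []
        while len(parents) + len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            # Uniform crossover: each gene comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # Mutation: small Gaussian noise, clipped to the allowed range.
            child = [min(1.0, max(0.0, gene + rng.gauss(0.0, mutation_scale)))
                     for gene in child]
            children.append(child)

        population = parents + children

    return min(population, key=cost)


if __name__ == "__main__":
    best = genetic_search(toy_cost)
    print("best genes:", best, "cost:", toy_cost(best))
```

In a design setting, the genes would encode parameters of the mechanism, and the cost would come from simulating each candidate rather than from a closed-form function.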

AI for robotic vision

Computer vision is a key element in robotic design, as it helps robots build a 3D map of their surroundings and plan routes around obstacles. However, without AI, solutions to these tasks are too expensive, time-consuming, and inefficient.

In the 1980s, Prof. Hans Moravec from Carnegie Mellon University explained why vision is one of the hardest “capacities” for robots to acquire. It’s summarized in a paradox subsequently named after him: contrary to popular assumption, higher cognitive processes (such as playing chess or Go) require relatively few computational resources and are thus available to robots; at the same time, lower-level sensorimotor operations (vision, perception, and body coordination) require immense computational resources.

With AI, it’s possible to overcome Moravec’s paradox. For that, engineers first assemble a dataset (or take an existing one) in which images are labeled with features and categories: for example, some are cats, and some are humans. Neural networks are then trained on this dataset. To map out their surroundings and plan routes, robots use LiDARs, cameras, and other sensory technologies, while the trained networks help them “make sense” of the surrounding objects. If a robot encounters something unknown along the way, it might try to classify this object using similar images from its dataset.
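The idea of classifying an unfamiliar object by its similarity to labeled examples can be sketched as a nearest-neighbor lookup. The Python snippet below is a simplified illustration under stated assumptions: the three-number feature vectors, the labels, and the `min_similarity` threshold are invented for the example, and in practice the features would come from a trained neural network rather than being written by hand.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(features, labeled_examples, min_similarity=0.8):
    """Return the label of the most similar example, or "unknown" if none is close."""
    label, example = max(
        labeled_examples,
        key=lambda item: cosine_similarity(features, item[1]),
    )
    score = cosine_similarity(features, example)
    return (label if score >= min_similarity else "unknown"), score


# Hypothetical dataset: (label, feature vector) pairs.
DATASET = [
    ("cat", [0.9, 0.1, 0.2]),
    ("human", [0.1, 0.9, 0.3]),
]

if __name__ == "__main__":
    print(classify([0.8, 0.2, 0.1], DATASET))  # resembles the "cat" example
    print(classify([0.1, 0.1, 0.9], DATASET))  # unlike anything in the dataset
```

The threshold matters for a robot: treating a poorly matched object as "unknown" is often safer than forcing it into the nearest category.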

AI can also help robots navigate complex environments with mirrors or glass doors. Such surfaces reflect or transmit light, which means that robots can misjudge obstacles or the distances to them.

Sergey Kolyubin with the team of ITMO's Faculty of Control Systems and Robotics. Photo by ITMO.NEWS

Headed by Sergey Kolyubin, researchers from ITMO’s BE2R Lab have developed a 3D mapping system based on deep neural networks and the camera’s ego-motion (its movement relative to surrounding objects). Having tested the system in a space with glass partitions, the researchers concluded that it calculates distances to objects and creates 3D maps of spaces more accurately than other neural network-based solutions that rely on RGB-D cameras, which capture both the color of objects and the distance to them.