Tell us a bit about your company.

Orbi Prime was founded in 2016 and became an ITMO Technopark resident in January 2017. But we’re not limited to Russia: our company is partially based in the US and receives funding from Kazakhstani investors.

Our engineering and development departments are here in Russia, while our CEO is in Silicon Valley, and our primary focus is the US market. The tech division at Orbi Prime mostly develops software that stitches together the panoramic video recorded by the glasses, then stabilizes and processes the final footage. Our devices are assembled in China, and the hardware was developed in collaboration with a Taiwanese company.

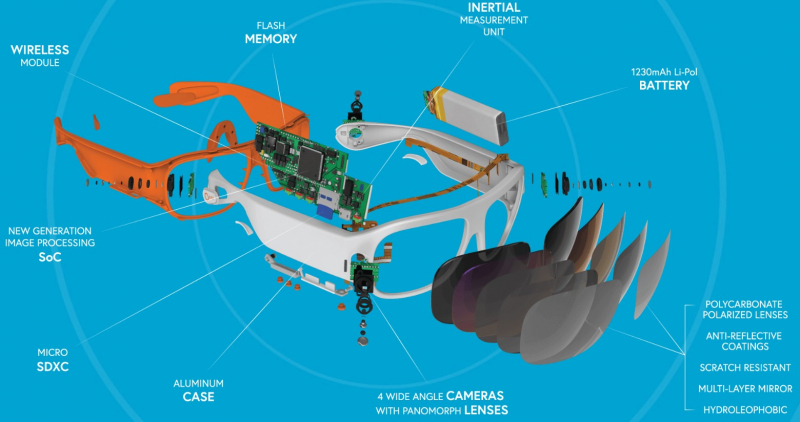

Our first product was a pair of sunglasses with four built-in cameras that record 360-degree video. It was in development for two years and became ready for mass production only a year ago. The first batch has already been produced, and we’re currently waiting on the second.

The second product is also a 360-degree camera, so we’re not straying from our lane. It’s a headset designed to record and, importantly, stream 360-degree video. We’re currently working on a helmet for American football players, but the general idea is a headset that could be used in any sport.

Here our focus is on higher video quality: 8K resolution at 60 frames per second. The goal is to produce video that could be broadcast live straight from the stadium.

How was your trip to CES this year? What was your takeaway?

This was our third time at CES. The first time, we came as a startup and entered the CES Innovation Awards in two categories, Wearable and VR/AR. We won in both, which was great because it let us take part in media day, an event held a day before the show opens that is open only to journalists. Getting in there can be quite expensive. Our product was displayed alongside the other nominees; it drew journalists’ attention, and we gave several interviews.

Then we set up shop in Eureka Park, a special pavilion reserved for startups. None of the products displayed there are on the market yet. It always attracts a great deal of interest from visitors, investors, and journalists.

You can only exhibit at Eureka Park once, which is why in the following years we showcased our work in the main pavilion alongside well-established companies. A small business like ours usually has a tough time there.

This year, we were placed far from the main crowd. We thought that was bad luck until it turned out to filter out random visitors: the people who came to us were already familiar with our product, and they were the ones we can collaborate with and achieve solid results.

Did you sign any deals?

It should be clarified that CES is not a trade fair; it exists purely as a place to demonstrate technologies and network. Our headset is designed for livestreaming, and the only way to stream video at such a high resolution is over 5G. Wi-Fi and other data transfer methods are insufficient.

Our product has 5G right in its name, and this technology is at the peak of its popularity right now. That one word in the name has attracted the attention of potential partners, including mobile carriers and manufacturers such as Huawei and OnePlus. They’re all looking for ways to take advantage of what this technology offers. Even now that CES is over, we’re still in touch with these companies.

When do you expect your product to hit the market?

It’s difficult to say when we’ll finish development. The issue is not so much technological (though there are some challenges there as well) as it is about connections: our main goal is to work directly with the NFL (National Football League). Broadcasting rights to NFL games are expensive, and any new piece of technology can bring the networks a lot of profit. The challenge is that the league is very conservative and reluctant to adopt innovative solutions. Nevertheless, our end goal is to work with them, as this is the most likely way to monetize our invention.

For now, we’ve decided to create a simplified version of the headset using the hardware from our glasses. That hardware already exists, so we would only need to integrate it, and it’s also cheaper and more compact. However, it won’t work for our original idea, so we’ll still need to build the helmets from scratch. This means we’ll need the support of American football helmet manufacturers, and there are only three or four such companies worldwide, all located in the US.

We’d also need them to agree to develop a helmet designed around our hardware, which would require a lot of engineering effort on their part, and this is far from the most high-tech industry. First, we have to convince them that this technology has real potential.

We’ll also need a team willing to use these helmets during games, permission from the NFL, and telecommunication companies willing to broadcast the content. The telecom companies are actually showing the most interest, as they are on the lookout for more immersive technologies. In short, we need to bring all these parties together, convince everyone that it’s a great idea, and have them promote it jointly.

Right now, we’re working on this simplified headset in collaboration with an American startup that manufactures sporting gear. Because of its lower video quality, it won’t be used for broadcasting but for training. It will let coaches watch the game through the players’ eyes in real time, correct mistakes, analyze the game, and give commands to players.

After CES, we also visited an American football exhibition held at a coaching convention. There are many school and university teams in the US, and they’re all very intrigued by our invention. That’s how we’ll make our entrance into the market.

Can you tell us more about the livestreaming feature and the way it’s done?

We’ve developed the software; it’s still at the prototype stage but has already been showcased at CES. It stitches and stabilizes the video in real time. We’re also working on a neural network-based algorithm that would pick the optimal camera angle, essentially doing the job of a camera operator. It will analyze the positions of the ball and the players to figure out where most of the action is happening. The output is a regular, non-panoramic video, which means it can be used for broadcasting. The algorithm also analyzes frames and picks the most compelling ones. That’s just one of the ways the video produced by these headsets can be used.
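The interview doesn’t describe how the angle-picking network works. As a much simpler stand-in (a hand-written heuristic, not a neural network), the sketch below illustrates the underlying idea of choosing where to point a regular camera inside a 360-degree frame based on where the ball and players are. It assumes that object detection has already happened and that each detection arrives as a horizontal direction (yaw, in degrees) plus an importance weight. The names `Detection` and `best_view_yaw`, the 90-degree field of view, and the weighting scheme are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """A detected object in the panoramic frame (hypothetical input format)."""
    yaw_deg: float  # horizontal direction in the 360-degree frame, 0 to 360
    weight: float   # importance, e.g. the ball weighted above players (assumed)


def angular_diff(a: float, b: float) -> float:
    """Signed smallest difference a - b in degrees, in the range [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def best_view_yaw(detections: list[Detection], fov_deg: float = 90.0,
                  step_deg: float = 1.0) -> float:
    """Pick the viewing direction whose fov_deg-wide window covers the most weight,
    then center the view on the weighted circular mean of the detections inside it."""
    if not detections:
        return 0.0
    half = fov_deg / 2.0
    best_center, best_score = 0.0, -1.0
    # Brute-force scan of candidate view centers around the full circle.
    for i in range(int(360.0 / step_deg)):
        center = i * step_deg
        score = sum(d.weight for d in detections
                    if abs(angular_diff(d.yaw_deg, center)) <= half)
        if score > best_score:
            best_center, best_score = center, score
    inside = [d for d in detections
              if abs(angular_diff(d.yaw_deg, best_center)) <= half]
    # A weighted circular mean handles the wrap-around between 359 and 0 degrees.
    s = sum(d.weight * math.sin(math.radians(d.yaw_deg)) for d in inside)
    c = sum(d.weight * math.cos(math.radians(d.yaw_deg)) for d in inside)
    return math.degrees(math.atan2(s, c)) % 360.0
```

For example, with the ball at 350 degrees (weight 3), two players at 10 and 20 degrees, and one player far away at 180 degrees, `best_view_yaw` returns a direction just under 360 degrees (that is, close to 0), framing the ball and the two nearby players. A learned model, as described in the interview, would replace the fixed weights and the brute-force scan with predictions from the video itself.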

But we can also offer an entirely new way of broadcasting sports: in first-person view through a VR headset, with the ability to switch between players. That’s a fascinating way to watch a game, and it should appeal to younger viewers who no longer watch TV. Being tackled by a 120-kilogram football player from a first-person view would be a memorable experience, I’m sure you’ll agree.