In the past several years, machine learning has become a very popular technology with a wide range of applications, including such hyped-up apps as Prisma, FaceApp and others. In your lecture, you gave examples showing that these technologies can also be applied in fields such as metallurgy. What are the chances that machine learning will soon bring real results in more traditional fields: industry, medicine, public services and the like? What is holding this process back?

There are two types of issues here. The first are the “objective” issues that stem from the very nature of machine learning. To “teach” a machine, you need large amounts of data. This is why we can’t yet use it in fields where we have not accumulated enough data, and varied data at that: information on failures as well as on successful cases. Only once we have enough data can we introduce machine learning; at least, that’s how it works in theory.

I can give you a simple example from medicine: in most cases, cardiograms are still stored as printouts rather than files. Also, in medicine one has to take long-term effects into account. Unless you have complete information (the cardiogram, the treatment, and the long-term effects: whether the person got better or worse, and whether he or she returned to an active lifestyle), machine learning simply won’t work. And accumulating that much data can take years.
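To make the completeness requirement concrete, here is a minimal sketch, assuming each patient is represented by one record with three fields (the `PatientRecord` class and its field names are hypothetical, not taken from any real medical system). A record can serve as a training example only if the cardiogram is digitized and both the treatment and the long-term outcome are known.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PatientRecord:
    # Hypothetical fields; None marks information that is missing.
    cardiogram_file: Optional[str]    # None if the ECG exists only on paper
    treatment: Optional[str]
    long_term_outcome: Optional[str]  # e.g. "improved"; None if not yet known

    def is_complete(self) -> bool:
        # Usable for training only when none of the three parts is missing.
        return None not in (self.cardiogram_file, self.treatment, self.long_term_outcome)


records = [
    PatientRecord("ecg_001.xml", "beta blockers", "improved"),
    PatientRecord(None, "surgery", "improved"),          # paper printout only
    PatientRecord("ecg_003.xml", "beta blockers", None),  # outcome not known yet
]

training_set = [r for r in records if r.is_complete()]
print(len(training_set))  # 1
```

Even in this toy dataset, two of the three records are unusable, one because of a paper printout and one because the outcome has not been observed yet.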

There are also subjective factors. Here we are dealing with a psychological problem: even when we have the data to “teach” the machine, there’s the question of whether we are ready for it. Nowadays there’s a new term that’s almost as popular as “machine learning”: “digital transformation”. This is also a very important area, even though it attracts much less attention.

Ideally, digital transformation is about how an organization’s management and structure should be arranged in the modern digital era. Much of it has to do with algorithms now being capable of making some important decisions. Then again, just imagine this simple situation I gave the students as an example: you’ve created a machine learning system and proved that it works great, and then your boss tells you: “Let’s see, your machine may only choose from a list of decisions I’ve personally approved”. So what was the point of creating the system? It was just a waste of money on training it, on hardware, programmers and so on. And all of that because of an organization’s purely managerial procedures.

Still, such rejection of technology is not just a problem of stereotypically close-minded chief executives with decades of work experience; it also seems to affect many young people.

Yes, it is definitely so.

Why? What’s the root of this problem?

Well, there are lots of myths surrounding digital transformation, including scary ones. I don’t mean to be too hard on the media, journalists and the like, but regrettably, it is because of them that every machine error is immediately turned into a world-shaking event.

Here I’d like to refer to my discussion with Kahneman (Daniel Kahneman, a psychologist who nonetheless received the 2002 Nobel Memorial Prize in Economic Sciences for his work on the psychology of judgment and decision-making, as well as behavioral economics -- Ed.), which took place several weeks ago.

We were discussing the state of machine intelligence, and we discussed the following example of a cognitive distortion. As a seasoned psychologist, he believes that those who protest most against using machines to make important decisions are actually turning them into gods. These people make unreasonable demands: they expect absolute accuracy and ideal solutions, because machines are like deities to them. They believe machines will quickly and faultlessly solve problems that we’ve been trying to solve for decades; yet that is not how it works.

What about the introduction of voice assistants? Can they make technology seem more “human”, so that people trust it more? Alisa (a voice assistant by Yandex -- Ed.), for instance, has already made great progress in this regard.

Alisa, by the way, currently has two modes of operation that should eventually merge into one. The first is a “chatterbox” mode, in which she’s not very good at answering search requests and mostly goofs off. If you get tired of that, you can just tell her “Alisa, stop and search Yandex”. She then switches to search mode, but becomes boring to talk to, focusing on getting you links or reading you Wikipedia articles. In the future, these two modes are to be combined so that you won’t really notice the difference.

As for your question: yes, it helps. And though it may sound pretentious, Alisa is the collective unconscious of Runet. She really did learn from hundreds of thousands of dialogs by Internet users. We often get complaints about Alisa talking back or being insolent. Well, that is the manner of speaking accepted throughout the Internet.

Another task for the future is to make machines understand not just words but also context and meaning. When can we expect the first results in this field?

Accomplishing this will take years, and we’ll be doing it step by step. Even with Alisa, it is really hard to make her keep track of context. She can sustain a conversation, but she still can’t “rewind” and pay attention to what was said some ten turns back. For instance, if some time ago you told her “Alisa, find my husband” and then started talking about fishing, the next time you ask her something about your husband she will just give you an arbitrary response, reacting only to that particular question. She’ll understand that the topic has changed, but she won’t remember what was mentioned before. As of today, this is purely a computational issue: we just don’t have the physical resources to solve it.
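The limitation described above can be illustrated with a toy model, assuming a dialog system that keeps only a fixed number of recent turns. The interview does not say how Alisa actually stores context; the `WindowedDialog` class and the window size of 5 are purely hypothetical.

```python
from collections import deque


class WindowedDialog:
    """Toy dialog memory (hypothetical) that keeps only the last `max_turns` utterances."""

    def __init__(self, max_turns: int):
        # A deque with maxlen silently drops the oldest turn when a new one arrives.
        self.history = deque(maxlen=max_turns)

    def add(self, utterance: str) -> None:
        self.history.append(utterance)

    def remembers(self, keyword: str) -> bool:
        # True if any turn still inside the window mentions the keyword.
        return any(keyword in turn for turn in self.history)


dialog = WindowedDialog(max_turns=5)  # hypothetical window size
dialog.add("Alisa, find my husband")
for i in range(10):
    dialog.add(f"fishing question {i}")

print(dialog.remembers("husband"))  # False: the mention has scrolled out of the window
print(dialog.remembers("fishing"))  # True
```

In this toy model, remembering more simply means a larger window, and processing more context generally costs more computation, which matches the answer’s point that the obstacle is computational resources.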

At the same time, people often send us complaints like this one: “We’ve found out that Alisa speaks to everyone in the same manner, why’s that? Why can’t she learn a bit more and find out what I’m actually interested in?” Well, making 50 million custom Alisas, one for each user, is still too complex a task, and also very expensive. We need more computing capacity and better algorithms. So we are focusing on creating one good Alisa that everyone will like. And we already know how to accomplish this in a year or two. Still, I don’t see a way to develop a custom, user-specific Alisa within two years. That is a much bigger task.

Nowadays, neural networks are like black boxes: specialists feed in massive amounts of data and get results they often can’t explain.

Well, with time, they are going to become even more complex.

In most cases, it is this opacity that makes people distrust the technology, especially in fields like medicine. Is it really impossible to explain how it works?

Unfortunately, this too is a psychological issue. It’s not even neural networks that I’ll be explaining now, but how requests are represented in any machine learning system. That is something even more trivial than recognizing medical images.

At the press conference dedicated to the launch of Korolev (a new Yandex search algorithm -- Ed.), we mentioned that we associate a 300-dimensional semantic vector with every request. And here’s the problem: one can easily explain what a 2D or 3D vector is even to a schoolchild. Yet no person can understand or even imagine a five-dimensional space. And here we’re saying that the algorithm translates the meaning of a request into a 300-dimensional space of meanings. And none of the axes corresponds to a quantity that has a name in human language.

Surely, we could put in a lot of effort, perhaps a year of work, and explain why the machine gave a particular result for a particular request. Still, the human brain cannot conceptualize an object in a 300-dimensional space.
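To give a feel for what “a 300-dimensional semantic vector” means in practice, here is a minimal sketch. The interview states only the dimensionality; the assumption that requests are compared by cosine similarity is a common convention, not something confirmed for Korolev, and the vectors below are random numbers standing in for the output of a trained model.

```python
import math
import random

DIM = 300  # dimensionality mentioned in the interview; everything else is illustrative


def cosine_similarity(a, b):
    # Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def random_vector(rng, dim=DIM):
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


rng = random.Random(0)
# Stand-ins for learned request vectors (a real system would get them from a model).
query = random_vector(rng)
paraphrase = [x + rng.gauss(0.0, 0.3) for x in query]  # a slightly "reworded" request
unrelated = random_vector(rng)

print(len(query))                                      # 300 coordinates, none with a human name
print(round(cosine_similarity(query, paraphrase), 2))  # close to 1: nearly the same direction
print(round(cosine_similarity(query, unrelated), 2))   # close to 0: random vectors are nearly orthogonal
```

Similarity between two such vectors is easy to compute, but no single coordinate of `query` means anything on its own, which is exactly why the result is hard to explain in human terms.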

At the lecture, I asked the audience whether they really understand how the wave function of an electron works, even though it is what makes their smartphones operate. Very few do; the rest are fine with not knowing as long as it works. In that sense, it is a “black box” as well, much like neural networks.

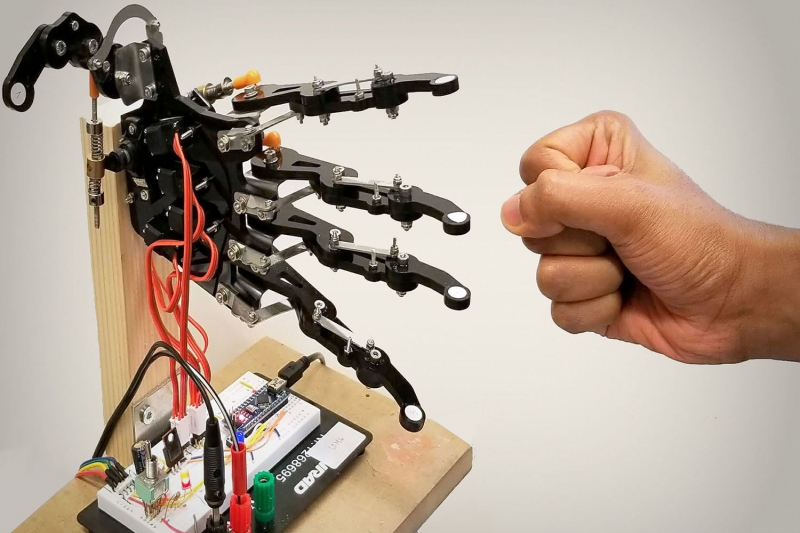

Recently in Davos, people discussed the issue of robots replacing millions of human workers. For instance, McKinsey forecasts that by 2030, up to 800 million people may lose their jobs (mostly manual and service workers). How can one explain to them that this is a natural process, and motivate them to retrain?

I guess it’s best to use examples. Take one boring profession, call center operator: many organizations, including us, began replacing operators with robots long ago. Still, even here one often runs into rejection, as many users still ask Elena (Megafon’s robot consultant based on Yandex technology -- Ed.) to put them through to a human operator. Yet I think everyone will agree that being a call center operator is not a very creative or inspiring job. I’ve never met anyone whose childhood dream was to become one.

Such professions will be replaced by new ones. For instance, call center operators may become drone operators. And this new profession may well turn out to be far more exciting. Also, controlling drones does not require any special skills, and retraining will take only a couple of months. So one should expect new professions to appear that will be a lot more interesting than the old ones.

The recent “It’s Your Call!” winter school, part of the “I am a professional” competition, gathered many talented students who did well in the Information Technologies track. Which skills do these future programmers need to be in demand at large high-tech companies in the coming years?

Recently, one of the most relevant employers, Microsoft, summed up these requirements. About a month ago, the company released a book on what is required of modern specialists.

The book states that companies will be increasingly motivated to look for employees who have, in addition to their main specialty, a second one that has nothing to do with it. In America, there are two terms for this, “major” and “minor”: you may well major in programming and yet take a special course and spend three years earning a minor in Art History.

People at Microsoft believe that such employees will become the most needed specialists. Their main advantages are that they can think outside the box and have good communication skills. This works both ways: non-technical specialists, HR specialists for instance, have to be well-versed in more than just their main specialty, for example by having a minor in Computer Science. Sure, that won’t make them high-class programmers, but that won’t be necessary; what matters is that they will know how to work in this field.

I believe this approach will be adopted in many fields. Even a very capable and talented programmer won’t be much good for serious tasks if his or her communication skills are so poor that it’s almost impossible to talk to them. Serious tasks often require good teamwork, which depends on psychology. If the team says something like “Listen, we’d better do everything ourselves, just take this one off the team; he may be great at coding, but he’s unpleasant to everyone else”, that is definitely something to be concerned about. So soft skills matter too: communication skills, emotional intelligence and so on. If you don’t have them, your colleagues will eventually hate working with you.

This is why today, training in non-technical subjects has become essential for a programmer’s career. Here’s a simple way to see it: if there’s a good programmer who also plays the guitar well, you will like him more than someone who’s a good programmer and nothing else. I guess everyone knows someone like that.

All of that calls for new approaches in the education system, which is often quite tradition-bound. What should be done first?

As I’ve already said, there’s no point in trying to train ideal programmers, engineers or whatever you believed to be best in the past. That is the common mistake of “I wanted to be like that, but I failed, so let’s train the next generation so that they won’t repeat my mistakes”. Well, the next generation is not like you; they live in a completely different world. And that has to be taken into account in all aspects of education, most importantly everything that has to do with schoolchildren.

I’ve recently spoken with psychologist colleagues from the Higher School of Economics and some really well-trained teachers. By the end of our discussion, we agreed on one idea: the fundamental problem is that most parents want their children to become improved copies of themselves, so that they get everything their parents didn’t. With the best of intentions, they want the next generation to learn from their mistakes and achieve everything they failed to. Still, none of that is relevant any more. We need to restructure education to meet modern requirements. And we have to keep in mind that today’s requirements are very different from those of thirty years ago.

You can watch Andrey Sebrant’s lecture in Russian here.