Contents:

- What is an optical computer?

- How does it work?

- Why should it replace the regular computer?

- It’s said that optical computers use up less energy, but just how much?

- If they’re so efficient, why haven’t we started making them yet?

- Isn’t it easier to just keep upgrading conventional computers?

What is an optical computer?

An optical computer is a device (still more hypothetical than real) that uses photons instead of electrons to carry units of data (bits). In a conventional computer, a bit is represented by electric charge: if the current passing through a contact element exceeds a certain threshold, it counts as “one”; if it stays below the threshold, it counts as “zero.” Replace the electrons with photons and the current with the strength of an electromagnetic field, and you get the classic optical computer.
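The threshold rule described above can be illustrated with a small sketch. The function name `decode_bits`, the sample values, and the threshold of 0.5 (in arbitrary units) are all assumptions made for illustration; a real detector would also have to handle noise and timing.

```python
def decode_bits(levels, threshold):
    """Map measured signal levels to bits: at or above the threshold is 1, below is 0."""
    return [1 if level >= threshold else 0 for level in levels]


# Hypothetical measured levels (current or field strength, arbitrary units).
samples = [0.05, 0.92, 0.88, 0.10, 0.47, 0.61]

print(decode_bits(samples, threshold=0.5))  # [0, 1, 1, 0, 0, 1]
```

The same rule applies whether the measured quantity is an electric current or the strength of an optical field; only the physical carrier changes.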

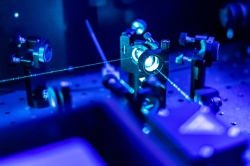

How does it work?

The optical chip’s input port is a set of optical waveguides. Through these waveguides, the device receives optical signals that may be interpreted as zeros and ones (if we’re dealing with a digital optical computer) or as a continuous function of time and position (in that case, the system is referred to as an analog optical chip). Then, the signal is converted by the optical circuit with the help of various optical elements, such as resonators or waveguides. The converted signal is directed to the output port where, once again, it can be represented by an array of zeros and ones or a continuous function.

Why should it replace the regular computer?

There are a number of fields in which using optical computers would be justified even now, though there is probably no point in expecting them to replace the electronic devices we’re so familiar with in the near future. Their main benefit is that photons are an excellent carrier of data. Optical signals can travel through fiber over great distances with very little fading or distortion. Moreover, photons in the optical band offer great bandwidth, a measure of how many bits of data can be packed into a pulse of a given length. That’s why we already use fiber-optic cables to transfer data over great distances. But for now, data carried over optical fiber is still processed by electronic devices. Converting signals from optical to electronic and back again wastes time and energy. It would therefore be great to remove that stage and simply process optical signals with optical methods. There are already systems partially capable of doing that, but we’re still far from creating a full-fledged optical computer.

It’s said that optical computers use up less energy, but just how much?

Regular electronic devices run on electric current, which produces heat. That heat is energy that goes to waste. To keep devices from overheating, we spend even more energy on cooling them. As a result, major data centers consumed about 200 terawatt-hours of electricity in 2018, which is approximately 1% of the world’s electricity consumption, and that number is growing fast. Photons carry no charge, so there is no current and thus practically no resistive heating. That’s why at least some computational tasks could be done at practically no cost, at least in terms of energy usage. This concerns the so-called linear operations: addition, subtraction, differentiation, integration, and so on. Today’s optical chips are designed specifically for these operations because they can perform them faster and more cheaply than electronic ones.
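To make “linear operation” concrete, here is a minimal numerical sketch. An operation is linear if applying it to a weighted sum of two signals gives the same result as the weighted sum of the individual results. The helper names (`derivative`, `is_linear`), the sample signals, and the use of squaring as a nonlinear counterexample are assumptions chosen for illustration; this is ordinary Python, not a model of an optical chip.

```python
def derivative(samples, dt):
    """Forward-difference approximation of the time derivative of a sampled signal."""
    return [(b - a) / dt for a, b in zip(samples, samples[1:])]


def is_linear(op, x, y, a, b, tol=1e-9):
    """Check superposition: op(a*x + b*y) equals a*op(x) + b*op(y) within tol."""
    combined = op([a * xi + b * yi for xi, yi in zip(x, y)])
    separate = [a * u + b * v for u, v in zip(op(x), op(y))]
    return all(abs(c - s) <= tol for c, s in zip(combined, separate))


def diff(signal):
    """Differentiation with a hypothetical sampling step of 0.5."""
    return derivative(signal, dt=0.5)


def square(signal):
    """Element-wise squaring, used here as an example of a nonlinear operation."""
    return [v * v for v in signal]


# Two hypothetical sampled signals.
x = [0.0, 1.0, 4.0, 9.0]
y = [1.0, 0.5, 0.25, 0.125]

print(is_linear(diff, x, y, 2.0, -3.0))    # True
print(is_linear(square, x, y, 2.0, -3.0))  # False
```

Differentiation passes the superposition test, while squaring does not, which is the distinction the term “linear” draws.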

If they’re so efficient, why haven’t we started making them yet?

There are still some issues to resolve. First of all, nonlinear operations (such as the logical AND/OR operations that are common in software) require photons to interact with each other. Uncharged particles barely interact, so these processes usually require higher light intensity, which in turn produces heat and increases energy waste. Secondly, photons never stay put, which makes it difficult to create devices that could store optical data for even a short time (even a microsecond would be nice, but there are almost no such devices). We therefore need fast, energy-efficient interfaces that connect photonic systems to storage devices (magnetic or electronic ones). Another issue is miniaturization. A typical electronic transistor is around 10 nm in size. That makes it possible to pack billions of transistors onto a chip about one centimeter across, and that’s why electronic computers are so efficient. The size of optical elements is determined by the wavelength of the photons, which is about one micrometer. Optical transistors, naturally, can’t be as compact as electronic ones, which further narrows their range of applications. Even so, optical chips are already widely used to process data within fiber-optic networks and for other niche tasks where their superiority over electronic ones is obvious.
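The size argument can be checked with back-of-the-envelope arithmetic. The sketch below assumes idealized square elements packed edge to edge on a 1 cm × 1 cm chip, with no wiring or spacing; that packing model and the function name `max_elements` are assumptions, so the results are upper bounds rather than real transistor counts.

```python
CHIP_SIDE_NM = 10_000_000   # 1 cm expressed in nanometers
TRANSISTOR_SIZE_NM = 10     # electronic transistor size from the text
OPTICAL_ELEMENT_NM = 1_000  # about one micrometer, set by the photon wavelength


def max_elements(chip_side_nm, element_size_nm):
    """Idealized count of square elements that fit on a square chip."""
    per_side = chip_side_nm // element_size_nm
    return per_side * per_side


electronic = max_elements(CHIP_SIDE_NM, TRANSISTOR_SIZE_NM)
optical = max_elements(CHIP_SIDE_NM, OPTICAL_ELEMENT_NM)

print(electronic)             # 1000000000000
print(optical)                # 100000000
print(electronic // optical)  # 10000
```

Under these assumptions, a hundredfold difference in linear size becomes a ten-thousandfold difference in how many elements fit on the same area.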

Isn’t it easier to just keep upgrading conventional computers?

For the past 20 years, people have been predicting the end of Moore’s Law, which states that the number of transistors on an integrated circuit doubles roughly every two years, but it still holds. So, for now, improving electronic technologies is still more profitable; but with every year, it’s becoming more difficult because transistors keep shrinking. At a certain scale, electrons begin to behave like waves and “tunnel” through transistor gates, disrupting the entire process. Nevertheless, engineers have spent two decades somehow getting by, making transistors smaller and more efficient. But at some point we’ll need to stop. That’s when our knowledge of optical computers will come in handy. For now, optical chips will develop as niche solutions for specialized tasks, which, however, does not make them any less important.
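For a sense of scale, the doubling rule can be turned into a one-line calculation. The function name `moore_growth_factor` is hypothetical, and the sketch assumes a strict doubling every two years, which is an idealization of the actual trend.

```python
def moore_growth_factor(years, doubling_period_years=2):
    """Factor by which the transistor count grows under idealized periodic doubling."""
    return 2 ** (years / doubling_period_years)


print(moore_growth_factor(20))  # 1024.0
```

Twenty years of doubling every two years means ten doublings, or roughly a thousandfold increase in transistor count.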