Stanford University professor John Ioannidis’s visit to ITMO University attracted a lot of attention. So many people wanted to see and listen to the person who made the world question the quality of medical research that some of them had to sit on the floor or stand in the aisles of the assembly hall in one of ITMO’s buildings. The hosts even set up an additional broadcast for those who couldn’t find a place in the hall.

Professor Ioannidis had promised to cover two key issues in his hour-long lecture: what is wrong with most biomedical research and how it can be made right.

“It’s my first time in St. Petersburg, but it seems to me I have been here countless times in my imagination,” began the professor. “Naturally, it is an important city for our civilization in many respects – for science, art, literature and poetry.”

After this lyrical greeting he moved on to the main topic of his lecture by saying: “The majority of medical and clinical studies are, unfortunately, useless.” It is a rather disturbing conclusion, as we expect scientists to deliver breakthroughs that would save many people or at least improve the quality of their lives. And yet, as Dr. Ioannidis demonstrated at the very beginning of his presentation, the majority of articles published today do not pass the test of eight simple questions that can help one evaluate their quality.

“First of all, the main issue is that we ourselves have to understand if the problem we are about to solve actually exists or if we’re making it up. Second, context is important: what do we already know, do we need more research or do we already have answers to all the questions? Third, informational benefit. If we conduct one more study, what value will it have? One has to understand that it is not a question of results that we get, be they positive or negative, as even the latter can be hugely beneficial by changing our conclusions. Fourth, pragmatism: will your study have real life effects? Fifth, focus on the patient: are we asking the patients about their concerns or are we making them see things our way? Sixth, is it worth spending money on? Seventh, can we actually conduct this study? It is often that we do not see things as they actually are when we are excited about an idea. And lastly, transparency: is this study open? Can we trust it? Do we see the way information was collected and how it was analyzed?”

Professor Ioannidis stressed the transparency issue again, calling it a matter of foremost importance. If a study is not transparent, then how can we trust any of its other aspects? That being said, relying only on high-ranking journals with a high impact factor is not a solution, either. Even in those publications, not every article can provide satisfying answers to the aforementioned questions. Some excellent research articles are being published in small local journals and it is easy to miss them if you judge by prestige alone.

Inconsistency and novelty

Still, even if a paper explores an actual problem, presents a new result and is worth the money that was spent on it, all of this doesn’t make it good, adds John Ioannidis.

“A couple of years ago we did a big project where we analyzed the articles that checked previously published results. In 55% of the new articles, the authors were getting the exact opposite result while using the same data and methods that were used in previous studies. The original paper would claim the treatment to be working and the new one proved that it wasn’t. Who was writing these new articles? Some sly scientists willing to put down those who came before? No, these were the same exact people going through their original data and getting a new result. Why did it happen? Because it is the only way to publish a new article using old data without conducting a new experiment,” says Ioannidis.

All of this confuses the general public. People read about new discoveries in papers and on the internet, but these reports constantly contradict each other. This happens because, while science lacks transparency, researchers or other interested parties can choose from millions of studies and methods those that best fit their hypotheses or interests, leaving the public clueless.

“For example, there is a constant discussion about the harm or benefit of drinking coffee,” explains the professor. “Three of the most popular studies of the last few years cover this topic. They claim that drinking three cups of coffee a day will make you live longer. I am sorry, I did drink a cup after a six-hour tour of the Hermitage, but I seriously doubt it will make me live longer.”

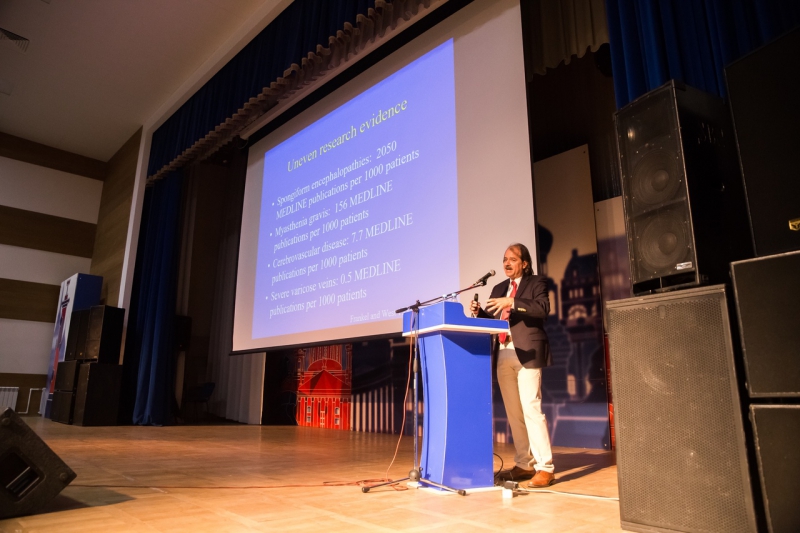

One other issue concerns the frequency of publications, which does not always correspond to the frequency of the illnesses that they study.

“Here are some stats from 30 years ago. Back then, each case of transmissible spongiform encephalopathy (a group of neurodegenerative conditions) corresponded to over 2,000 publications. Each patient’s grave was littered with 2,000 papers,” says Ioannidis by way of example. “All that when varicose veins had a ratio of only 0.5 articles per 1,000 patients. Such a serious problem that affects so many people didn’t attract a lot of attention, and it stays that way.”

It is even worse with the geographic distribution of research. As demonstrated in the lecture, around a quarter of the world’s epidemics occur in Equatorial Africa, but only 0.1% of all medical and clinical studies are conducted there.

“And most of the studies that have been carried out there didn’t deal with the problems that concern local people. They covered the problems that concerned residents of California or Massachusetts,” he adds.

Destroying the pyramid

We are all used to perceiving evidence-based medicine as based on a hierarchy of evidence. As he speaks about it, Professor Ioannidis switches to a slide depicting the Great Pyramid of Giza. Just like this Wonder of the World, the pyramid of evidence-based medicine is viewed as indestructible and perfectly constructed. Situated on top are critical reviews and just below them are the results of randomized experiments. Even lower, almost on the ground, are expert opinions.

Then, however, the professor demonstrates how this pyramid falls down. The majority of studies are questionable and their data is not reliable. Even a verifiable result will not help a physician who has to make a decision about the treatment or do anything to help a patient. Those who work in healthcare are not always aware of this problem and, even if they are, are not qualified enough to tell a poor-quality publication from a good one.

“Most universities in the world teach their students to do the evaluation, to be experts,” says Ioannidis. “But I think that they have to be teaching students to think, to ask questions. They have to provide this scientific tool set that the students need to analyze the proof they get their hands on.”

And yet, the hardest blow for evidence-based medicine comes from the financial interests of the players in both the pharmaceutical and medical services markets. Companies test their drugs but they themselves fund these tests. Even if company B is testing products of company A, we find out that at the same time company A is doing the same for company B.

“It’s easier to ask an artist to bring their painting to a gallery and then choose the best picture of the exhibition,” says the professor in dismay.

All of this leads to a conclusion: despite the great victories of evidence-based medicine, it is stuck in the past. Its place is taken by “some kind of reincarnation of expert medicine”.

What can be done?

“I have painted a rather dark picture and yet I don’t see anyone running away in panic,” jokes Ioannidis. “That’s good, hold on, because we have solutions for many of the problems I’ve described above.”

The first and foremost step is accepting the problem. Then we have to combat it by increasing the transparency, reliability and openness of our studies. This can be done, according to John Ioannidis, through teamwork among researchers and active collaboration between different groups and institutions. Then, arrangements must be made to ensure that records are kept of all research and that scientists publicly outline their hypotheses, research strategies, and plans. Some journals already allow researchers to publish their plans even before the actual publication of the results.

“This field has witnessed significant changes, because 12 years ago we had no such thing as registration,” explains the professor. “Now there are 200,000 randomized studies that have undergone the registration procedure. It is far from over: many studies are still not being registered, and in many more the published protocol doesn’t make it clear what method is going to be used.”

It is no less important, according to Ioannidis, to make the already published findings available to everyone. In his opinion, the very possibility of independent parties verifying their results disciplines the authors of original papers.

“In the last 10 years we’ve seen great progress,” says the professor. “The BMJ and PLOS Medicine journals have made it impossible to publish an article without sharing your data. We have analyzed the papers published there over the last three years and requested data from the authors, and half of them said yes (which is already a great achievement in comparison to what we had in the past). As a result, we have found some mistakes, but they were not critical and didn’t affect the whole picture. Thus, the right environment already improves the quality of results.”

Finally, says Dr. Ioannidis, we have to improve the level of training for specialists, namely their ability to work with statistics and data analysis. It is also crucial to stop racing for the greatest number of publications and pay more attention to their quality, citation rate and translation into other languages. We have to create a system where it would be beneficial for your status, your prestige and even your finances to do good, quality science.

“Science is the best thing to happen to humanity. I don’t want anyone to walk away thinking science is bad and we have to get rid of it and replace it with something else. We have to find a way to make our research useful, especially those of us who do medicine. It is not easy. We have not lost evidence-based medicine and the studies based on its methodology. The fact that you are all here today is proof of that. I think we can do it [evidence-based medicine] and succeed in it.”