Not so long ago, you gave a lecture on AI and medicine. Could you say a few words about the conference and your presentation?

It was a medical event organized by medical specialists and for medical specialists. The conference was held at ITMO. Luckily, the organizers managed to lower the risks of transmitting COVID-19 during the event, and the conference took place offline.

I was asked to give a talk about medicine and our research in the field of artificial intelligence. This is a rather broad topic, so I decided to focus strictly on what prevents technologies from completely replacing humans in this sphere.

And what are these problems?

While preparing for my talk, I read lots of articles on related topics, and nearly all of them listed problems and challenges that make such a scenario impossible. They all represented different opinions, so it was hard to get the facts straight. Nevertheless, I highlighted six key challenges.

The first one has to do with the complex landscape of medical education. If you want to become a doctor, you need to graduate from medical school, do your residency, and, perhaps, complete your postgraduate studies. Only around the age of 30 do you become a practicing specialist, and even then in a relatively narrow field.

What does that mean for AI? There is an enormous body of knowledge that doesn’t interlink very well. Each field contains an enormous number of specifics. Machines must master all of that in order to be good at medicine. Simply put, it would take a lot of effort to cover all the fields with algorithms.

Credit: depositphotos.com

What else?

The second problem is connected with cross- and interdisciplinary interaction. If we want to introduce AI in medicine, healthcare professionals and computer scientists must communicate with each other, and that’s always a problem. Computer specialists are not good at medicine. They need someone who can explain it to them. Doctors, in turn, have little to no expertise in computer science.

People tend to have high expectations for AI. Some, especially managers and administrative staff, believe that AI is a one-stop solution for everything, whereas practicing specialists tend not to expect much.

Interestingly, when we talked to doctors about AI, some of them mentioned disappointing experiences with earlier technologies. We couldn’t convince them that the new and old technologies have nothing in common except the name. That unfortunate experience stops them from trying again.

And here comes another problem...

What is it?

To put it bluntly, most doctors don't trust AI. However, the same can be said about bankers, engineers, security experts, and so on. They all want to have control over everything. Plus, today’s AI systems are often hard to explain. We know the input and output but not what happens in between. Even machine learning specialists struggle to explain the process in all its details. As a result, people don’t trust AI, and it will take a lot of time and practice to overcome that distrust.

Besides, everyone knows that things aren’t that simple with the algorithms themselves, either. We can hardly assess the stability of AI systems. Let’s say we collected data about patients in one region and used it to train an algorithm, and it runs smoothly. Yet we can’t be sure that it will do the same with data from other regions. What’s more, we can’t even tell whether the program will work well for different hospitals.

The thing is, we can’t possibly train algorithms on all data. It’s a long, complex, and insanely expensive process. In such cases, specialists always work with a sample of the data, and that sample may differ radically from the general picture. One example is programs that perform skin diagnostics: they perform differently depending on skin color. So, data has to be collected from people with different skin colors for the program to work effectively.
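One simple way to check for this kind of instability is to score a model separately for each region, hospital, or skin tone instead of reporting a single overall number. The sketch below is only an illustration: the function name `accuracy_by_group`, the group names, and the labels are all made up for this example and do not come from any real study.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for predictions split by a grouping attribute."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Made-up labels for illustration only (1 = disease present, 0 = absent).
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
# Hypothetical grouping: the first four patients come from the training
# region, the last four from a region the model has never seen.
groups = ["region_a"] * 4 + ["region_b"] * 4

print(accuracy_by_group(y_true, y_pred, groups))
```

A large gap between groups suggests that the training sample does not represent the wider population, even when the overall accuracy looks acceptable.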

And we should also have a significant amount of data for further training, right?

Not only that. Actually, lack of data is the fourth problem I'd like to mention. In medicine, the amount of data can never meet the needs of AI specialists.

It happens all the time: we come to medical specialists, and they say that they have loads of data, but we know that it’s still not enough for us.

They manually collect this information for months or even years. Here’s another example: we take several dozen diagnoses and can barely find examples of each among thousands of patients. What seems like a lot for medicine is actually close to nothing for machines.

We’re used to working with thousands and tens of thousands of examples, which is rarely achievable for medical specialists or even medical organizations. So, we have to figure out what to do with the data in each situation.
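The gap is easy to see once the examples are counted per diagnosis. The following sketch is a hypothetical illustration: the diagnosis names, the counts, and the threshold `min_examples` are invented, and the right threshold depends entirely on the task and the model.

```python
from collections import Counter


def underrepresented(diagnoses, min_examples):
    """Return {diagnosis: count} for diagnoses with fewer than min_examples cases."""
    counts = Counter(diagnoses)
    return {name: n for name, n in counts.items() if n < min_examples}


# Invented dataset: one common diagnosis and two rare ones.
records = ["diagnosis_a"] * 50 + ["diagnosis_b"] * 3 + ["diagnosis_c"] * 1

# The threshold of 10 is an arbitrary assumption for this example.
print(underrepresented(records, min_examples=10))
```

Even a dataset that looks large in total can leave most diagnoses with only a handful of cases each.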

Furthermore, the data is extremely diverse. It is collected from different ultrasound and tomography machines and comes in different formats and resolutions. That may not be a big deal for healthcare professionals, but it is for artificial intelligence.

But it’s not enough to simply collect data. It has to be interpreted. Roughly speaking, someone must explain the difference between a picture of a healthy person and a picture of a person suffering from a disease.

AI specialists call this markup. Markup is closely linked to the fifth problem. Basically, machine learning aims to recover the relationship between observed quantities and the quantities we want to predict. If there is no link, machines are powerless as well. And the weaker the link gets, the less accurate our predictions become. This brings medical patterns themselves into question, as they are not always as clear as we would like them to be.

We don’t always have proven patterns between medical interventions and patients’ recovery. There are also doctors who go beyond evidence-based methods.

However, evidence-based medicine faces a number of problems, too. Even doctors joke that if one patient had three doctors, then they’d have three different diagnoses. It's the same with markups. Three specialists give us three different markups. Moreover, the same person can mark an ultrasound one way and then after several months, another way.
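Disagreement between annotators can be measured. One common statistic is Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance given their label frequencies. The sketch below is a minimal pure-Python version for illustration; the function name and the labels are assumptions for this example, not something used in the research described here.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: based on how often each annotator uses each label.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one and the same label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Invented markups of four ultrasound images (1 = pathology, 0 = normal).
doctor_1 = [1, 1, 0, 0]
doctor_2 = [1, 0, 0, 0]

print(cohen_kappa(doctor_1, doctor_2))
```

A value of 1 means perfect agreement and a value near 0 means agreement no better than chance. The same function can compare one specialist's markup of the same images made several months apart.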

It all comes down to the fact that we try to train AI to see patterns that even we can’t see. It’s a challenging task, and the results obtained are difficult to verify. One specialist could do the markup and train an algorithm. The program works well for this person, but it may not work for others.

You named five reasons. What’s the sixth?

The final group of problems is what I call “organizational obstacles”. In order to introduce something like machine learning technologies, you need to organize the process and take concrete steps to make it happen.

But as of today, even simpler things can’t be done because the healthcare system is very complex and excessively bureaucratized. For example, the idea of introducing a common medical database has been around for a while. It’s really cool: all information on patients would be stored in one common place, so it wouldn’t matter where exactly a patient is. A person from one city could come to a hospital in another city, and the doctors would get all the information they need in a couple of clicks.

This would be beneficial from the standpoint of AI as well, since such a system would become a great source of data. But although this has been a hot topic for years, and newspapers keep saying that such databases will be introduced soon, there is still no real progress. And introducing a common database is a much easier task than introducing AI methods.

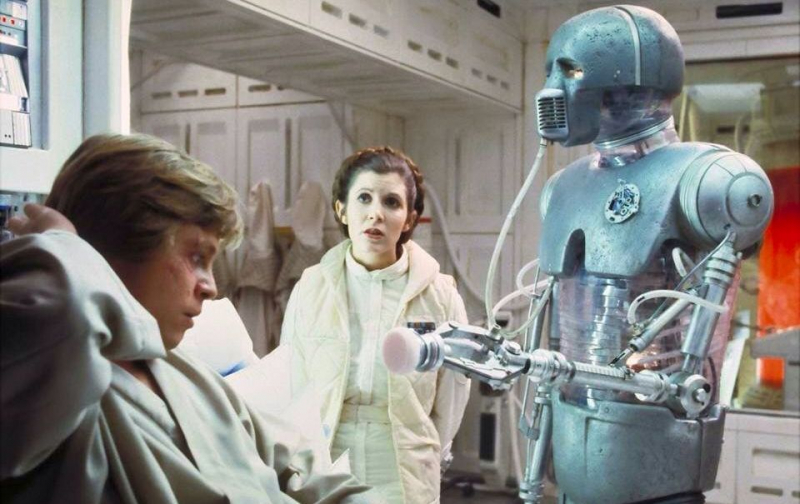

So you say that we won’t be seeing medical droids like those in Star Wars anytime soon?

Yes, in the foreseeable future, algorithms won’t replace doctors. They will just help.

From a socioeconomic standpoint, this may seem beneficial. We know that governments often reduce the number of doctors even when there’s nothing to replace them with. And here, we get an alternative. Nevertheless, it seems that from now on, we’ll be seeing doctors working alongside AI and not AI replacing doctors.

Apart from those already mentioned, there are also other factors that speak in favor of such a development. For example, there’s the issue of responsibility for a wrong diagnosis. Needless to say, even the best doctor or algorithm can’t give you correct diagnoses 100% of the time. There’s always a chance that they will make a mistake.

The developers won’t agree to take this responsibility. And if doctors are the ones held responsible, they won’t just blindly follow a machine’s advice. This is why we aren’t talking about robo-doctors but rather decision support systems. An algorithm gives advice, but it is the doctors who make the final decisions.

2-1B surgical droid. Credit: rpp.pe

This must be more comfortable for patients, as well?

Yes, there’s also the issue of patients’ trust in AI. We don’t have a clear understanding of how well patients would react to interacting with a machine rather than a person. This problem is still understudied, and we can only make assumptions, for example, based on how people interact with chatbots.

Then again, we see much progress in this regard. Some five years ago, interactions with chatbots kindled clients’ interest at best and provoked resentment at worst. Now, clients have more confidence in this technology. They are OK with using chatbots and not cross-checking the results with a real person.

On the other hand, client support isn’t a very sensitive issue in comparison to healthcare. For example, we can’t really tell how good AI is at consoling patients or persuading them to take specific measures. Writing out a prescription is one thing, but getting a patient to follow it may be a lot more difficult. So it’s hard to say whether AI can compete with a real doctor in this regard.

So we can assume that machine learning will continue to be introduced in the medical field. Machines will get to do more routine or simple actions that call for precision. But all of that will take place under the supervision of real doctors.