What is research reproducibility, and why does it play such a big role today?

Alexander Kapitonov: The IEEE scientific community has come to understand that too many scientific articles contain false information, sometimes even articles that are cited in significant sources and have passed a number of checks. For example, we all remember the resounding scandal over the rapid blood-testing technology of the company Theranos (Theranos was a private healthcare technology corporation. Initially hailed as a revolutionary innovator, it saw its reputation badly tarnished by its false claims of having developed blood tests that required only very small amounts of blood – Ed. note).

The scientific community has started to realize that each article has to be accompanied not only by a description of the mathematical model used, but also by the entire body of data the author worked with in the course of the research; otherwise it should not be approved for publication. This is what is called research reproducibility: everyone has the means and the opportunity to recreate an experiment. I myself have many times been in a situation where I was unable to reproduce the steps that underlay a piece of research, and its results usually turned out to be unworkable in practice. There is a whole range of problems that are not taken into account and, consequently, make research futile. There is now a common desire to change this state of affairs.

Would it be correct to say that the concept of research reproducibility has a special application in robotics?

Fabio Bonsignorio: We should start by saying that robotics is, in general, very different from other scientific fields. Though it has existed for over 50 years, it is still a very young field. You could say that it was the recent achievements in the field that actually made it a science. To a large extent, this success should be attributed to the development of interfaces designed for people, with prosthetic devices being one example. In most cases, robotics is seen as a science of humans’ ability to develop their physical system and bring it closer to perfection. This is the first thing that sets the field apart from others.

Another reason pertains to how the developments are applied. As it is a very young field, people have got used to seeing robots do some very bizarre things. This is something that, for example, the robots created by the company Boston Dynamics are widely known for: there are videos of them drawing and suchlike, and the public believes them and draws inspiration from them. But when we talk about scientific progress or more serious applications of these results, we have to have a clear understanding of how many times our robot would be able to repeat its actions. Statistics is crucial here. In the majority of cases, robots can’t repeat their actions reliably many times over. In a 2018 interview, Marc Raibert, the founder of Boston Dynamics, expressed his concern that people today expect too much from robots.

We remember from our school years that in science, for example in physics, we work with well-known formulas and can thus replicate the experiments conducted by the scientific greats. This is the value of the scientific method, and all of the scientific progress that has so much impact on the modern quality of life was built on it. In turn, the method implies that a researcher has an object of study, tries out an idea and establishes that the idea works, and that others can then test the results obtained. If I can recreate your results, this means that I can continue the development of the field, working in this specific direction. This is the idea at the core of scientific methodology.

But for some fields, robotics included, this process is not so clear-cut. The main problem here is that the community has not developed a common practice for reproducing scientific results. This process has only just started to be fleshed out.
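To make the point about statistics concrete, here is a minimal Python sketch of how one might estimate a robot’s success rate from repeated trials. It uses the Wilson score interval, which is a standard choice but an assumption of this example rather than a method named in the interview; the function name `wilson_interval` and the trial counts are hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96):
    """Wilson score interval for a success probability.

    z = 1.96 corresponds to roughly 95% confidence.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    ) / denom
    return center - half, center + half


# One successful demo (e.g. a single video) versus 18 successes in 20 runs.
# The counts are illustrative, not measured data.
print(wilson_interval(1, 1))
print(wilson_interval(18, 20))
```

With a single successful run, the lower bound of the interval is only about 0.21, so one impressive demonstration says very little about how reliably the action can be repeated.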

How do scientists and developers in the field of robotics react to being required to make all their data sets public?

Fabio Bonsignorio: We started to discuss this question about ten years ago. When we were creating the European Robotics Association, euRobotics, with the aim of initiating this discussion, this was a concern for almost everyone in the field, and everyone shared the willingness to bring about research reproducibility in robotics. As the majority of scientific materials are published online nowadays, providing the whole data set is no longer a problem.

After many discussions and workshops, which we organized within our community, the journal IEEE Robotics and Automation (of which Fabio Bonsignorio is Associate Editor – Ed. note) published the first fully reproducible scientific paper, and this happened only this year. This proved to be one of the journal’s most successful issues. Making research reproducible calls for a significant amount of time and effort: it took us four years to be able to start publishing such papers.

It is important that we don’t present these works as purely academic research: we want these results to be put into practice and to become flexible enough to suit different sets of conditions. We focus on cooperation between researchers: it is of interest not only from the scientific standpoint, but also from the economic one.

You are working on several joint projects this summer. Can you tell us what these projects are, and how they are connected to the topic of research reproducibility?

Fabio Bonsignorio: We have a lot of projects revolving around multi-agent technology for mobile robots that can be used both on land and on water, as well as blockchain technology. These are long-term initiatives, and our strategy is to develop solutions that would allow people to work in friendly collaboration with robots.

Right now, we are working on a project where we use drones to monitor various environments; specifically, we are interested in water environments. The project involves several flying and floating drones. The element of reproducibility here is that all the data the drones handle in the course of their work becomes publicly accessible thanks to blockchain technology. Blockchain mechanisms are also used for storing the published data, which keeps the measurement results unchanged. In other words, we display our research results through a reliable system; in this case, blockchain serves as a guarantee that the data is authentic. The main focus for us, though, is providing a data set that anyone can use to recreate our experiment.

Alexander Kapitonov: Ideally, this project should help set up a system for monitoring the condition of the water in the Neva River. By the way, there is a similar project that was implemented as part of a Master’s/PhD grant under the guidance of Igor Pantyukhin, an assistant at ITMO University’s Faculty of Infocommunication Technologies; it also serves the purpose of environmental monitoring, the only difference being that there the drones focus on searching for ignition points in forest areas. In that case, only flying drones are used, tasked not only with detecting the ignition points but also with locating people and cars in these areas. In one of the pilot tests, we managed to increase the specialized services’ reaction speed threefold. Usually, the services only know the direction of the fire, but now there is an opportunity to pinpoint its location. The project has already come to a close, and today we present its results at various events.
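As a rough illustration of the tamper-evidence idea described above, here is a minimal Python sketch of a hash-chained measurement log. It is not the project’s actual system: the record fields (`sensor`, `ph`), the function names and the sample values are all hypothetical, and a real blockchain adds distributed consensus on top of this basic chaining.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(payload: dict, prev_hash: str) -> str:
    """Hash a measurement together with the hash of the previous record."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: list, payload: dict) -> None:
    """Append a measurement, linking it to the last record in the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "payload": payload,
        "prev": prev_hash,
        "hash": record_hash(payload, prev_hash),
    })


def verify_chain(chain: list) -> bool:
    """Return True only if no record has been altered or reordered."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != record_hash(record["payload"], prev_hash):
            return False
        prev_hash = record["hash"]
    return True


# Hypothetical water-quality readings from two drones.
log = []
append_record(log, {"sensor": "drone-1", "ph": 7.2})
append_record(log, {"sensor": "drone-2", "ph": 6.9})
print(verify_chain(log))  # True

log[0]["payload"]["ph"] = 8.5  # tamper with a published value
print(verify_chain(log))  # False
```

Because each record’s hash depends on the previous one, changing any published value breaks verification for the whole chain, which is the property that lets others trust the data set they are trying to reproduce.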

You are also collaborating with your colleagues from MIT…

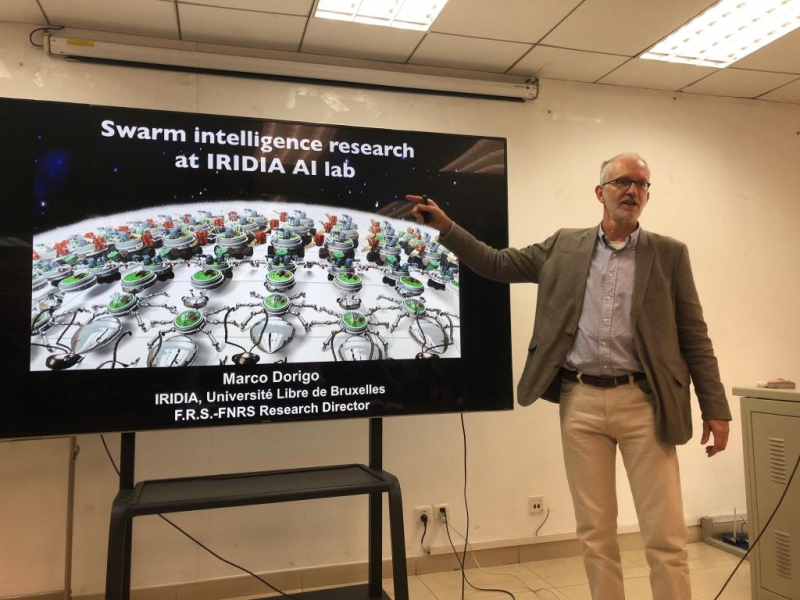

Alexander Kapitonov: Yes, this cooperation is connected to our work on applying blockchain technologies to robotic systems. Specifically, we describe how blockchain mechanisms can be used to establish communication between robots. To that end, we have developed long-term cooperation with MIT Media Lab; our main partner is Eduardo Castello Ferrer, a holder of a Marie Curie Fellowship Grant. He is currently working with equally prominent experts, professors Alex Pentland and Marco Dorigo, on studying the potential of combining robotics and blockchain to create new security, behavior and business models for distributed robotic systems, so our research interests coincide.

Is your cooperation with your MIT colleagues focused on a specific project?

Alexander Kapitonov: We work not on a specific project but on the development of an entire field by actively promoting the use of blockchain solutions for communication between robotic systems. We organize symposiums and workshops together and publish joint research papers in this field.

Fabio Bonsignorio: Blockchain is great for storing data and guaranteeing its integrity and consistency. But parallel to that, we also promote blockchain as a medium for economic interaction between automated systems. The simplest example from the future would be a situation where an automated Tesla truck arrives at an automated Amazon warehouse, and ownership of the goods needs to be transferred after they have been dispatched. As one of the most transparent and reliable solutions for such tasks, blockchain can play a key role in this process.

In your last interview with ITMO.NEWS, you mentioned that machine learning, big data and computer vision would give industrial enterprises the opportunity to communicate with each other, expedite the task-setting process and independently solve these tasks. Does this signify a new stage in production and economics?

Fabio Bonsignorio: Machine learning, blockchain and artificial intelligence are just like Lego blocks: you can apply them in the field of production, where trust is very important, and thereby transform it altogether. The reality is that an enterprise or a service supplier has to be as adaptable as possible; they need this kind of flexibility. Technologies like IoT, machine learning and AI are precisely the way to ensure more flexibility and room for maneuver. We are capable of rebuilding the whole industry, and this is a massive opportunity for European countries. Companies, societies and countries can jump on this bandwagon, and this will bring about a sea change in economics. And research reproducibility is crucial in this transition.

Alexander Kapitonov: Science will allow economics to rise to a new level. Today, there are many examples of people moving towards collaborative consumption, the so-called sharing economy; this means lower costs for goods and more effective ways of using them. And it is the scientific community that is now advancing the idea that, despite the huge number of scientific journals, fields and solutions, it is really difficult to find mechanisms out there that would actually work in practice. There is no way that you can familiarize yourself with all the research there is today. And this leads us back to the value of reproducibility.

I try to use the concept of reproducibility in our educational process because I think that it needs to be more open and transparent. Reproducibility and transparency are two new and very important trends of today. Students must publish their reports and the results of their lab work, inviting everyone to check or recreate their experiments. This improves the quality of work of both students and educators.

What will be your main research focuses in the coming months?

Fabio Bonsignorio: There are some ideas on introducing mobile robots into the collaborative consumption model. But as of now, our main focus is on environmental monitoring and mobile robot networks, cyberphysical systems, and, of course, reproducibility.

Alexander Kapitonov: Sharing full data sets as part of research results is our number one priority, an idea that embodies a whole new type of thinking. It is worth noting that this approach has long been practiced in the robotics community. For instance, 12 years ago the developers of the Robot Operating System made it an open-source project, and all the results are published in open-access repositories such as GitHub, the largest web service for hosting IT projects.

The new generation is far less interested in competing; it’s the last thing on people’s minds now. I hope that the next step will be that many research groups start publishing their results together with the program code and source data, thus helping each other and the development of their scientific field at large. Synergy in research is something that will propel humanity forward.