Nanite and virtualized geometry

To better understand how innovative Nanite is, let’s briefly explore the limits of the current generation of game engines. Most of them (Dreams being a rare exception) operate within the paradigm of vertices, edges and polygons, which serve as the most primitive building blocks of any geometric model. Striking the right balance between the visual fidelity of game assets and their polygon count has always been a crucial and nontrivial task for any developer. And while polygon count is not the only factor affecting rendering performance, extremely high polygon counts can make an application unusable for real-time interaction. Modern game engines can handle up to a few million triangles (the simplest form of polygon). In some cases, this number can rise to ten or twenty million, depending on the lighting setup and other conditions in the scene. Nanite can handle billions. If that holds up in practice, it represents an increase of roughly two to three orders of magnitude in the amount of geometry that can be handled compared with any solution currently on the market.

The author of the technology, Brian Karis, a senior graphics programmer at Epic Games, has said that it took him "over a decade of personal research [and more than] three years of full time work" to deliver the solution. However, the core concept of the technology is not new. It goes back to the early 1980s and the REYES (Renders Everything You Ever Saw) architecture developed by Lucasfilm’s Graphics Group, the precursor of today’s Pixar. What makes Nanite unique is its implementation.

While Epic Games has not revealed the details, Brian Karis has confirmed that his approach is partly inspired by two papers (paper 1 & paper 2). Both focus on virtualized geometry, which, in simple terms, makes it possible to reconstruct complex meshes at runtime from two-dimensional RGB maps that store geometric data rather than color. Further discussion of this topic can be found on the author's blog.
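To make the idea of reconstructing a mesh from an RGB map more concrete, here is a minimal sketch in the spirit of "geometry images", assuming that each texel's three channels encode an X, Y, Z position instead of a color and that adjacent texels are stitched into triangles. This illustrates the general concept only; it is not Nanite's data format, which Epic has not documented, and the 8-bit bounding-box encoding and function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def decode_texel(rgb: Sequence[int], bbox_min: Vec3, bbox_max: Vec3) -> Vec3:
    """Hypothetical encoding: map 8-bit channels (0..255) linearly into the mesh's bounding box."""
    return tuple(lo + (v / 255.0) * (hi - lo) for v, lo, hi in zip(rgb, bbox_min, bbox_max))

def mesh_from_geometry_image(image: List[List[Vec3]]):
    """Turn a rows x cols grid of decoded XYZ texels into (vertices, triangles)."""
    rows, cols = len(image), len(image[0])
    # Each texel becomes one vertex, stored in row-major order.
    vertices = [image[r][c] for r in range(rows) for c in range(cols)]
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of this grid cell's top-left vertex
            # Split every grid cell into two triangles sharing a diagonal.
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles

# Usage: a 3x3 image describing a flat 2x2 patch yields 9 vertices and 8 triangles.
image = [[(float(c), float(r), 0.0) for c in range(3)] for r in range(3)]
vertices, triangles = mesh_from_geometry_image(image)
print(len(vertices), len(triangles))  # 9 8
```

The appeal of such a layout is that the geometry becomes a regular 2D array, so it can be stored, filtered and streamed with the same machinery as ordinary textures.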

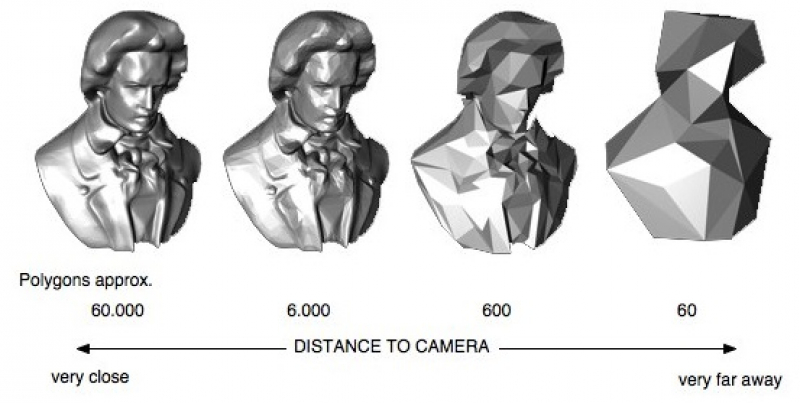

However, the most important point is that, according to the demo, "no baking of normal maps, no authored LODs" are needed. This statement refers to an optimisation process that should ideally be applied to every model meant for a real-time application. It usually includes stripping an asset of every small geometric detail and creating an LOD (level of detail) chain, which is essentially a set of copies of a low-poly model with progressively less detail.

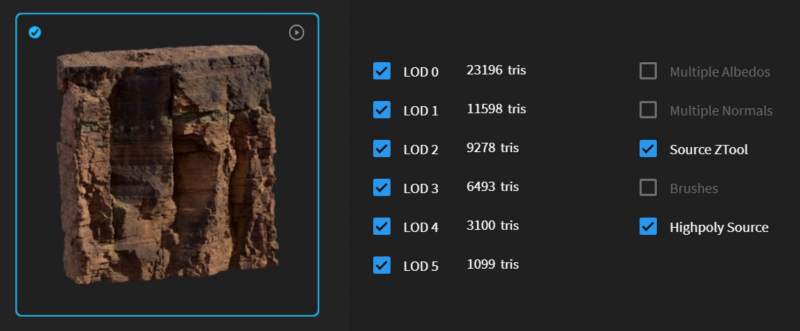

LODs are swapped at runtime based on the distance between the player and the model, while the most detailed variant, typically called LOD0, is shown only in the player’s immediate proximity. Let’s take a closer look at one of the demo’s assets as an example. It is photoscanned geometry from Quixel Megascans, the asset library of Quixel, one of the world’s leading photogrammetry companies, which was recently acquired by Epic Games.
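Distance-based LOD switching can be sketched in a few lines. The switch distances below are hypothetical values chosen for illustration; engines such as Unreal typically express LOD thresholds in terms of the object's projected screen size rather than raw distance, but the principle is the same.

```python
import bisect

# Hypothetical switch distances (in metres) for a four-level chain LOD0..LOD3.
LOD_SWITCH_DISTANCES = [10.0, 25.0, 50.0]

def select_lod(distance: float, switch_distances=LOD_SWITCH_DISTANCES) -> int:
    """Return the LOD index to draw for an object `distance` away from the player.

    LOD i is used while distance < switch_distances[i]; beyond the last
    switch distance, the coarsest level (len(switch_distances)) is used.
    """
    return bisect.bisect_right(switch_distances, distance)

for d in (5.0, 10.0, 30.0, 120.0):
    print(f"{d:>6.1f} m -> LOD{select_lod(d)}")
```

Because the swap happens at a hard threshold, an object crossing 10 m changes to a different mesh from one frame to the next, which is why LOD transitions can be noticeable.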

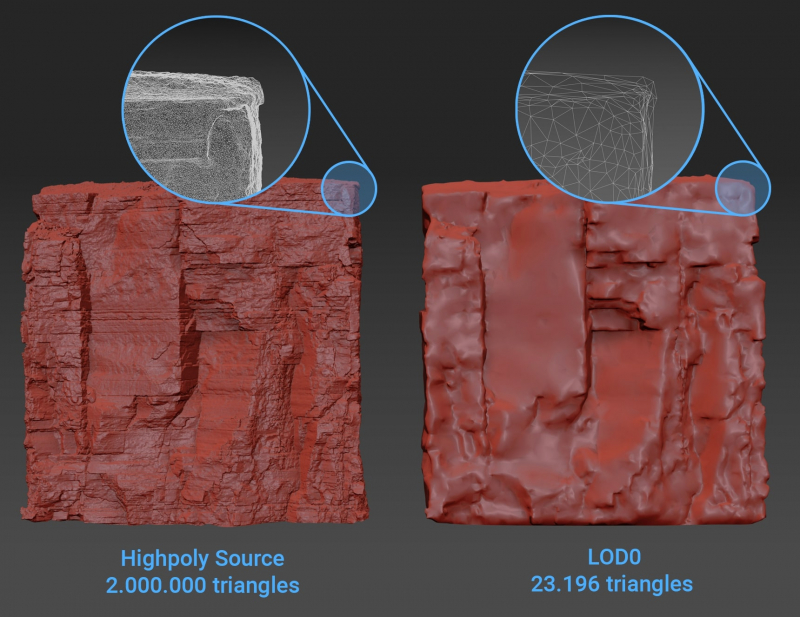

Quixel allows its customers to download different variations of each asset, including the aforementioned LOD chain (ranging from 23,196 to 1,099 triangles for the asset in the screenshot), a high-poly source (around 2 million triangles) and a source ZTool (ZBrush's native format), which stores the photoscanned geometry in its original state (almost 17 million triangles). An offline renderer used for, say, film production would effortlessly handle the high-poly variant, and even the most detailed source geometry could be used for some extremely close shots. A game engine, on the other hand, can realistically use only the low-poly assets. As we can see, even the most complex LOD0 in this case contains only ~1.15% of the high-poly source detail and ~0.14% of the original ZTool source detail.
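These ratios are easy to check. Using the rounded triangle counts quoted above (about 2 million for the high-poly source and about 17 million for the ZTool), a quick calculation gives:

```python
LOD0_TRIANGLES = 23_196
HIGH_POLY_TRIANGLES = 2_000_000   # "around 2 million"
ZTOOL_TRIANGLES = 17_000_000      # "almost 17 million"

def percent(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, in percent."""
    return 100.0 * part / whole

print(f"{percent(LOD0_TRIANGLES, HIGH_POLY_TRIANGLES):.2f}%")  # 1.16%
print(f"{percent(LOD0_TRIANGLES, ZTOOL_TRIANGLES):.2f}%")      # 0.14%
```

The small gap between 1.16% and the ~1.15% quoted in the text is consistent with the high-poly count being rounded to 2 million.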

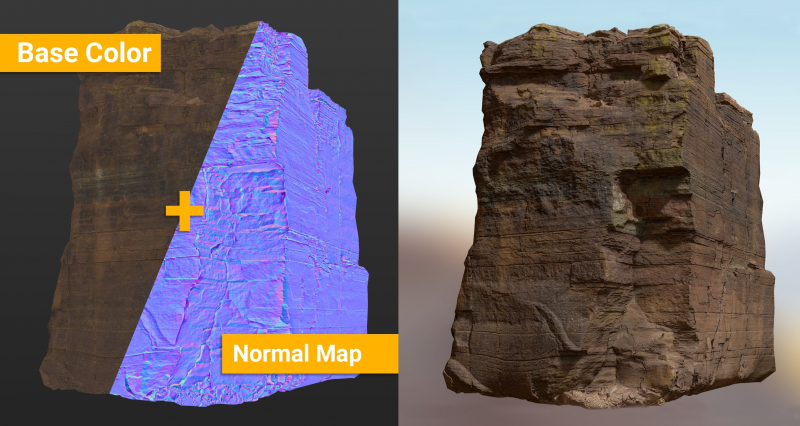

This tremendous loss in geometric complexity can be partially compensated for by transferring data from a high-poly model to its low-poly counterpart. That’s where normal maps come into play. The concept of normal mapping is fairly straightforward. An offline baking tool compares every part of the low-poly model against its high-poly origin and renders, or “bakes”, the surface orientation of the missing detail into a 2D RGB normal map, which a game engine can later use to approximate the look of the high-poly source.
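The "RGB" in a normal map is simply a unit vector squeezed into color channels. A common convention, assumed here, maps each component from the range [-1, 1] to the 0 to 255 range of an 8-bit channel, which is why flat areas of tangent-space normal maps appear light blue (roughly 128, 128, 255). This sketch covers only the encoding step, not the baking itself, which in practice involves casting rays from the low-poly surface towards the high-poly one.

```python
import math

def encode_normal(n):
    """Pack a unit normal (components in [-1, 1]) into 8-bit RGB values."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)

def decode_normal(rgb):
    """Unpack 8-bit RGB back into a vector and re-normalise it (quantisation breaks unit length)."""
    v = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

flat = encode_normal((0.0, 0.0, 1.0))   # a normal pointing straight out of the surface
print(flat)                              # (128, 128, 255)
print(decode_normal(encode_normal((0.6, 0.0, 0.8))))
```

At render time the decoded normal replaces the low-poly surface normal in the lighting calculation, which changes shading but not the actual shape of the mesh.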

However, LOD creation and normal map baking are semi-automated processes that take a significant amount of time and manual work and, as a result, shift digital artists’ focus from artistic tasks to mundane technical ones. They also have drawbacks of their own. Since normal mapping is just a trick that changes how light interacts with a surface without producing any real geometric detail, it often breaks down at grazing angles and under close inspection, where the flat, low-poly silhouette gives it away. Swapping between LOD models is usually noticeable, and sometimes the engine simply can’t load the necessary assets fast enough when the player moves through the scene at high speed.

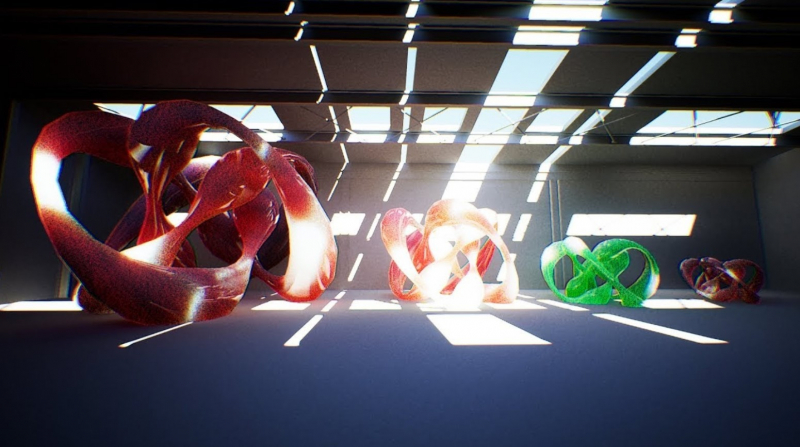

Nanite promises to leave LODs and normal maps in the past, allowing artists to bring the original high-poly assets straight into the engine. There’s no need to fake details or squeeze into tight polycount budgets anymore. The prospect sounds revolutionary, but the question is: how much storage and streaming speed are needed to accommodate such data? The demo relies heavily on the PlayStation 5’s SSD, whose raw bandwidth of 5.5 GB/s outmatches the majority of current PC SSDs, which run at 0.5 to 3 GB/s. Will extremely fast terabyte-class SSDs be necessary to run PC applications with hundreds of such high-quality assets (the one discussed above, for instance, takes up more than 600 MB)? Graham Wihlidal, a senior rendering engineer at Epic Games, has already partly addressed this issue, stating that “this tech is meant to ship games not tech demos, so download sizes are also something we care very much about.”
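For a rough sense of scale, the back-of-the-envelope calculation below estimates how long reading a single 600 MB asset would take at the bandwidths mentioned above. It assumes decimal units (1 GB = 1000 MB) and ideal sequential reads, and it ignores compression (which reduces the amount of data actually read) as well as decompression and seek overhead.

```python
def read_time_seconds(size_mb: float, bandwidth_gb_s: float) -> float:
    """Ideal sequential read time; decimal units (1 GB = 1000 MB), no compression."""
    return size_mb / (bandwidth_gb_s * 1000.0)

ASSET_MB = 600  # the Megascans asset discussed above ("more than 600 MB")

for label, bandwidth in [("PS5 SSD, raw", 5.5),
                         ("PC SSD, low end", 0.5),
                         ("PC SSD, high end", 3.0)]:
    print(f"{label}: {read_time_seconds(ASSET_MB, bandwidth):.2f} s")
# PS5 SSD, raw: 0.11 s
# PC SSD, low end: 1.20 s
# PC SSD, high end: 0.20 s
```

A tenth of a second per asset sounds small, but a scene built from hundreds of such assets multiplies it accordingly, which is why streaming only the detail that is actually visible matters so much.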

Global illumination

Global illumination (often abbreviated GI, and in game development frequently used interchangeably with indirect lighting) has historically been one of the toughest challenges for real-time computer graphics. To understand the underlying concept of GI, let’s look at a real-world example that can then be translated to the UE5 demo.

Imagine yourself standing in the middle of a pitch-black room. The sun is shining directly outside, but it is completely blocked by blackout curtains. The darkness is so impenetrable that you can’t see your own hands stretched out in front of you. This is because no light, be it direct or indirect, enters your room. So you decide to remedy this by slightly opening the curtains, creating a tiny slit between them. Light immediately fills the room. The most notable change is a bright line of light on the floor stretching from the gap in the curtains. This is the direct light contribution: the place where light rays hit a surface in your room for the first time. It’s usually called the first bounce.

But if the first bounce were also the last, you still wouldn’t be able to see anything around you, your own hands included, unless you moved them into the line of direct light. You’d probably find this situation hard to imagine, because you would instinctively expect the room to be at least moderately lit by now. And rightly so, since this is exactly what global illumination accounts for. Light rays travel through the room, starting from the place of the first bounce and then bouncing off different surfaces and objects a number of times until they finally reach your eyes, making the surroundings visible to you.

In simple terms, we can define indirect lighting in real-time graphics as the contribution of all light bounces following the initial one (sometimes called the zero bounce). But, as is often the case, the devil is in the details. When light hits a surface, a number of things can happen: it can be reflected, refracted, partially absorbed and/or travel through the object’s medium and leave it at some other point and angle. This, in turn, causes multiple phenomena. For example, if light rays are scattered chaotically in many different directions, the surface looks matte (diffuse) to us. If, on the other hand, they are reflected in a more coherent, parallel manner, they form a readable reflection on the surface, in the most extreme case making it mirror-like. If light rays travel through the medium of an object, the object has a degree of transparency, which is a notoriously hard case for game engines. There are other, less common cases, such as SSS (subsurface scattering) and translucency, which are equally important for the final result but are well beyond the scope of this article.
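The two extremes described above can be written down directly. A mirror reflects an incoming direction about the surface normal, while an ideal diffuse (Lambertian) surface sends light off in a random direction whose likelihood is proportional to the cosine of its angle to the normal. The sketch below uses the well-known trick of adding a random unit vector to the normal to get such a cosine-weighted direction; real materials fall somewhere between the two extremes, and the helper names are illustrative.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def mirror_reflect(d, n):
    """Perfect specular reflection of direction d about the unit normal n: d - 2(d.n)n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def random_unit_vector(rng):
    """Uniformly distributed direction on the unit sphere (normalised Gaussian sample)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        length = math.sqrt(dot(v, v))
        if length > 1e-12:
            return tuple(c / length for c in v)

def diffuse_bounce(n, rng):
    """Cosine-weighted random direction in the hemisphere around the unit normal n."""
    u = random_unit_vector(rng)
    s = tuple(a + b for a, b in zip(n, u))
    length = math.sqrt(dot(s, s))
    if length < 1e-12:          # u == -n: vanishingly rare, fall back to the normal
        return tuple(n)
    return tuple(c / length for c in s)

rng = random.Random(0)
print(mirror_reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)
print(diffuse_bounce((0.0, 0.0, 1.0), rng))                # some direction with z >= 0
```

Every mirror bounce of a given ray goes to the same place, whereas diffuse bounces scatter it over the whole hemisphere, which is one reason diffuse GI needs so many rays to converge.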

An ideal GI rendering solution must take all the aforementioned factors into account. Most offline renderers do so by using ray tracing and path tracing techniques to simulate how light behaves in the real world via approximated optical and physical laws. However, tracing thousands or millions of light rays per frame is generally incompatible with real-time applications because of its immense computational cost. Only recent hardware-accelerated ray tracing, such as that offered by Nvidia’s RTX graphics cards, allows some of these offline rendering methods to be used in real time, which arguably makes it the best solution for global illumination in game engines. Unfortunately, it comes at a very high price and is not yet powerful enough for the majority of cases, so most game engines have to fall back on other options to achieve any kind of real-time global illumination. And that’s where things get interesting with Lumen.
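Why are so many rays needed? Path tracers estimate lighting by averaging random samples (Monte Carlo integration), and the error shrinks only with the square root of the sample count, so halving the noise costs four times as many rays. The toy example below estimates the light arriving at a point under a uniformly bright sky, a case where the exact answer is known to be π times the sky radiance, so the error can be measured directly. The function name and setup are illustrative.

```python
import math
import random

def estimate_irradiance(num_rays: int, rng: random.Random, radiance: float = 1.0) -> float:
    """Monte Carlo estimate of irradiance under a uniform sky (exact value: pi * radiance)."""
    total = 0.0
    for _ in range(num_rays):
        # Uniform sampling over the hemisphere: cos(theta) is uniform in [0, 1).
        cos_theta = rng.random()
        # Integrand L * cos(theta) divided by the sampling pdf 1 / (2 * pi).
        total += radiance * cos_theta * 2.0 * math.pi
    return total / num_rays

rng = random.Random(42)
for n in (16, 256, 4096, 65536):
    estimate = estimate_irradiance(n, rng)
    print(f"{n:>6} rays: estimate {estimate:.4f}, error {abs(estimate - math.pi):.4f}")
```

Even this trivial case needs thousands of rays per point for a clean result; a full frame multiplies that by millions of pixels and several bounces.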

Lumen

Lumen is a three-stage algorithm that uses a different lighting technique depending on how far objects are from the player. It is worth mentioning, though, that all three parts of Lumen are already available for Unreal Engine 4 as separate solutions under different names. Let's take a closer look at each of them.

The first stage of Lumen is VXGI, which stands for Voxel Global Illumination. The technology was first presented by Nvidia and was later integrated into Unreal and other game engines. Its basic idea is to voxelize a scene (or part of it) by enclosing it in a grid of box volumes called voxels, creating a simplified, structured representation of the scene. Because lighting is then computed on the voxels rather than on the real geometry, the two are partially decoupled, which makes global illumination easier to compute. While the voxelized version of a scene is completely hidden from the player, it looks almost Minecraft-like to the lighting algorithms, which is far from the geometric complexity of the real world. However, Lumen applies this technique only to the most distant objects, which are not supposed to attract much attention.
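The voxelization step can be illustrated with a small sketch, assuming the scene has already been reduced to surface sample points, each carrying a lit radiance value. Points are binned into grid cells, and each occupied cell keeps the average radiance of its samples, producing the coarse, blocky representation that a voxel GI method can query instead of the real geometry. Actual VXGI implementations store more data per voxel (such as opacity and directional information) in multi-resolution structures; none of that is shown here, and the function names are hypothetical.

```python
import math
from collections import defaultdict

def voxelize(samples, voxel_size):
    """Bin ((x, y, z), radiance) surface samples into cubic voxels.

    Returns {(i, j, k): mean radiance of the samples inside that voxel}.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for (x, y, z), radiance in samples:
        cell = (math.floor(x / voxel_size),
                math.floor(y / voxel_size),
                math.floor(z / voxel_size))
        sums[cell] += radiance
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}

def radiance_at(grid, point, voxel_size):
    """Look up the stored radiance for the voxel containing `point` (0.0 if empty)."""
    cell = tuple(math.floor(c / voxel_size) for c in point)
    return grid.get(cell, 0.0)

samples = [((0.1, 0.1, 0.1), 1.0), ((0.2, 0.3, 0.4), 3.0), ((1.2, 0.0, -0.1), 5.0)]
grid = voxelize(samples, voxel_size=0.5)
print(grid)  # {(0, 0, 0): 2.0, (2, 0, -1): 5.0}
```

Note how two distinct surface points collapse into a single voxel with an averaged value: that loss of detail is the price of a cheap, regular structure, and it is why the approach suits distant objects best.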

The second part of Lumen deals with assets located relatively close to the player. It is called SDF, or Signed Distance Fields, and works by storing, at each point of a volume texture, the distance to the nearest surface of an object (negative inside the object, positive outside). Conceptually, this resembles a computed tomography scan: the volume can be thought of as a stack of 2D cross-sections through the object, with each texel holding a distance rather than a color. This kind of data is more commonly used for physical simulations and is rarely used by game engines to compute any form of lighting, which already makes the current generation of Unreal Engine stand out in this respect. To achieve shadowing effects, Unreal creates a global distance field volume that follows the player and caches all surrounding objects at low resolution.
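A signed distance field and its main use for shadows can be sketched as follows, assuming a single analytic sphere as the whole scene. `bake_volume` samples the distances into a small 3D grid, standing in for the volume texture, and `shadow_ray_blocked` uses sphere tracing: since nothing is closer than the distance stored at the current point, the ray can safely jump ahead by that amount at every step. Unreal's own implementation samples baked per-mesh and global volumes and also derives soft penumbrae from these distances; this sketch shows only the hard-shadow core, and all names are hypothetical.

```python
import math

def sdf_sphere(p, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return math.dist(p, center) - radius

def bake_volume(sdf, resolution, extent):
    """Sample `sdf` on a resolution^3 grid spanning [-extent, extent]^3 (a toy 'volume texture')."""
    step = 2.0 * extent / (resolution - 1)
    coords = [-extent + i * step for i in range(resolution)]
    return [[[sdf((x, y, z)) for z in coords] for y in coords] for x in coords]

def shadow_ray_blocked(origin, direction, sdf, max_dist=100.0, eps=1e-4, max_steps=128):
    """Sphere tracing towards a light: True if a surface is hit before max_dist."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return True        # reached a surface: the light is occluded
        t += dist              # safe step: no surface is closer than `dist`
        if t > max_dist:
            return False       # travelled far enough without hitting anything
    return False

scene = lambda p: sdf_sphere(p, (0.0, 5.0, 0.0), 1.0)
print(shadow_ray_blocked((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), scene))  # True: sphere in the way
print(shadow_ray_blocked((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), scene))  # False: clear path
```

The large, safe steps are what make distance fields attractive: a ray crosses empty space in a handful of lookups instead of testing every triangle in the scene.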

The third and most precise stage is SSGI (Screen Space Global Illumination). This method is quite new, especially compared to the previous two, and is designed to cover the nearby surroundings in the current field of view. As the name implies, SSGI calculates global illumination by tracing rays in screen space, meaning that objects that are partially or completely hidden from view, or simply not present in the frame, can’t faithfully contribute to or receive GI. In its current state, the technique is meant to be used only as a complement to other GI methods.
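The core limitation of screen-space tracing is easy to demonstrate. The sketch below marches a ray along a single row of a depth buffer (larger values mean farther away) and reports a hit once the ray passes behind the stored depth. If the ray leaves the screen first, there is simply no data to hit, which is why off-screen objects cannot contribute. Real SSGI implementations march in 2D and typically add a thickness threshold and hierarchical depth buffers; the function and its parameters here are hypothetical.

```python
def ss_trace(depth, start_x, start_depth, step_x, step_depth, max_steps=64):
    """March a ray across one row of a depth buffer.

    Returns the pixel index of the first hit, or None if the ray leaves
    the screen (or runs out of steps) without hitting anything.
    """
    x, z = float(start_x), float(start_depth)
    for _ in range(max_steps):
        x += step_x
        z += step_depth
        px = int(round(x))
        if px < 0 or px >= len(depth):
            return None   # off-screen: no information available in screen space
        if z >= depth[px]:
            return px     # ray passed behind the visible surface: treat it as a hit
    return None

row = [10.0, 10.0, 10.0, 5.0, 10.0, 10.0]      # a nearby object occupies pixel 3
print(ss_trace(row, 0, 4.0, 1.0, 0.5))          # 3
print(ss_trace([10.0] * 6, 0, 4.0, 1.0, 0.0))   # None: the ray exits the screen
```

Whatever the ray would have hit beyond the screen edge, or behind a foreground object, is invisible to this method, which is why SSGI is paired with world-space techniques.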

What to expect from Unreal Engine 5

Although the described techniques are already available, they are very likely to evolve and change in the next generation of Unreal Engine. There’s little doubt that the level of quality shown in the demo couldn’t have been achieved without a serious overhaul of the current GI solutions. Furthermore, Nanite and Lumen, the two major innovations, must be tied together quite effectively, since most contemporary GI algorithms aren’t designed to work with such huge numbers of polygons. The question of performance, however, remains open: even on the extremely powerful PlayStation 5 hardware, the demo runs at 30 frames per second, mostly at 2560 x 1440, with dynamic resolution and temporal upscaling (meaning that the internal rendering resolution was sometimes lowered to maintain a stable frame rate, and the image was then reconstructed to the output resolution at runtime). It is also supposed to run on the upcoming Xbox Series X and could deliver “pretty good” results on an RTX 2070 Super, according to Epic Games CTO Kim Libreri.
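Epic has not described how the demo chooses its resolution, but a generic dynamic resolution controller can be sketched under one simple assumption: GPU frame time scales roughly with the number of rendered pixels, that is, with the square of the per-axis resolution scale. Given the last frame's GPU time and a 30 fps budget of about 33.3 ms, the controller picks the scale that should just fit the budget, clamped between a hypothetical minimum of 50% and native resolution.

```python
import math

TARGET_MS = 1000.0 / 30.0  # 30 fps frame budget, about 33.3 ms

def next_resolution_scale(current_scale, gpu_ms, target_ms=TARGET_MS,
                          min_scale=0.5, max_scale=1.0):
    """Per-axis resolution scale for the next frame.

    Assumes cost is proportional to pixel count (scale squared), so the
    ideal scale changes with the square root of the time ratio.
    """
    ideal = current_scale * math.sqrt(target_ms / gpu_ms)
    return max(min_scale, min(max_scale, ideal))

def render_size(scale, width=2560, height=1440):
    """Internal render resolution before upscaling back to width x height."""
    return round(width * scale), round(height * scale)

scale = next_resolution_scale(1.0, gpu_ms=2 * TARGET_MS)  # last frame took twice the budget
print(round(scale, 3), render_size(scale))                 # about 0.707 of native per axis
```

A production controller would also smooth the measurements over several frames to avoid oscillating, and the temporal upscaler is what hides the reduced internal resolution from the player.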

The first chance to experience these new technologies will arrive in early 2021 with the preview release of UE5, followed by the full version later the same year. Complete tech documentation is also said to be released in the near future.

Written by Pavel Vorobyev.